当前位置:网站首页>Scheduling with Testing

Scheduling with Testing

2022-06-22 20:38:00 【ZZZZZ Zhongjie】

. Abstract

We study a new class of scheduling problems , It captures common settings in the service environment , One of them must serve a priori uncertain property ( for example , Processing time and priority ) Job set for , And the service provider must decide how to dynamically In the test ( The diagnosis ) Allocate resources between jobs ( for example , personnel 、 Equipment and time ), To learn more about their respective uncertain properties and processing work . The former can provide information for future decisions , But it may delay the service time of other work , The latter directly promotes the handling of the work , But decisions need to be made under uncertainty . Through novel analysis , We obtained a surprising result of optimal policy structure , These results provide operational management insight 、 Efficient optimal and near optimal algorithms , And the quantification of test value . We believe that , Our approach will lead to further research , To explore this important practical trade-off .

1 introduction

The effective management of many service systems usually depends on the customers 、 The ability to properly classify and prioritize tasks or work . However , in many instances , The exact nature of the work is uncertain . for example , The amount of time and resources required to process a given job and its relative priority may not be known accurately . Although recent advances in information technology have enabled more accurate predictions about every job , But there are still many cases , Gathering more information about work requires allocating the same resources for processing work . This leads to an operational tradeoff between exploration and utilization , In particular, how to work in diagnostics called testing ( To gather more information about the work that arrived ) And processing work called work ( Only serve the work in the system ( Customer ) .

In this paper , We introduced a new scheduling model that captures these tradeoffs , It also provides some structural results and insights about the optimal strategy , And how to obtain the optimal and provably near optimal strategies . It's amazing , In many interesting cases , The optimal strategy can be achieved by shortsightedness ( Local ) Rules to describe .

A relevant example of the trade-off types studied in this paper appears in aircraft maintenance . Engine maintenance requires disassembly and reassembly of the engine , This is expensive in terms of time . perhaps , Special tests can be used to diagnose engine equipment , It can reveal the nature of the fault , And the required corrective measures and processing time . under these circumstances , The shared resource between work and testing is the maintainer .

Another example is in the emergency department . under these circumstances , Patients undergo triage , To gather information about its urgency ( Sensitivity to waiting ) And information about the required activities and processing time . This information allows patients to be prioritized , To ensure effective allocation of limited medical resources . Although these examples come from very different practices , But they all produce similar tradeoffs , In particular, how resources should be allocated between diagnostics and actual work processing .

This paper focuses on one of the core problems in scheduling theory , That is, a single server and the goal of minimizing the weighted sum of completion times for a given job set . This objective reflects the minimization of the weighted total ( Or average ) The goal of waiting time , This is realistic in the above practical setup and many other practical setups , There is a trade-off between testing and work . The processing time and weight of the job are unknown , But if the job is tested , Can reveal , This is an activity that requires specifying the server time . therefore , At any stage, you have to decide whether to test another job or deal with a job ( Or it has been tested , Or it still has uncertain processing time and weight ). Once the job is processed , It must be done ( namely , Preemption is not allowed ). We noticed that , If there is no test option , Known problems can be best solved by processing jobs in an increasing order of expected weighted processing time ; This is called the weighted minimum processing time rule (WSPT)

1.1 contribution

This paper has made several important contributions .

First , It introduces a new scheduling model , It can capture the trade-offs between exploration and utilization in a service environment . Although it is generally believed that , Understanding and controlling variability is critical to sustaining uninterrupted operations , But as far as we know , This is the first study on the extent to which resources should be used to collect information and reduce uncertainty . secondly , Although the natural expression of the problem leads to high-dimensional dynamic programs (DP), But this article provides a structural analysis , The characteristics of the optimal policy are obtained , This is intuitive in management . say concretely , We clearly identify ( And calculate ) Two thresholds , Divide the test assignments into three groups . The first group should be dealt with immediately , Do not delay . The second group shall finish processing after all other operations .

Last , Unknown jobs can only be potentially tested before processing a third set of known jobs .

We also show that , The optimal policy has the structure of the optimal stopping time problem ; It tests the jobs and immediately processes the jobs in the first group , Until at some point it switches to using WPST Rule handles all remaining jobs , And no more testing . Third , Based on the structural characteristics of the optimal strategy and the innovative marginal cost accounting scheme , We propose a low dimension with five dimensions DP Formula to describe each system state ( And can use Standard method ). It is different from the traditional cost accounting scheme , The contribution of one work to the overall objective function is considered when the processing of the work is completed , In marginal cost accounting , Its contribution to the completion time of other work is calculated after completion . Determine the relative scheduling order between jobs . Besides , The structural characteristics of the optimal policy lead to low dimension DP The formula can use the complete polynomial approximation scheme (FPTAS) Solve any given accuracy in an almost optimal way . Fourth , Under certain conditions ( Including special cases of work with equal weight ), The optimal policy is proved to be a short-sighted rule , Can only be based on the current state .

The fifth , The analysis provides insights into the value of testing as a function of the various parameters of the problem , The performance of the simpler strategy is analyzed and evaluated . This kind of analysis can be better used to understand and evaluate when the test function is really worth . Last , Analysis extends to a wider set of settings , The test may reveal only part of the information about the potential attributes .

1.2 Literature review

For more than half a century , The research community has developed rich and extensive literature in the field of scheduling . For all that , Although a wide range of issues have been explored , But the subject of the test itself doesn't seem to be getting much attention . Typical characteristics of scheduling problems involve such as single job attributes ( for example , The processing time 、 Due date 、 Release date and preemption )、 Dependencies between jobs ( for example , Precedence constraint 、 Homework series 、 Setup time )、 Server properties ( for example , Multiple servers 、 speed control 、 Batch and fault )、 Server job settings ( for example , Flow shop 、 Workshop and open shop issues ), Under various objectives ( for example , Manufacturing time 、 Running water time 、 Delay and late ). The literature review of this paper does not attempt to make a comprehensive investigation of this huge knowledge system , Instead, it introduces the main research fields in the scheduling literature , And how they relate to our work . A comprehensive treatment of the subject , Readers may refer to Pinedo (2012) and Leung (2004).

The main method to classify scheduling work is based on the amount of information known by the scheduler . The main category is deterministic 、 Random and online . Third, the availability of information decreased . In deterministic problems , All information is known in advance , This means that decisions can be made in advance , And can predict the overall performance . Random scheduling assumes the probability characteristics of uncertain data . The most extreme is online scheduling , It does not assume knowledge about processing or system arrival time , And the information gradually shows . The models studied in this paper share the properties of stochastic and online scheduling problems . One side , Probability distributions are used to model uncertainty , But on the other hand , Allow testing as a means of understanding these uncertainties online .

Two areas closely related to our model are medical classification and maintenance . In medical triage , A series of papers in the past decade have challenged the current triage practice ( for example ,Sacco wait forsomeone 2005、Lerner wait forsomeone 2008 and Jenkins wait forsomeone 2008).

One of the main criticisms is that the availability of resources is not taken into account when deciding on patient priorities . meanwhile , New work on models and heuristics provides insights and alternatives for creating better classification processes ( for example ,Sacco wait forsomeone .

2005 year , Li He Glazebrook 2010 year , Jacobson et al . 2012 year , And mills et al . 2013). However , Usually the basic premise of this kind of work is that information about the patient can be obtained ( Whether partial or complete ), And the goal is to optimize the resource allocation under the given information . In our work , In addition to determining the allocation of resources to serve patients , We also consider the process of determining each patient's condition , It also consumes resources ( But in quite different environments ). stay Alizamir wait forsomeone . (2013), A model is studied , The diagnostic accuracy can be controlled ( for example , By investing more time in diagnosis ), And make a trade-off between diagnostic accuracy and patient delay . The latter is related to diagnostic accuracy , Our focus is on determining the order in which we should serve our patients , This is an important lever to improve various performance indicators in many service systems

In the literature on maintenance , In a class of models called preparation models (McCall 1965) in , A significant effort has been made to study maintenance problems through inspection . In these models , The machine will degenerate over time in a hidden process , Unless checked , So as to reveal the real state of the machine . Most of the literature on inspection models focuses on single component systems in infinite field setup , If there is no inspection , Faults are invisible . Besides , Suppose the inspection is expensive , Its duration is negligible , The goal is to minimize costs . in other words , The focus is on cost rather than limited capacity allocation . In a multicomponent system , The main work is to reduce maintenance costs by repairing multiple components at the same time by taking advantage of economies of scale . Besides , A model is also created for the system , In these systems , There is a correlation between the evolution of components , Or jointly maintain components due to structural dependencies . As far as we know , These works do not study how to use checking to dynamically inform scheduling decisions . A survey of the maintenance model , Readers can refer to McCall (1965)、Pierskalla and Voelker (1976)、Sherif and Smith (1981)、Sherif (1982)、Yamayee (1982)、Valdez-Flores and Feldman (1989)、Cho and Parlar (1991), Dekker wait forsomeone . (1997), Guo et al .

(2000)、 king (2002)、Frangopol wait forsomeone . (2004 year ) Yihe et al . (2006)、Nicolai and Dekker (2008) as well as van Noortwijk (2009).

We also note some results of studying the value of information in single server queuing and scheduling settings . Bansal (2005) Studied a M/M/1 queue , The duration of work is known at the time of arrival . He quantified the improvement of a policy , This policy processes jobs in an increasing order of remaining processing time , Instead of the standard first come, first served policy Wierman and Nuyens (2008) In this paper, we study a class of strategies to generalize the shortest processing time rule .

When it is necessary to group jobs with different processing times and assign the same priority rule ( This is similar to not having exact information about the processing time ), These are used in practice . They derive the bounds of multiple performance measures , And study how boundaries are affected by information accuracy .

The trade-off between exploration and development has been studied in the context of several operational issues in revenue management and supply chain management ( for example , See Besbes and Zeevi 2009 as well as Besbes and Muharremoglu 2013). However , The typical assumption in this workflow is that the underlying distribution is unknown and learned from the data .

contrary , In our setup , Suppose the distribution is known , However, specific instances of these distributions can be observed through testing .

The novelty of our work lies in the incorporation of learning decisions into job scheduling problems . Traditionally , The scheduling problem focuses on determining the best sequence of job processing in a deterministic environment , Or affected by the uncertainty represented by the probability distribution . However , As far as we know , The testing problem has not been studied in the published literature (Sun et al. 2017 Recent work has studied a very special case of this model ).

The rest of the work is arranged as follows . In the 2 In the festival , We describe the model 、 The new cost accounting scheme and the resulting DP The formula . The first 3 This section contains the analysis of the model and the characterization of the optimal strategy , And then we're in the second 4 This characterization is used in section to develop an algorithm that solves the problem approximately optimally . In the 5 In the festival , We study a short-sighted strategy and prove its optimality under some assumptions . In the 6 In the festival , We discussed the value of testing , In the 7 In the festival , We extend the model to a wider set of settings , It shows that the results of the basic model are still valid . We are the first 8 Section summarizes and discusses future research directions . Please note that , Due to space limitation , Some proofs have been omitted , But these appear in the online appendix .

2 The mathematical formula

Consider what needs to be handled by a single non preemptive server N0 Homework . Every assignment i With a given processing time ti And a weight indicating the relative importance of the job wi Related to . Homework i Duration of ti And weight wi Is based on a known joint distribution D A priori random variable of distribution (T, W), The degree of support is [1, D] × [1, V], And it is independent and identically distributed among jobs .

When the server is idle , The scheduler can do one of the following . It can handle a job , under these circumstances , The processing time of the job is realized T And weight W. however , The operation must be handled without preemption . perhaps , The server can be used for testing needs to specify the processing time ta The homework , And reveal the processing time and weight required for a specific job . After testing , The job can be paused and processed later . therefore , As long as the server is idle , You can make three decisions : Handle “ It is known that ” One of the assignments ( That is, processing the tested jobs ), Handle “ Unknown ” Homework ( Processing untested jobs ), Or test one “ Unknown ” The job of .

Please note that , Both known and unknown are related to unprocessed jobs .

The state of the system can be expressed as a vector (N,[t1 , w1 , . . . , tn , wn ]), among N and n Indicates the number of unknown and known jobs, respectively , and t1 , w1 , . . . , tn , wn Express n Implementation of processing time and weight of each job in a given job .

When there are no known jobs , The state of the system is (N,[ ]). No loss of generality , We always assume that the ratio ti/wi stay i Without subtracting . We use... Separately ρƐ[T]/Ɛ[W] and ρi ti/wi Represents an unknown job and a given test job i Processing time and weight ( Importance ) Than . Action space can be set {test, processu, processi} To describe .

Control means testing unknown jobs 、 Processing unknown jobs and processing known jobs i.

The goal is to find an adaptive scheduling strategy , To minimize the expected weighted sum of completion times . This will be called S&T Model ( Scheduling with tests ).

Asking questions DP Before the formula , We are the first 2.1 This section shows WSPT A variation of the rule extends to our problem . This allows you to 2.2 The marginal cost accounting scheme is introduced in section , Then it is used to obtain the DP The formula ( The first 2.3 section ).

2.1 WSPT The rules

as everyone knows , The deterministic variant of the problem studied in this paper can be solved by the strategy of processing jobs in proportional non decreasing order ( Also known as WSPT Rules or Smith The rules ; See Smith 1956) Get the best solution . The difference between this rule ( dynamic ) The view is the best policy to always choose to process jobs at the lowest rate .

In this section , Indicates that a weaker version of this attribute applies to S&T Model ( Later on, we'll talk about 3 Section ). say concretely , We show that , When dealing with , When the ratio of known jobs is less than that of unknown jobs , It is best to deal with it .

Please note that , Although simple exchange parameters are often used to prove this property , But in S&T In the model , The test operation can occur between the processing of any two jobs . under these circumstances , Swapping two jobs to form a decreasing ratio sequence may no longer guarantee an improved scheduling strategy . Besides , Pass the test , We observed the true proportion of assignments , This may also affect the optimal scheduling sequence .

In lemma 1 in , We show that given two consecutive jobs , Their ratio must be non decreasing . then , We're lemma 2 A stronger attribute is proved in , That is, the given two jobs have not been tested , It is suboptimal to process jobs with a higher ratio before processing jobs with a lower ratio ( Even if these jobs are not processed continuously ).

lemma 1. It is suboptimal to process jobs continuously at a decreasing rate .

prove . Please refer to page... In the online appendix A.1 section .

lemma 2. Handle work with a rate higher than the known work rate ( Known or unknown ) It's second best .

prove . Please refer to page... In the online appendix A.2 section .

Please note that , lemma 2 Significantly reduced movement space . In any state , We just need to choose between testing or processing unknown jobs and processing known jobs at a minimum rate .

2.2 Marginal cost accounting

Marginal cost accounting is related to the concept of linear ordering ( See Queyranne and Schulz 1994 And the references ). For untested problems , Use the processing order of any pair of jobs to describe the strategy through linear sorting . then , The target value can be written as Pn i1 tiwi + P i,j 1i<j tiwj , among 1i<j It's homework j Previous processing jobs i The indicator function of the event . We see every assignment i Contribute its processing time to itself (wi ti ), And each job processed later j (tiwj ).

When the job can be tested , Further delays caused by testing must also be considered . For homework i, The delay due to testing is job i Number of previous test jobs ta times . In hindsight , We can write the target value as :

(1)

lemma 2 This means that when jobs are tested and their respective values ti 、 wi Become known , The best processing sequence is partially determined . therefore , Some future costs can be calculated during testing . More generally , In marginal cost accounting , We charge all future costs known as a result of current actions .

say concretely , When in a state (N,[t1 ,w1 ,…,tn ,wn ]) Test unknown job l<{1…n} And implement values (tl ,wl ) when , It causes delay Pass the test ,ta (Σ n i1wi+ (N −1)Ɛ[W]+wl ), And the ordering cost relative to other known jobs ,tlwl +Σ n i1 (1ρl<ρi tlwi +1ρl>ρi tiwl ) It can be Electrification . Besides , If an unknown job is processed , The ordering cost of all other activities can be charged Ɛ[TW +Σ n i1 Twi +(N -1)TƐ[W]].

This includes “ Self imposed costs ”TW、 Costs associated with known work Σ n i1 Twi And with others N -1 Unknown job related costs , It is expected that (N−1)TƐ[W]( We use Independence between jobs ).

Last , Processing known jobs 1 when , Additional costs are activities 1 Relative to the ordering cost of an unknown job :N t1Ɛ[W]. Please note that , The previous operation has considered other ordering costs .

2.3 DP The formula

In this section , We describe the DP The formula . DP The state of is determined by the vector defined before (N,[t1 , w1 , . . . ,tn , wn ]) Express . According to lemma 2, We can limit the control space to {test, processu, process1}. The conversion is simple , Processing unknown jobs will make N reduce 1; Processing jobs 1 Do your homework 1 Remove from state ; Test an unknown job , take N reduce 1, And through processing time and weight (T,W) The implementation of adds a known job to the state . This happens by distribution D Defined probability . Use the marginal cost account scheme ( The first 2.2 section ), We define the Behrman equation as follows :

(2)

because T and W Is random , So the transition to the next system state is random , therefore , Capture costs by expecting all possible states .

The total number of observed states is O( N0+DV+1 N0 ), Therefore, the running time is O(DV N0+DV+1 N0 ), Therefore, it is difficult to calculate .

Although the marginal cost accounting method seems not as simple as the traditional method of adding completion time after processing the work , But the marginal cost accounting method has the advantage of calculating the cost as soon as possible . Intuitively speaking , This means that we need to be in DP Less information is encoded in the state , This will enable us to develop more compact DP. More specifically , Using the marginal cost accounting method and several structural attributes of the optimal strategy ( The first 3 section ), We express the problem as a low dimensional problem DP( The first 4 section ), It is shown that five statistics of unknown and known work are sufficient Consider all future costs ( With the above N dimension DP The formula is the opposite

3 3. The optimal Strategy The nature of

cy In this section , Use the 2.3 Described in section DP Formula to characterize the structural characteristics of the optimal strategy . These are then used to design low dimensional DP The formula .

We first introduce a new quantity ρa , It is associated with ρ Ɛ[T]/Ɛ[W] Together , Will be the key to characterizing the optimal strategy .

Definition 1. The test is more than ρa Defined as the unique solution of the equation

(3)

3) lemma 3 indicate ρa Is clearly defined .

lemma 3. (1) function f (x) ta − Ɛ[(xW − T) + ] stay x China is not increasing . Besides ,f (x) stay x ≥ inf{d/v: Prob(T d, W v) > 0} Strictly decreasing , Its value is ta > 0.

(2) ta −Ɛ[(xW −T) + ] 0 The solution is the only one .

(3) If x < ρa be ta − Ɛ[(xW − T) + ] > 0; If x > ρa be ta −Ɛ[(xW −T) + ] < 0.

(4) ρa < ρ ⇔ ta < Ɛ[(ρW -T) + ].

prove . The proof is straightforward , Omit... For brevity . Q.E.D.

The amount ρa It has the intuitive meaning of minimum working ratio , So test earlier and test later . As we will see ,ρa It is the trigger point for testing unknown work ; say concretely , We will prove , Work in a known i (ρa < ρi) Or known work (ρa > ρi) Testing unknown jobs before is never optimal .

Similarly ,ρ Will act as a trigger point for handling unknown work , We will prove that in ρ < ρi Known work of i After or ρ > ρi Known work of i It is never optimal to deal with the unknown before .

Use ρ and ρa We can divide the known work into three groups : (i) Work at a low rate (ρi < min(ρ, ρa )); (ii) Work at a moderate rate (min(ρ, ρa ) < ρi < max(ρ, ρa )); (iii) High rate of work (ρi > max(ρ, ρa )). For the sake of illustration , We assume that ρa , ρ ( No loss of generality ), And for each i, We have ρi , ρa and ρi , ρ. ( There is a slight general loss , Can be easily solved , But it will hinder readability .) For the State (N,[t1,w1,…,tn,wn]), Means low / in / A collection of high proportion jobs Press separately SLow/S Med/S High. Observe that these sets are state dependent

figure 1

chart 1 Describes this job classification , hypothesis ρa < ρ. Jobs are sorted on the displayed axis according to their ratios . Unknown work is represented by circles , Known to work with “x” Express . In this example , There are four unknown positions 、 Two low ratio positions 、 Three medium ratio positions and two high ratio positions .

The next lemma shows , Low rate jobs have the highest priority , And it is processed immediately after the test , No further delay .

lemma 4. For any state (N,[t1 , w1 , … , tn , wn ]), Among them, the operation is 1 The ratio of , Processing jobs 1 Is the only optimal control .

prove . Please refer to page... In the online appendix A.3 section .

lemma 4 This means that low rate operations should be handled immediately after testing . for example , In the figure 1 In the state shown , Process the two shortest jobs and convert to figure 2 The state shown is optimal . No loss of generality , We can always assume that ρa < ρ1 or ρ < ρ1 . in other words , Under the optimal strategy , There will never be a low rate of work .

Now let's consider two cases respectively :(1) ρa < ρ and (2) ρa > ρ. Because of the parameters ρa Monotonically increase with test time ( Definition 1), We use short test time and long test time to express these two situations . In the first case ( The first 3.1 section ) in , We show that any test must be preceded by a medium ratio 、 Any processing of high rates and unknown jobs . therefore , All tests should be graphs 2.( Online color ) Process low rate jobs immediately after detection Treatment time to weight ratio Low ratio High ratio Medium ratio a 1 2 3 4 5 Known jobs Unknown jobs completed immediately after processing Low rate of homework , Once we stop testing , All remaining jobs are processed in non decreasing order of their ratios , Basically follow WSPT The rules . For the second case ( The first 3.2 section ), We show that unknown work should never be tested . therefore , The problem is simplified to the traditional problem of minimizing the weighted sum of completion times ( There is no need to test ).

3.1 Short test time

Use ρa < ρ Assumptions , We first prove the local optimal conditions related to testing immediately after processing . Although partial , But this result, together with the previous lemmas and properties, imposes a lot of structure on the optimal policy .

lemma 5. If ρa < ρ, When the processing ratio is higher than ρa It is suboptimal to test immediately after the job .

prove . Please refer to page... In the online appendix A.4 section .

As lemma 5 An example of , Please consider the diagram 2 Status shown in . lemma 5 Pointed out that , The test job is not optimal after processing any subset of the remaining known and unknown jobs . We come to a conclusion , When ρa < ρ when , The optimal policy always handles all low rate jobs before testing , And it usually runs in two stages . In the first phase , Only when the ratio is low , Will immediately test and process unknown jobs . In the second phase , All jobs in the system are processed in non decreasing order of their ratios . This means that the problem can be considered a stop problem , The decision to continue corresponds to testing an unknown job ( If the ratio of corresponding jobs is low, process ), Stop corresponds to processing all remaining jobs .

figure 2

chart 3 The optimal strategy is illustrated by a series of actions , These actions lead to A-D The transition between the four states represented by . In the figure 3(a) in , We see the State A—— The current state of the system . then , The optimal strategy needs to be decided in two actions : Either you stop ( according to WSPT Rules handle all jobs ), Or test unknown jobs . Suppose the test is optimal . under these circumstances , We test an unknown job and transition to state B( chart 3(b)). Please note that , Now we have a little-known job , And test the work ( The number is 1) Have a medium ratio . Once again, , We need to make a decision between stopping and testing . Suppose the retest is optimal . We test unknown jobs and transition to state C( chart 3). In state C in , There are only two unknown jobs and one extra low rate job left ( The number is 2). Process low rate jobs immediately , This leads us into a state D( chart 3(d)). Once again, , We need to choose between stopping and testing . Suppose stopping is the best , We process all jobs according to their ratios , That is, according to their position on the axis from left to right .

These results are summarized in the following theorem .

Theorem 1. about ρa < ρ, The dynamics of the optimal strategy are as follows :

(1) Deal with ratios lower than in non decreasing order ρa All work of .

(2) Either all remaining jobs are processed in a non decreasing order of ratios , Either test a job and return (1).

( When we process all jobs in a non decreasing order of ratios ( In the case 2 in ), We use ρ As the ratio of all unknown jobs , So you can deal with them in turn .) prove . Proof follows lemma 4 and 5.Q.E.D.

Interestingly , The form of the best solution is very similar to the current practice of the emergency department . The top priority is emergency patients ( High weight ) And cases that can be solved quickly ( Short processing time ). Other cases are classified ( test ) And put on hold . This may indicate that classification models should be considered in other industries ( It may be adjusted for specific areas ).

lemma 4 and 5 Another explanation for this is the exchange property of the test . It is similar to exchanging parameters to reorder jobs in the problem without testing , Two lemmas reorder tests , So that it can be executed after processing the low rate job and before processing any other jobs .

Please note that , Some questions remain unanswered .

The main thing is that we should be at all lowratio Test or process all jobs after all jobs are processed ( That is, when to stop )

3.2 Long test time

When ρ < ρa when , We show that tests are always suboptimal , This means that the problem is reduced to the traditional problem without testing .

Theorem 2. If ρ < ρa , So for each state (N,[t1 , w1 , . . . , tn , wn ]), The optimal policy handles all jobs in a non decreasing order ( That is, testing is never optimal ) .

prove . prove . Please refer to page... In the online appendix A.5 section .

Although a basic assumption of the model is that there are N0 A job , But even when the initial state contains known work , Theorem 2( And all other lemmas and theorems ) Still established .

Please note that , When ρ < ρa when , The optimal strategy for processing all jobs in non decreasing order of ratios is ρa < ρ A special case of optimal policy , In this case, the test should not be performed .

4 Solution and algorithm

In this section , We have developed an effective algorithm solution for this problem . In the 4.1 In the festival , We use the 3 The properties of the optimal policy are proved in section to obtain low dimensional DP The formula . stay 4.2 In the festival , We analyze the new formula , stay 4.3 In the festival , We use it to develop an approximation .

4.1. Low dimension DP The formula We start with an arbitrary state (N,[t1 , w1 , . . . , tn , wn ]) Define several statistics : • ωM , P i∈S Med w Work );

• ωH , P i∈S high wi( The total weight of high rate work ); • τM , P i∈S Med ti( Total processing time for medium rate jobs ); • ωτ , P i∈S Med∪S High Ɛ[min(Twi , tiW)]( Expected ordering costs for test and known jobs ).

Based on the theorem 1 and S&T Explanation of the stop time of the problem , We have developed improved DP.

Definition 2. Low dimension DP The definition is as follows :

(4)

(5)

(6)

In these expressions ,d and v It is the implementation of processing time and weight of unknown jobs .

LD DP There are only two controls in :“testone” and “process-all”. Test one is to test an unknown job , If its ratio is low , Then handle the corresponding work . Process-all This means that all jobs are processed in a proportional, non decreasing order . Observe process-all There is a closed form , also test-one It's defined recursively .

Next , We will prove that 2.3 Chaste DP Formula and LD DP There is equivalence between .

Theorem 3. For each state (N,[t1 , w1 , … , tn , wn ]), The following is established : Jmrg(N,[t1 , w1 , … , tn , wn ]) JLD(N, ωM , ωH , τM , ωτ).

prove . We prove lemma in three steps . First , We will be the first to 3 The structural results of section are incorporated into section 2.3 Chaste DP In the formula , To obtain a formula with reduced control and state space . secondly , We show that the new formula is consistent with the goal ( Expected weighted sum of completion times ). Last , We substitute the term to obtain the equivalent LD DP The formula .

4.1.1 An improved DP formula . In the 3 In the festival , We prove two important properties of the optimal policy :(1) Deal with low rates of work immediately ; (2) This problem can be regarded as a stop time problem , There are only two controls :test-one and process-all. Use these two properties , We have reduced the control space ( There are only two controls ) And state space ( There are no low rate jobs ). We use the principle of marginal cost accounting method and the structure of optimal strategy to define a cost function , This function first considers test delay and ordering cost when determining any order between two jobs .

We analyze the cost under the two controls from the beginning of the test . chart 4 The sources of different costs caused by control test I are explained . chart 4(a) Describes the initial system state before performing test one operation . There are two unknown jobs , Two medium rate jobs and two high rate jobs .

There are six types of costs : 1. The self imposed costs of testing work :Ɛ[TW]( chart 4(b)). The duration of the test work now implemented chart 4.( Online color ) LD DP: Control test one (a) Medium ratio unknown ?? ?? ?? ??

0 High ratio (b) Medium ratio High ratio Medium ratio High ratio (d) Medium ratio High ratio a (e) Medium ratio ?

0 High ratio (f) Medium ratio ?

High ratio (g) Medium ratio ?

High ratio a ? Unknown work ; Work better than known ; ratio i Testing work ; ratio d/v It is a part of the same work completion time , It contributes cost to the goal Ɛ[TW].

2. Test delay :( P i∈S Med∪S High wi + NƐ[W])ta( chart 4). Every job in the system is delayed ta Duration of .

3. The cost of known work and testing work :P i∈S Med∪S High Ɛ[min(Twi , tiW)]( chart 4(d)).

Once the test job is implemented ( The duration of the T), Its relative order with respect to known jobs will be determined , Because these assignments are always based on WSPT Rules to deal with . therefore , We can calculate these costs immediately . If the ratio of the tested jobs is less than the ratio of the known jobs (T/W < ti/wi ), The tested jobs are processed first , under these circumstances , Goal increase Twi ; otherwise , Process known jobs first , This will tiW Add to target . This is equivalent to adding the smaller of these two items :min(Twi , tiW).

4. Tested work ( Implemented as a (d,v)) And other unknown work when the work under test has a low ratio (d/v < ρa, chart 4(e)) Costs incurred :d(N -1) Ɛ[W]. Process low rate jobs immediately , And add its duration to the completion time of each unknown job , take (d(N - 1)Ɛ[W]) Add the sum of to the target .

The observed , Once we test , You will find a little-known job , This explains why this expression contains N -1.

5. The future cost of testing with low rates :Jmrg(N − 1,[t1 , w1 , . . . , tn , wn ]) ( chart 4(e)).

The job tested has been processed , Therefore, it is not included in the future state .

6. Testing has a medium or high rate of future costs :Jmrg(N − 1,[t1 , w1 , . . . , tn , wn ] ∪ {d, v}) ( chart 4(e) and 4 (F)). The job tested was not processed , So included in the future state .

figure 4

figure 5

When you choose to process all work , According to its ratio ρ Dealing with unknown work . By that time , The whole processing sequence is determined , We can consider the ordering cost between unknown jobs and other known jobs ( The relative order of known jobs is known before deciding to process all jobs , therefore The respective costs of these work pairs have been included ).

chart 5 Describes the relative order of the different types being determined when we decide to process all . New information about job sequencing generates three types of costs : 1. Sequencing costs between unknown work pairs :N 2 Ɛ[T]Ɛ[W] + NƐ[TW]( chart 5(a)). Yes N 2 For unknown jobs , In each pair , The expected duration is Ɛ[T] Of jobs are delayed with an expected weight of Ɛ[W] The homework . Besides ,N Each of the unknown jobs will delay itself according to its duration , This results in total additional costs NƐ[TW].

2. Ordering cost between medium rate work and unknown work pairs :Ɛ[W]N( P i∈S Med ti )( chart 5(b)). Unknown jobs are processed after medium ratio jobs , It means N Each of the unknown jobs is delayed by the total duration of the medium rate jobs .

3. Ordering costs between high rate work and unknown work pairs :NƐ[T](P i∈S High wi )( chart 5).

High rate jobs are processed after unknown jobs , therefore , Each high rate job will delay the total duration of the unknown job :(NƐ[T]). The total contribution of these to the goal is the total expected duration of the unknown work multiplied by the total weight of the high rate work .

We can now modify the DP Write the complete Behrman equation

()

4.1.2. Consistency with target value . We now show that , For any strategy , The modified DP The formula returns the expected weighted sum of the completion time .

We think of the equation (1) The three types of costs are calculated in a similar way :

1. Self inflicted costs . Under any policy , The duration of any work is part of its completion time . therefore , Whatever the policy ,NƐ[TW] The word should be part of the final cost . According to the modified formula , It takes this cost into account when testing or when we deal with all the activities , under these circumstances , We add terms for each unknown job Ɛ[TW]. Ɛ[TW] Item just adds N Time .

2. Test delay . View test delay costs ( equation (1)) A natural way to , For any job , We all need to multiply the weight of the work by the total test delay .

Another view is , One unknown at a time j After testing , We added the total weight of unprocessed jobs . The latter is the accurate cost accounting done by the modified formula . Every time we test ( And only then ) We add the product of test time to the total weight of jobs in the system .

3. Order cost . In the revised DP In the formula , We consider ordering costs as early as possible when determining the sequence between a pair of activities . This ensures that the order cost is calculated only once . To see that , Please consider any unknown work i. In limine , All work is unknown , Therefore, the ordering cost is not considered . If we test an assignment j And its ratio is very low , So homework j Will be dealt with immediately , We will consider (i, j) Yes . Work j Not part of the state , We will not consider this pair of . If homework j The ratio is not low , Then we (1) Test assignments j Will consider (i, j) Cost of ordering , In this case we immediately consider it , And we will ignore this pair in the future ( Whether we test one or all of the processes ); (2) Process all jobs , under these circumstances , We only think about (i, j) once . This is true for any unknown job , So the same is true for the entire ordering cost .

4.1.3. Variable substitution . The modified DP The cost function of can be expressed by the following quantities : Unknown number of jobs (N)、 The total weight of a medium rate job (ωM P i∈S Med wi )、 The total weight of high rate work Ratio work (ωH P i∈S High wi ), The sum of processing time for medium rate jobs (τM P i∈S Med ti ), Known working implemented functions (ωτ P i∈S Med∪S High Ɛ[min( Twi , tiW)]) And some other constants ( for example ,Ɛ[TW]). By replacing these quantities , We have established two DP Equivalence between recipes . That is ,

()qed

Please note that , The dimension of the state space is now 5( With the first 2.3 Section DP The formula is compared with , The dimension is as high as N0).

It is observed that although this significantly reduces the complexity of the problem , But the number of states in the low dimensional formula may still be very large , Because it contains pseudo polynomial terms :0 ≤ N ≤ N0 , 0 ≤ ωM ≤ N0V, 0 ≤ ωH ≤ N0V,0≤τM≤N0D,0≤ωτ≤N0DV. For this reason , We developed a FPTAS, It provides an approximation for the optimal solution and guarantees the polynomial run time ( There is a certain dependence on the approximation ).

4.2. Optimal threshold policy

LD Formulas have low dimensions , It also has several advantageous features . Its parameters are monotonic , But more importantly , Its optimal solution is a threshold structure . in other words , about N、ωM、ωH and τM Each value of , There is always a ωτ threshold , This threshold determines whether the best action is to test or process all jobs .

lemma 6. Value function JLD stay τM and ωτ Is not reduced .

prove . Please refer to page... In the online appendix A.10 section .

lemma 7. about N、ωM、ωH and τM Each value of , There is a threshold ωτ, Make the test the optimal control , If and only if ,ωτ ≤ ωτ.

prove . Please refer to page... In the online appendix A.11 section .

lemma 7 It means that when the distribution D Support for is discrete , There is an efficient way to represent the optimal policy . N、ωM、ωH and τM The number of different values that can be taken is N0、D and V Polynomials in , And for the (N, ωM, ωH, τM) Each value of , Just one ωτ The value is sufficient to describe The best strategy .

however , To find the actual values of these thresholds , We need to solve DP. Because the state space grows exponentially , This does not apply to standards DP Method to complete . In the next section , We developed an approximation scheme to solve the low dimensional problem DP The formula .

4.3. FPTAS

In the 4.1 Chaste LD Based on the formula , We use the rounding technique (Williamson and Shmoys 2011) To formulate approximate dynamic programs (ADP), Then use it as FTPAS The basis of .

For easy reading , Here we introduce FPTAS Definition and approximate scheme of ( Algorithm 1), The discussion on algorithm construction and runtime analysis is postponed to the online appendix A.12 section .

Definition 3. FPTAS It's a series of algorithms {A }, Each of them > 0 There is an algorithm , bring {A } It's a (1+ ) The approximate algorithm ( Used to minimize problems ) And run {A } The time is in the order of 1/ The polynomials in are bounded .

Algorithm 1( The approximate algorithm )

Theorem 4. Algorithm 1 Is a complete polynomial time approximation scheme , For scheduling with test problems .

5. Short sighted strategy

The optimal and near optimal algorithm solutions proposed in the previous section enable us to solve the problem in polynomial steps . however , To get high-precision solutions for large problem instances ( namely Small value of ), Even polynomial runtime may not be practical . On the other hand , The heuristic method can effectively realize , But there may not always be a good enough performance guarantee . In this section , We study a relatively general assumption ( This includes cases where all work has the same weight ) Under the effective and optimal short-sighted policy . Considering the high dimension in the state space of the initial problem formulation ( Before analyzing and characterizing the optimal strategy ), This seems very surprising .

Before stating assumptions and main theorems , Let's start with the definition .

Definition 4. Process-all (PA) It's a strategy , All of these jobs are processed in non decreasing order of their expected ratios .

Definition 5. Single test strategy (STP) It is a strategy to test a single unknown job before processing all jobs , In a non decreasing order of expected ratios ( Suppose there is at least one unknown job ).

lemma 8. For those who do not work at a low rate and N > 0 Any state of (N,[t1 , w1 , . . . , tn , wn ]), policy PA and STP The difference between the target values is equal to

(7)

prove . Please refer to page... In the online appendix A.13 section .

hypothesis 1. For all jobs with medium ratios i:ti ≤ Ɛ[T], And for all jobs with high rates i:wi ≤ Ɛ[W].

chart 6 It shows that the assumption 1 The distribution of D. Support for these distributions is located in the unshaded area .

Two interesting special cases satisfy the hypothesis 1. In the first special case , All jobs have the same weight , But the processing time may be different . low 、 in 、 High ratio job categories correspond to short processing time 、 in 、 Long homework . Besides , The test threshold corresponds to a certain duration ( Shorter than average processing time ), Therefore, all jobs with processing time shorter than the test threshold should be processed immediately . Before the test phase is completed , All intermediate and long-term work will be suspended .

In the second special case , All jobs have the same processing time , But the weights may be different . In this case , low 、 in 、 A high proportion of job categories correspond to high..., respectively 、 in 、 Low weight work . The test threshold here corresponds to the minimum weight ( Above average weight ), Therefore, all jobs with weights above this threshold should be processed immediately , Without further delay , The weight of other known operations is low or medium Put on hold .

figure 6

For satisfying assumptions 1 Examples of all problems , A simple short-sighted rule governs the decision to stop testing , As shown in the following theorem .

Theorem 5. Assuming 1 Next , For any job that does not have a low ratio and N > 0 The state of (N,[t1 , w1 , . . . , tn , wn ]), Optimal control is to handle all jobs , If and only If , The following conditions hold :

(8)

prove . Please refer to page... In the online appendix A.6 section .

Algorithm 2 Summarize the assumptions 1 The optimal strategy under the condition of . From a technical point of view , Assuming 1 Next , equation (8) There is monotonicity , I.e. at each test , No matter how the test work is implemented , Left - Hand and right-hand side add . This means that once the left side exceeds the right ( From a short-sighted point of view , That means we want to stop ), It will always remain high . under these circumstances , We apply an inductive argument , And prove that any potential optimal strategy tested must be tested only once , This means that the strategy is STP Strategy . This contradicts the optimality of this potential optimal strategy , Because the shortsightedness strategy will test STP Whether the strategy is better than handling all the work .

From an intuitive point of view , The optimal strategy is very sensitive to the implementation in the shadow area . As expected , It may not be worth testing , But if the result of implementation happens to be the result of abnormally high processing time and weight , We may suddenly want to test again , Because compared with the test cost , The cost of a suboptimal program increases . Based on this intuition , We built an example , And show that when assuming 1 When not satisfied , Myopic strategies are not always optimal ( See... In the online appendix A.7 section ).

Last , We note that although the myopic strategy may not be optimal for all instances ( namely , When assuming 1 When not satisfied ), But numerical experiments show that this strategy performs very well in practice . In an extensive computational experiment , This includes resolving more than 50,000 Examples of different problems ( Different test times 、 Combination of processing time and weight distribution ), covers 1010 Multiple different states , in the majority of cases , The myopic strategy is optimal . Besides , In the worst case , The ratio of the expected goal under the short-sighted policy and the optimal policy is 1.1%. ( Full details about the experiment , See page... In the online appendix A.8 section .)

Algorithm 2( Short sighted strategy algorithm )

1: Deal with ratios lower than in non decreasing order ρa All work of .

2: When the following conditions are met

()

3: Test assignments , If the ratio is low , Immediate attention .

4: end , and 5: Process all jobs in a proportional and non decreasing order , among ρ Is the proportion of all unknown jobs , The processing order of any two equal proportion jobs can be arbitrarily selected .

6. The value of testing

In this section , We will discuss how much we can actually benefit from testing . We first analyze some simple heuristic methods , Then examine how different problem parameters affect the value of the test .

6.1. heuristic

We analyzed the performance of three simple policies : • “Process-all”(PA, In the 3.1 Described in the section ); • “ Test first ”(TAF); • “ Test all processes for low ratios ”(TAPL).

As their names suggest , stay “ First test ” in , We first test all the assignments , They are then processed in a non decreasing order of job ratios . stay “test-all process low-ratio” Under the strategy of , We test all unknown jobs , But deal with low rate jobs immediately after all tests are completed , And process other known jobs in a non decreasing order . Please note that , Strategy PA and TAPL Corresponding to the two extremes of the optimal strategy : first , When the stop time is zero , the second , When the stop time is N when . We use it OPT Indicates the optimal policy .

6.1.1. Perspective solutions (CL). We use perspective solutions (CL) As the lower bound of the optimal policy . in other words , When the scheduler knows the processing time and weight ( Or if the test time is zero ), We calculate the target value . Use marginal cost accounting , The target value is

()

Objective value includes self imposed costs (PN i1 TiWi ) And the ordering cost of perfect sorting jobs ( Because all information is known in advance ).

6.1.2. The whole process (PA) policy . Like the solution of clairvoyant , We use marginal cost accounting to calculate policy PA Target value under

()

The self imposed cost of each job is Ɛ[TW], And each pair contributes to the goal Ɛ[T]Ɛ[W] Cost of ( We use independence between jobs ). We get the following about strategy PA The bounds of objective values of :

()

We are right. PA Policy is of particular interest , Because it can be used as a basis for comparison when testing is not possible .

let me put it another way , Comparing the optimal strategy with the whole strategy process can tell us the value of testing .

To better understand the boundaries , Below it we list the unweighted cases (W 1) The value of the distribution sample of . For easy reading , The certificate is included in the online appendix A.14 In the festival

lemma 9. If T ∼ Uni(a, b) and W 1, Then strategy PA Is the optimal policy 3(b + a)/(2(b +2a)) Approximate value .

lemma 10. If T ∼ Exp(λ) and W 1, Then strategy PA Is the optimal policy 2 Approximate value .

lemma 11. If T ∼ a, w.p. p; ∼ b, w.p. 1 - p and W 1 Strategy PA Is the optimal policy (pa + (1 - p)b)/(a + (1 - p) 2 (b - a)) Approximate value .

In the last example , When a 0, p 1/2 when , Strategy PA yes 2 Approximate value , And for a 0, b M, p 1 − 1/M,PA yes M Approximate value . This means policy PA Can do anything bad . When the test time is relatively short , The solution of clairvoyant is close to the optimal strategy , And the boundaries get tighter . In these examples , We see that testing can improve the performance of evenly distributed targets 33%, The target of exponential distribution is improved 50%, And in some cases can do better ( for example M The approximate ).

6.1.3. “ Test all first ”(TAF) policy . When the test time is very short , It makes sense to test all jobs before any processing , In order to perform the processing in the best order . It's easy to see

(9)

This results in an approximate ratio of

()

in fact , With ta Close to zero ,TAF The strategy becomes optimal .

6.1.4. “ Test all processes for low ratios ”(TAPL) policy . Strategy TAPL The intuition behind it is , You can try to improve the policy by processing low rate jobs immediately after detection TAF( To follow lemma 12).

Compared to perspective solutions ,TAPL There are two types of additional costs for a strategy : Because the test is delayed ( Because it tests all jobs ), And because of the suboptimal processing order . use Nl Indicates the number of low rate jobs , Strategy TAPL The total expected cost under can be written as follows :

(10)

For complete derivation , See page... In the online appendix A.15 section . Comparison strategy TAF and TAPL Cost under , We observe strategies TAF Total test time lost under (taN2Ɛ[W]) Higher , But on the other hand , The processing order is optimal . let me put it another way , Strategy TAPL Optimality of transaction processing sequence , In exchange for shorter testing time .

Use the equation (9) and (10), We can come to a conclusion , Under what circumstances is it worth the trade-off .

6.2 Initial quantity of work

We continue to examine how the value of testing is affected by the initial number of unknown jobs . say concretely , We use sufficient conditions on the test optimal state space , To find the lower limit of the optimal number of control tests ( hypothesis ρa < ρ).

The following quantities are important to describe the lower limit of the optimal stop time .

Definition 6. We will stop the factor β Defined as

()

In order to cultivate β Value intuition , Consider testing an unknown job immediately after processing another unknown job . Stop factor β It's early testing ( Use an improved schedule ) Cost savings and additional costs of testing ( We wait for another job while testing ) The ratio between . Intuitively speaking ,β The higher the value , The more convenient it is to test . ( Please note that , As the test time decreases ,β increase , This is consistent with our intuition , That is, for higher β value , Testing is more beneficial .) Also pay attention to , When the ratio is less than 1 when , Testing is not optimal ( because ρa < ρ ). therefore , We can assume that β Greater than 1.

lemma 12. For each state (N, ωM , ωH , τM , ωτ), among β > (NƐ[W]+ ωH)/(N -1), Optimal control is a test .

prove . Please refer to page... In the online appendix A.16 section .

Q.E.D.

Please note that , lemma 12 The left side of the inequality in ( namely β) Is the constant of the problem , The right side dynamically evolves as a function of state . Besides ,β > 1, With N Bigger , The right side tends to 1. therefore , When the number of initial unknown jobs is large , Testing is best for more and more jobs . From a practical point of view , In a crowded system with many unknown jobs , Testing is the most beneficial .

In the next Theorem , We use lemma 12 To get the lower bound of the minimum number of tests .

figure 7

Theorem 6. For the finite and symmetric distribution of working weights , The optimal strategy test is at least Ntests A cycle , among

()

prove . Please refer to page... In the online appendix A.9 section . Q.E.D.

lemma 12 Indicates that the greater the number of unknown jobs , The more likely we are to test , That is, the value of information increases with N0 To increase by .

lemma 12 Another meaning of ( It can be similar to the theorem 6 derived ) yes , When the initial state has N0 Unknown working hours , To find the optimal strategy , We need to solve only N β/(β -1) A working DP, in other words , We just need to solve a constant size DP. Although the number may still be high ( Especially when the test time is very short ), But it does not depend on N0 , This means that all N0 The strategy of processing jobs with low ratios is asymptotically optimal ( To see this , We note briefly JLD(N0 , 0, 0, 0, 0) Θ(N2 0 ), and JLD(β/(β − 1), ωM , ωH , τM , ωτ)Θ((β/(β −1))2+(β/(β−1))N0 ), This means that immediate testing and processing of low rate jobs may not be optimal , Until the cost increases with N0 Asymptotically decreasing .

6.3. Test time

Conditions of use ρ < ρa , We get the threshold of the above test time , This test is never optimal .

Theorem 7. If the test time is greater than t max a : Ɛ[(ρW -T) + ], The test will never be optimal .

prove . According to the definition , If ta > t max a , be ta > Ɛ[(ρW -T) + ].

therefore ,ρa > ρ( lemma 3), Testing is not optimal ( Theorem 2). Q.E.D.

Theorem 7 Provides an upper limit on the test time that testing is still beneficial . In the 6.2 In the festival , We see that it is actually the smallest possible limit ( When ρa < ρ when , For large enough N0 value , Testing is always optimal ).

6.4. Numerical explanation

To illustrate the above observations , We are in the picture 7 The test values of different problem parameters are plotted in . say concretely , We aim at three probability distributions ( See the picture 8) And three initial working quantity values (N0 6, 7, 8), As a test time ta Function of . We summarize our main findings below .

Test time . For all curves , We see that when the test time is very long , It is optimal to handle all jobs ( That is, the test value is zero , Theorem 7). As the test time decreases , Strategy PA Performance degradation , The optimal strategy is a strategy that TAPL To PA A two-stage strategy for switching between . When ta → 0 when , Strategy PA Worse , The optimal strategy and the strategy TAF and TAPL coincidence .

Variability . The probability distribution chosen for this experiment has the same mean value , But in other ways . In the figure 7 in , We see that the test value of the two-point distribution is the highest , The test value of binomial distribution is the lowest . in other words , In the example above , Variability of test value and processing time . This result is very intuitive , Because we get more information by testing jobs with higher variability in processing time distribution .

Initial number of jobs . We are still trying to 7 see , The value of testing increases with the number of initial jobs . This observation may not seem intuitive at first , Because the cost of testing increases when there are more jobs in the system ( Because the test will delay more jobs ). However , The benefits of testing when there is more work seem to outweigh the additional testing costs .

figure 8

7. Expand

In this section , We study the generalization of the problem , It is also indicated that the 3 Section and section 4 Section describes the analysis and methods to solve it . say concretely , We consider cases where the test does not reveal the exact processing time and weight , It is the category of the corresponding work . for example , In the case of an emergency department , The test may reveal the treatment the patient needs , However, the actual service time and severity may still be uncertain .

We assume that there is C Categories , For categories i ∈ C, Processing time and weight of each job Ti and Wi Is from a known distribution Di Random variable of , With expected processing time T¯ i And expected weights W¯ i . The assignment belongs to the i The probability of a class is expressed in p c i Express ( This means that the expected processing time and weight of the unknown job are Ɛ[T] P p c i T¯ i and Ɛ[W] P p c i W¯ i ) ). Testing a job reveals its categories and requires ta Unit time .

ad locum , We use the unknown 、 Jobs that have not been tested and known jobs that have been tested and known categories represent ( Although the actual processing time and weight may still be random ).

figure 9

chart 9 The difference between the original problem and the generalized problem is explained . In the original question (9(a)) in , Once the test is performed , Know the exact processing time . On the other hand , In a broad sense (9(b)) in , Implement classes only by testing , Only know the exact processing time after processing . Please note that , In generalized problems , The job can be tested at most once , in other words , We can test a job to find its category and the corresponding distribution of processing time and weight Di, But we can't test its exact value further . Attention, please. , If the distribution Di It's degenerative , Then the generalized problem will be simplified to discrete instances of the original problem .

Last , Observe that this generalization can be used to model errors in testing , The test either reveals the real implementation with some probability , Either the test reveals the wrong implementation with complementary probability ( Such as Sun wait forsomeone 2017 Captured by the model of ).

We note briefly , This can be done by defining the work category corresponding to each test result , And specify the probability that the random variable in the distribution is realized as true value and false value .

Although the generalized problem is more complex , But the first 3 The analysis of section is carried out . especially , We can prove that this problem is equivalent to the initial model , Each of these classes C Replaced by a deterministic job , Its processing time and weight are equal to the expected processing time and job class C Expected weight of . Intuitively speaking , This is as follows Linear from expectation operator , And because the objective function ( Weighted sum of completion time ) Relative to a single processing time and weight are actually linear ( Self imposed costs do not depend on strategy , Negligible ). For detailed discussion and proof , See... In the online appendix A.17 section .

We conclude this section by pointing out , Although the basic assumption of the model is that the test time is a constant , But it is easy to show that even if the test time is a random variable Ta You can also get results , This may be related to processing time And the weight of the test work . under these circumstances , Test threshold ρa Use Ta The expectation of ( Instead of using ta ) Similarly define . Need to 2.3 Chaste DP Make minor changes to the formula , To be specific , Expression N taƐ[W] Replace with item (N − 1)Ɛ[Ta ]Ɛ[W] + Ɛ[WTa ]. We also note that , If the test time is part of the processing time , in other words , Testing reduces processing time ta, The structure of the optimal policy is preserved . However , under these circumstances , Test threshold ρa Less than the threshold of the original problem .

8 Conclusion

In this paper , We introduce a new class of models , This model captures the main explorations common in many scheduling problems - Use trade-offs . We analyzed this problem , The intuitive characteristics of the optimal strategy are found . For a large number of cases , The optimal policy is explicitly given in the form of stop rules . For all other cases , A new cost accounting scheme is used to formulate low dimension DP, So as to produce optimal and near optimal algorithms .

We studied the performance of several intuitive strategies and how problem parameters affect the value of testing . Last , It shows that attributes and algorithms are extended to more general models .

The concept of testing in scheduling problems seems to be relevant to many other environments . Some of the directions that seem interesting are more generic scheduling models with jobs and multiple server arrivals , A model that can control the degree of testing , And tests that reveal other information about the job , For example, their expiration date or arrival Time .

边栏推荐

- [in depth understanding of tcapulusdb technology] tcapulusdb adds a new business cluster cluster

- DynamicDatabaseSource,在应用端支持数据库的主从

- 讲真,Kotlin 协程的挂起没那么神秘(原理篇)

- [in depth understanding of tcapulusdb technology] business guide for creating doc acceptance

- 元宇宙中的云计算,提升你的数字体验

- 同花顺开户选哪家券商比较好?手机开户安全么?

- What can the accelerated implementation of digital economy bring to SMEs?

- [proteus simulation] 8x8LED dot matrix digital cyclic display

- Introduction to JWT

- MYSQL 几个常用命令使用

猜你喜欢

web技术分享| 【高德地图】实现自定义的轨迹回放

How to realize @ person function in IM instant messaging

ZABBIX learning notes (37)

![[in depth understanding of tcapulusdb technology] getting started with MySQL driver](/img/7b/8c4f1549054ee8c0184495d9e8e378.png)

[in depth understanding of tcapulusdb technology] getting started with MySQL driver

What can the accelerated implementation of digital economy bring to SMEs?

Cloud computing in the metauniverse to enhance your digital experience

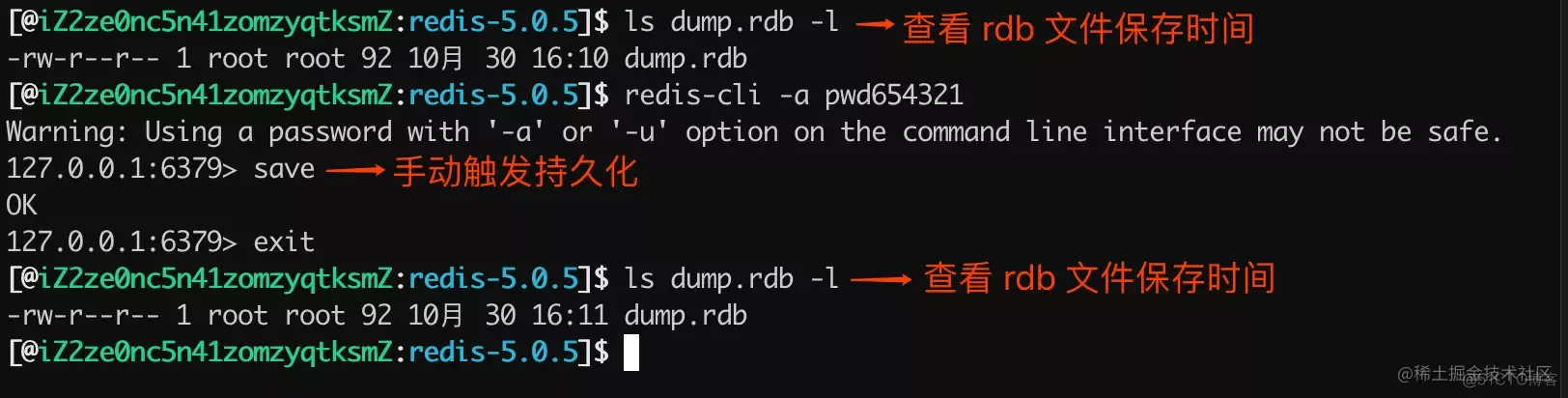

Redis持久化的几种方式——深入解析RDB

防火墙基础之安全策略和NAT(Easy IP)

How should programmers look up dates

Solutions to Oracle system/ user locking

随机推荐

[deeply understand tcapulusdb technology] create a game area for document acceptance

数字化转型的失败原因及成功之道

Introduction to JWT

client-go gin的简单整合十一-Delete

Random talk about redis source code onehundredandtwenty

让知识付费系统视频支持M3U8格式播放的方法

Stochastic Adaptive Dynamics of a Simple Market as a Non-Stationary Multi-Armed Bandit Problem

R语言数据预处理、把类型变量转化为因子变量,把数据集转化为h2o格式、数据集划分(训练集、测试集、验证集)

密码学系列之:PKI的证书格式表示X.509

It supports running in kubernetes, adds multiple connectors, and seatunnel version 2.1.2 is officially released!

EasyDSS问题及解决方案汇总

[deeply understand tcapulusdb technology] create a game zone

JWT简介

Cloud computing in the metauniverse to enhance your digital experience

uniapp小程序商城开发thinkphp6积分商城、团购、秒杀 封装APP

R语言基于h2o包构建二分类模型:使用h2o.glm构建正则化的逻辑回归模型、使用h2o.auc计算模型的AUC值

深度学习常用损失函数总览:基本形式、原理、特点

[deeply understand tcapulusdb technology] realize tcapulusdb transaction management in the operation and maintenance platform

AAAI 2022 | 传统GAN修改后可解释,并保证卷积核可解释性和生成图像真实性

市场开始降温,对NFT 是坏事么?