Google | ICML 2022: The State of Sparse Training in Deep Reinforcement Learning

2022-06-22 19:53:00 【Zhiyuan community】

【Title】The State of Sparse Training in Deep Reinforcement Learning

【Authors】Laura Graesser, Utku Evci, Erich Elsen, Pablo Samuel Castro

【Publication date】2022.6.17

【Paper link】https://arxiv.org/pdf/2206.10369.pdf

【Why we recommend it】In recent years, the use of sparse neural networks has grown rapidly across deep learning, especially in computer vision. Their appeal comes mainly from the smaller number of parameters that must be trained and stored, and from improved learning efficiency. Somewhat surprisingly, few efforts have explored their use in deep reinforcement learning (DRL). In this work, the authors systematically investigate how a number of existing sparse training techniques perform across a variety of DRL agents and environments. Their results corroborate the findings from sparse training in computer vision and extend them to DRL: for the same parameter count, sparse networks perform better than dense networks. The authors also analyze in detail how the various components of DRL are affected by the use of sparse networks, and they conclude by suggesting promising avenues for improving the effectiveness of sparse training methods and for advancing their use in DRL.
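To make the phrase "for the same parameter count" concrete, here is a minimal sketch in plain Python. It is not the paper's code: the layer sizes, the 75% sparsity level, the uniform random mask, and the helper names (`dense_param_count`, `random_mask`, `sparse_param_count`) are all assumptions chosen for illustration, and biases are ignored. It shows that a wider network with most weights masked out can hold exactly as many non-zero weights as a narrower dense one.

```python
import random


def dense_param_count(layer_sizes):
    """Count the weights (biases ignored) of a fully connected network."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def random_mask(n_in, n_out, sparsity, rng):
    """Build a binary mask that keeps a (1 - sparsity) fraction of the weights.

    The kept positions are drawn uniformly at random. This is an assumed,
    illustrative choice, not a method taken from the paper.
    """
    total = n_in * n_out
    keep = round((1.0 - sparsity) * total)
    kept = set(rng.sample(range(total), keep))
    return [[1 if i * n_out + j in kept else 0 for j in range(n_out)]
            for i in range(n_in)]


def sparse_param_count(layer_sizes, sparsity, rng):
    """Count the non-zero weights of a network whose layers are all masked."""
    count = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        mask = random_mask(n_in, n_out, sparsity, rng)
        count += sum(sum(row) for row in mask)
    return count


# Hypothetical sizes: a narrow dense network and a 4x wider sparse one.
dense_small = [4, 16, 2]
sparse_wide = [4, 64, 2]
rng = random.Random(0)

print(dense_param_count(dense_small))              # weights in the dense net
print(sparse_param_count(sparse_wide, 0.75, rng))  # non-zero weights in the sparse net
```

Under these assumptions both networks carry the same number of trainable weights, which is the kind of like-for-like comparison the summary refers to.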

边栏推荐

猜你喜欢

![[nfs failed to mount problem] mount nfs: access denied by server while mounting localhost:/data/dev/mysql](/img/15/cbb95ec823cdde5fb8f032dc45cfc7.png)

[nfs failed to mount problem] mount nfs: access denied by server while mounting localhost:/data/dev/mysql

安装Office的一些工具

第一章 力扣热题100道(1-5)

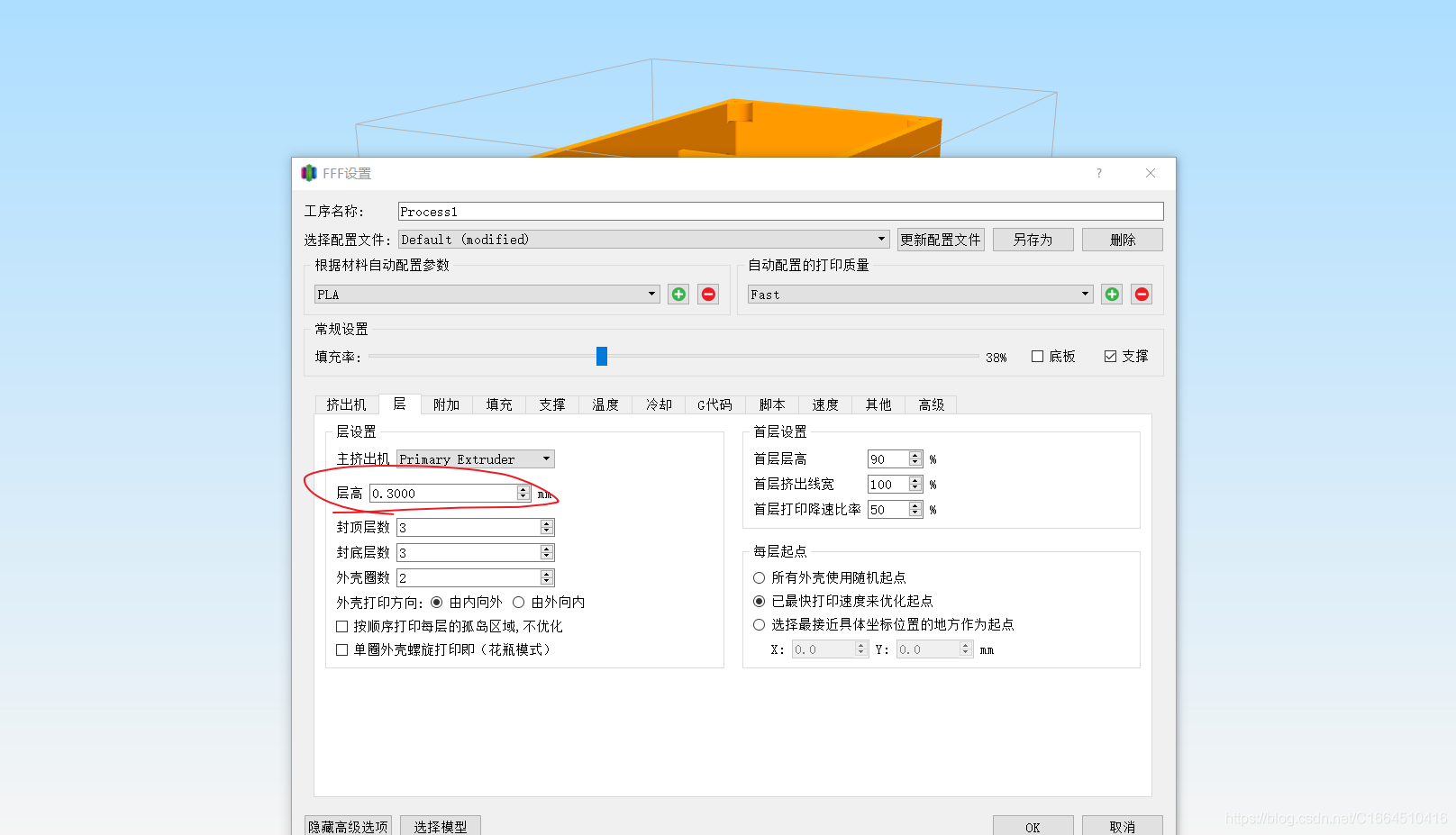

1.3-----Simplify 3D切片软件简单设置

C #, introductory tutorial -- a little knowledge about function parameter ref and source program

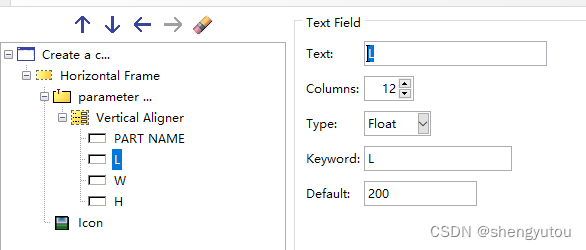

ABAQUS 使用RSG绘制插件初体验

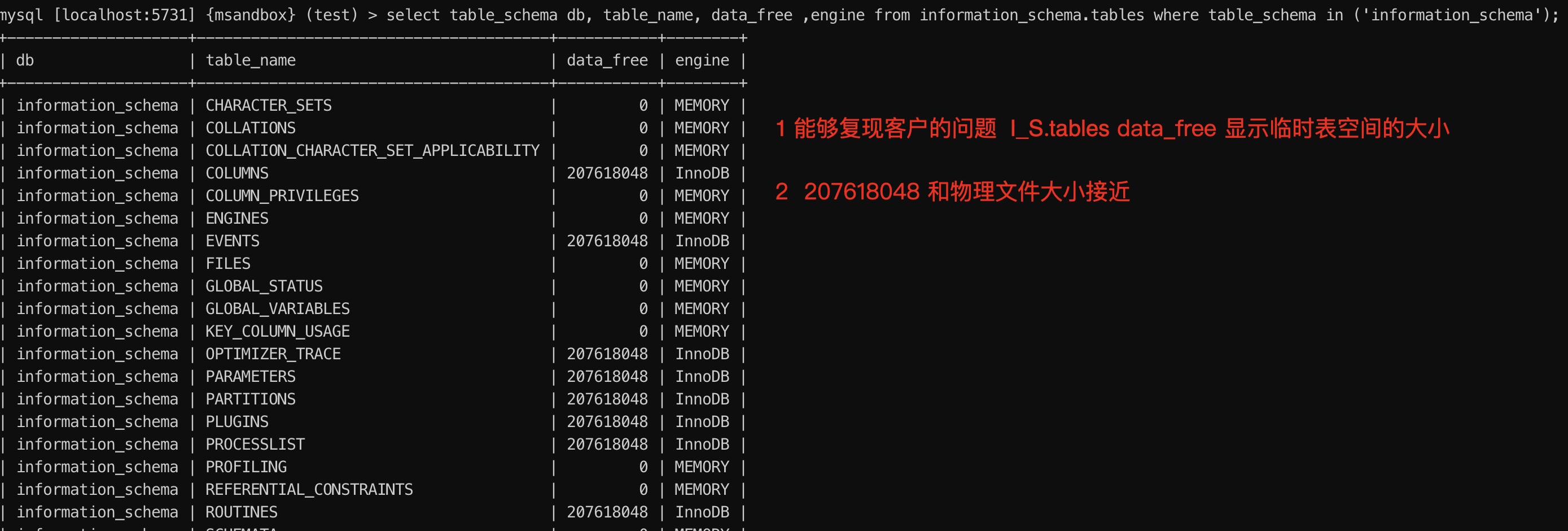

故障分析 | 从 data_free 异常说起

Some problem records of openpnp using process

使用 qrcodejs2 生成二维码详细API和参数

详解openGauss多线程架构启动过程

随机推荐

matlab调用API

Fault analysis | from data_ Free exception

记可视化项目代码设计的心路历程以及理解

MySQL约束

数组对象实现一 一对比(索引和id相同的保留原数据,原数组没有的数据从默认列表加进去)

DIV横向布局

Solution of off grid pin in Altium Designer

MySQL多表操作

K8s deploy MySQL

Focal and global knowledge distillation for detectors

Antd tree tree tree selector subclass required

故障分析 | 从 data_free 异常说起

[in depth understanding of tcapulusdb technology] new models of tcapulusdb

自定义控件AutoScaleMode为Font造成宽度增加的问题

【深入理解TcaplusDB技术】集群管理操作

About Random Forest

第一章 力扣热题100道(1-5)

The custom control autoscalemode causes the problem of increasing the width of font

How to use yincan IS903 to master DIY's own USB flash disk? (good items for practicing BGA welding)

delegate