当前位置:网站首页>Mathematical derivation from perceptron to feedforward neural network

Mathematical derivation from perceptron to feedforward neural network

2022-06-27 19:44:00 【Barnepokhev】

perceptron

Perceptron is a classical linear model for binary classification . Its principle is simple and intuitive , Introduced the concept of learning , It is one of the basic components of neural network .

The definition of perceptron

Suppose the input space is X ∈ R n X\in \Bbb R^n X∈Rn, The output space is Y = { + 1 , − 1 } Y=\{+1,-1\} Y={ +1,−1}. Input x ∈ X x\in X x∈X Represents the eigenvector in the example , Corresponds to a point in the input space ; Output y ∈ Y y\in Y y∈Y Represents the category of the instance . Then called formula (1) This function from input space to output space is the perceptron model .

f ( x ) = s i g n ( w ⋅ x + b ) (1) f(x) =sign(w\cdot x+b) \tag{1} f(x)=sign(w⋅x+b)(1)

among , w w w and b b b For the parameters of the perceptron model , w ∈ R n w\in\Bbb R^n w∈Rn It is called weight or weight vector , b ∈ R b\in \Bbb R b∈R It's called bias , w ⋅ x w\cdot x w⋅x Express w w w and x x x Inner product , s i g n sign sign It's a symbolic function , namely :

s i g n ( x ) = { + 1 , x ≥ 0 − 1 , x < 0 (2) sign(x)=\begin{cases} +1,&x\ge 0\\ -1,&x\lt 0 \end{cases} \tag{2} sign(x)={ +1,−1,x≥0x<0(2)

The geometric meaning of the perceptron

For a given sample set T = { ( x 1 , y 1 ) , ( x 2 , y 2 ) , ⋯ , ( x N , y N ) } T=\{(x_1,y_1),(x_2,y_2),\cdots,(x_N,y_N)\} T={ (x1,y1),(x2,y2),⋯,(xN,yN)}, In which the opposite formula is (1) The linear equation appearing in w ⋅ x + b = 0 w\cdot x+b=0 w⋅x+b=0 Corresponding to the feature space R n \Bbb R^n Rn A hyperplane of S S S, among w w w yes S S S The normal vector of , b b b yes S S S The intercept of . If the characteristic space is linearly separable , Then the hyperplane can exactly divide the feature space into two parts ( Pictured 1 Shown ). The eigenvectors in the two parts correspond to positive and negative samples respectively . here , The hyperplane 𝑆 It is also called separating hyperplane .

Loss function of perceptron

Sum up , The main goal of the perceptron is to determine a hyperplane that can completely separate the positive and negative samples of the data set , The hyperplane is mainly through the continuous adjustment of parameters during training w w w and b b b To determine . as for w w w and b b b Then you can define about w w w and b b b And minimize the loss function to get .

When the number of misclassification points is used as the loss function , The loss function is not about w w w and b b b Continuous differentiable function of , It is not easy to optimize in actual operation , So you can use all misclassification points to the hyperplane S S S As a loss function . input space R n \Bbb R^n Rn Any point in x 0 x_0 x0 To the hyperplane S S S The distance to :

1 ∣ ∣ w ∣ ∣ ∣ w ⋅ x + b ∣ (3) \frac{1}{||w||}|w\cdot x+b| \tag{3} ∣∣w∣∣1∣w⋅x+b∣(3)

When sample label y i y_i yi by + 1 +1 +1 when , w ⋅ x i + b > 0 w\cdot x_i+b\gt 0 w⋅xi+b>0 The classification is correct ; When sample label y i y_i yi by − 1 −1 −1 when , w ⋅ x i + b < 0 w\cdot x_i+b\lt 0 w⋅xi+b<0 The classification is correct , Therefore, the two formulas are integrated as follows :

{ y i ( w ⋅ x i + b ) > 0 , Correct classification y i ( w ⋅ x i + b ) < 0 , Classification error (4) \begin{cases} y_i(w\cdot x_i+b)\gt0,&\text{ Correct classification }\\ y_i(w\cdot x_i+b)\lt0,&\text{ Classification error } \end{cases} \tag{4} { yi(w⋅xi+b)>0,yi(w⋅xi+b)<0, Correct classification Classification error (4)

Therefore, the misclassification point x i x_i xi To the hyperplane S S S The distance is :

− 1 ∣ ∣ w ∣ ∣ y i ( w ⋅ x i + b ) (5) -\frac{1}{||w||}y_i(w\cdot x_i+b) \tag{5} −∣∣w∣∣1yi(w⋅xi+b)(5)

Let's set a hyperplane S S S The set of misclassification points is M M M, So all misclassification points to the hyperplane S S S The total distance is :

− 1 ∣ ∣ w ∣ ∣ ∑ x i ∈ M y i ( w ⋅ x i + b ) (6) -\frac{1}{||w||}\sum_{x_i\in M}y_i(w\cdot x_i+b)\tag{6} −∣∣w∣∣1xi∈M∑yi(w⋅xi+b)(6)

Stop thinking about 1 ∣ ∣ w ∣ ∣ \frac{1}{||w||} ∣∣w∣∣1, The loss function of perceptron learning and training is obtained L ( w , b ) L(w,b) L(w,b):

L ( w , b ) = − ∑ x i ∈ M y i ( w ⋅ x i + b ) (7) L(w,b)=-\sum_{x_i\in M}y_i(w\cdot x_i+b) \tag{7} L(w,b)=−xi∈M∑yi(w⋅xi+b)(7)

Perceptron learning algorithm

The learning goal of the known perceptron is to get the parameter that minimizes the value of the loss function w w w and b b b, namely :

min w , b L ( w , b ) = − ∑ x i ∈ M y i ( w ⋅ x i + b ) (8) \min_{w,b}L(w,b)=-\sum_{x_i\in M}y_i(w\cdot x_i+b) \tag{8} w,bminL(w,b)=−xi∈M∑yi(w⋅xi+b)(8)

because L ( w , b ) L(w,b) L(w,b) Is an independent variable w w w and b b b Simple linear function of , And because only misclassification points in the loss function are involved in the calculation , So you don't have to take derivatives for all the points . Therefore, the descent method adopted by the perceptron is random gradient descent (Stochastic Gradient Descent,SGD).

In the random gradient descent method , Only one sample is randomly selected for each iteration , If the sample is correctly classified under the current hyperplane , No operation ; If the classification is wrong , For this misclassified sample x i x_i xi Find gradient :

∇ w = ∂ − y i ( w ⋅ x i + b ) ∂ w = − y i x i (9) \nabla w = \frac{\partial-y_i(w\cdot x_i+b)}{\partial w}=-y_ix_i \tag{9} ∇w=∂w∂−yi(w⋅xi+b)=−yixi(9) ∇ b = ∂ − y i ( w ⋅ x i + b ) ∂ b = − y i x i (10) \nabla b=\frac{\partial-y_i(w\cdot x_i+b)}{\partial b}=-y_ix_i \tag{10} ∇b=∂b∂−yi(w⋅xi+b)=−yixi(10)

Using the gradient of the solution w w w and b b b updated , have to :

w ← w + η y i x i (11) w\leftarrow w+\eta y_ix_i \tag{11} w←w+ηyixi(11) b ← w + η y i (12) b \leftarrow w+\eta y_i \tag{12} b←w+ηyi(12)

among , η \eta η For learning rate , Represents the speed of learning , Usually take 0 ∼ 1 0∼1 0∼1 Between . Again by Novikoff The theorem shows that , The loss function can be made as small as possible after finite iterations , Until zero , At this point, you can get w w w and b b b Value , Then we get the hyperplane S S S.

Defect and improvement of perceptron model

The perceptron model is simple and intuitive in binary classification , But it has great limitations , That is, we can only deal with linear separable problems . Take the XOR problem in logic as an example , For input { A , B } \{A,B\} { A,B}, When A = B A=B A=B When the output is 1 1 1, When A ≠ B A\ne B A=B When the output is 0 0 0. With 0 , 0 {0,0} 0,0、 0 , 0 {0,0} 0,0、 1 , 0 {1,0} 1,0, 1 , 1 {1,1} 1,1 For example , The output results are shown in table 1 Shown :

| A | B | A XOR B |

|---|---|---|

| 0 | 0 | 1 |

| 0 | 1 | 0 |

| 1 | 0 | 0 |

| 1 | 1 | 1 |

The XOR problem seems very simple , But it is a nonlinear separable problem , So for the perceptron model , The problem is complicated . Will table 1 Display the results on the two-dimensional coordinates as shown in the figure 2 Shown .

From the figure 2 Shown , Since the points with the same DE value occupy the diagonal respectively , So you can't find a straight line anyway ( hyperplane ) Separate the two classes . Of course , Using two perceptron models seems to solve the problem . Pictured 2 Shown , The segmentation hyperplane corresponding to the two perceptron models is the two solid lines in the graph . Set up perceptron 1 by f 1 ( x ) = s i g n ( w 1 ⋅ x + b 1 ) f_1 (x)=sign(w_1⋅x+b_1) f1(x)=sign(w1⋅x+b1); perceptron 2 by f 2 ( x ) = s i g n ( w 2 ⋅ x + b 2 ) f_2 (x)=sign(w_2⋅x+b_2) f2(x)=sign(w2⋅x+b2). And make the perceptron 1 The lower right of the corresponding hyperplane and the perceptron 2 The upper left output of the corresponding hyperplane is + 1 +1 +1; perceptron 1 The upper right of the corresponding hyperplane and the perceptron 2 The lower right of the corresponding hyperplane is output as − 1 -1 −1.

At this point, continue to add the perceptron 3 To integrate the perceptron 1 And perceptron 2 Output result of , You might as well set up a perceptron 3 by f 3 ( x 3 ) = s i g n ( w 3 ⋅ x 3 + b 3 ) f_3 (x_3)=sign(w_3⋅x_3+b_3) f3(x3)=sign(w3⋅x3+b3). among , w 3 = [ 1 , 1 ] w_3=[1,1] w3=[1,1], x 3 = [ f 1 ( x ) , f 2 ( x ) ] x_3=[f_1 (x),f_2 (x)] x3=[f1(x),f2(x)], b 3 = − 1 b_3=-1 b3=−1.

obviously , When the perceptron 3 Output is + 1 +1 +1 when , Indicates that the sample belongs to a positive class ; Output is − 1 -1 −1 when , Indicates that the sample belongs to negative class . The operation architecture of the three perceptrons is shown in Figure 3 Shown :

actually , The XOR problem can be solved by using the superposition value of two perceptrons , perceptron 3 Just added for uniform output . In terms of promotion , When the problem is more complicated , We can consider using more perceptron models to stack . Although a perceptron has limited capabilities , But once the sensors are stacked in multiple layers , Its ability will be greatly enhanced . Practical application , In order to better solve the linear inseparable problem , The perceptron model is on the way to multi-layer , This has become the cornerstone of various neural networks . The neurons in each layer are all interconnected with those in the next layer , The neural network structure without the same layer or cross layer connection is called multilayer perceptron or multilayer feedforward neural network .

边栏推荐

猜你喜欢

External interrupt experiment based on stm32f103zet6 library function

華大單片機KEIL報錯_WEAK的解决方案

什么是SSR/SSG/ISR?如何在AWS上托管它们?

《第五项修炼》(The Fifth Discipline):学习型组织的艺术与实践

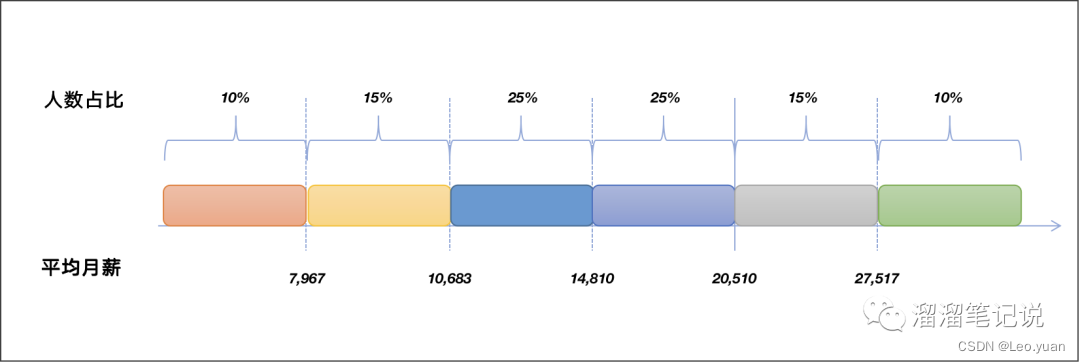

数据分析师太火?月入3W?用数据告诉你这个行业的真实情况

【ELT.ZIP】OpenHarmony啃论文俱乐部—数据密集型应用内存压缩

Minmei new energy rushes to Shenzhen Stock Exchange: the annual accounts receivable exceeds 600million and the proposed fund-raising is 450million

GIS遥感R语言学习看这里

openssl客户端编程:一个不起眼的函数导致的SSL会话失败问题

电脑安全证书错误怎么处理比较好

随机推荐

Blink SQL built in functions

如何封裝調用一個庫

基于STM32F103ZET6库函数跑马灯实验

Hi,你有一份Code Review攻略待查收!

使用logrotate对宝塔的网站日志进行自动切割

昱琛航空IPO被终止:曾拟募资5亿 郭峥为大股东

运算符的基础知识

IDEA 官网插件地址

如何利用 RPA 实现自动化获客?

Tupu digital twin intelligent energy integrated management and control platform

Usage of rxjs mergemap

实战回忆录:从Webshell开始突破边界

Bit.Store:熊市漫漫,稳定Staking产品或成主旋律

网上期货开户安全么?

Market status and development prospect forecast of global 4-methyl-2-pentanone industry in 2022

What is ICMP? What is the relationship between Ping and ICMP?

Memoirs of actual combat: breaking the border from webshell

Add in address of idea official website

数仓的字符截取三胞胎:substrb、substr、substring

基于STM32F103ZET6库函数按键输入实验