当前位置:网站首页>Pytorch遇到的坑:为什么模型训练时,L1loss损失无法下降?

Pytorch遇到的坑:为什么模型训练时,L1loss损失无法下降?

2022-06-25 06:39:00 【一个菜鸟的奋斗】

最近在用L1loss做一个回归模型的训练,发现模型训练过程中loss及其不稳定,且训练效果很差,终于找到原因了!

原代码如下:

criterion = nn.L1Loss()

def train():

print('Epoch {}:'.format(epoch + 1))

model.train()

# switch to train mode

for i, sample_batched in enumerate(train_dataloader):

input, target = sample_batched['geno'], sample_batched['pheno']

# compute output

output = model(input.float().cuda())

loss = criterion(output, target.float().cuda())

# compute gradient and do SGD step

optimizer.zero_grad()

loss.backward()

optimizer.step()以上代码问题出在:

loss = criterion(output, target.float().cuda())

我输入的batchsize是4,因此output的size是[4,1],也就是一个二维的数据;target的size是[4]。loss输出的结果是一个正确的数值。这也是我没发现问题的原因!我们看一下pytorch库里l1_loss的代码:

def l1_loss(input, target, size_average=None, reduce=None, reduction='mean'):

# type: (Tensor, Tensor, Optional[bool], Optional[bool], str) -> Tensor

r"""l1_loss(input, target, size_average=None, reduce=None, reduction='mean') -> Tensor

Function that takes the mean element-wise absolute value difference.

See :class:`~torch.nn.L1Loss` for details.

"""

if not torch.jit.is_scripting():

tens_ops = (input, target)

if any([type(t) is not Tensor for t in tens_ops]) and has_torch_function(tens_ops):

return handle_torch_function(

l1_loss, tens_ops, input, target, size_average=size_average, reduce=reduce,

reduction=reduction)

if not (target.size() == input.size()):

warnings.warn("Using a target size ({}) that is different to the input size ({}). "

"This will likely lead to incorrect results due to broadcasting. "

"Please ensure they have the same size.".format(target.size(), input.size()),

stacklevel=2)

if size_average is not None or reduce is not None:

reduction = _Reduction.legacy_get_string(size_average, reduce)

if target.requires_grad:

ret = torch.abs(input - target)

if reduction != 'none':

ret = torch.mean(ret) if reduction == 'mean' else torch.sum(ret)

else:

expanded_input, expanded_target = torch.broadcast_tensors(input, target)

ret = torch._C._nn.l1_loss(expanded_input, expanded_target, _Reduction.get_enum(reduction))

return ret

代码里的warning,要求input和target的size必须一致,不然会出现不对的结果。我自己代码里把warning给ignore了,所以这个warning一直没看到!这里提醒大家,一定不要随意ignore warning,并且要好好看warning,不要只看error。。。。

我把代码改成以下,就没有问题了:

loss = criterion(output.squeeze(), target.float().cuda())

既然问题解决了,得知道为啥size不匹配会导致模型出错呀,不然找了那么久的bug不是白瞎了= =

我们先尝试错误输入,输入的size是[4,1],target的size是[4]:

input = tensor([[-0.3704, -0.2918, -0.6895, -0.6023]], device='cuda:0', grad_fn=<PermuteBackward>) target = tensor([ 63.6000, 127.0000, 102.2000, 115.4000], device='cuda:0') expanded_input, expanded_target = torch.broadcast_tensors(input, target) ret = torch._C._nn.l1_loss(expanded_input, expanded_target, _Reduction.get_enum(reduction))

返回 expanded_input:

tensor([[-0.3704, -0.2918, -0.6895, -0.6023],

[-0.3704, -0.2918, -0.6895, -0.6023],

[-0.3704, -0.2918, -0.6895, -0.6023],

[-0.3704, -0.2918, -0.6895, -0.6023]], device='cuda:0',

grad_fn=<PermuteBackward>)

返回 expanded_target:

tensor([[ 63.6000, 63.6000, 63.6000, 63.6000],

[127.0000, 127.0000, 127.0000, 127.0000],

[102.2000, 102.2000, 102.2000, 102.2000],

[115.4000, 115.4000, 115.4000, 115.4000]], device='cuda:0')

返回ret:

tensor(102.5385, device='cuda:0', grad_fn=<PermuteBackward>)

接下来是正确输入,输入的size是[4],target的size是[4]:

input = tensor([-0.3704, -0.2918, -0.6895, -0.6023], device='cuda:0',

grad_fn=<PermuteBackward>)

target = tensor([ 63.6000, 127.0000, 102.2000, 115.4000], device='cuda:0')

expanded_input, expanded_target = torch.broadcast_tensors(input, target)

ret = torch._C._nn.l1_loss(expanded_input, expanded_target, _Reduction.get_enum(reduction))

返回 expanded_input:

tensor([[-0.3704, -0.2918, -0.6895, -0.6023],

[-0.3704, -0.2918, -0.6895, -0.6023],

[-0.3704, -0.2918, -0.6895, -0.6023],

[-0.3704, -0.2918, -0.6895, -0.6023]], device='cuda:0',

grad_fn=<PermuteBackward>)

返回ret:

tensor(102.5385, device='cuda:0', grad_fn=<PermuteBackward>)

经过mean求平均之后,返回的ret值是一样的,唯一不同的是expanded_input。这个中间值不一样,是否会导致梯度变化?为了验证这个想法,我们在代码中输出input的梯度值。

for name, parms in model.named_parameters():

print('name:', name)

print('grad_requirs:', parms.requires_grad)

print('grad_value:', parms.grad)

以下为错误输入,输入的size是[4,1],target的size是[4]:

===

name: module.linear1.bias

grad_requirs: True

grad_value: tensor([-0.1339, 0.0000, 0.0505, 0.0219, -0.1498, 0.0265, -0.0604, -0.0385,

0.0471, 0.0000, 0.0304, 0.0000, 0.0000, 0.0406, 0.0066, 0.0000,

-0.0259, -0.1544, 0.0000, -0.0208, 0.0050, 0.0000, 0.0625, -0.0474,

0.0000, 0.0858, -0.0116, 0.0777, 0.0000, -0.0828, 0.0000, -0.1265],

device='cuda:0')

===

name: module.linear2.weight

grad_requirs: True

grad_value: tensor([[-0.9879, -0.0000, -1.0088, -0.1680, -0.7312, -0.0066, -0.3093, -0.7478,

-0.3104, -0.0000, -0.1615, -0.0000, -0.0000, -0.3162, -0.1047, -0.0000,

-0.4030, -0.3385, -0.0000, -0.1738, -0.0831, -0.0000, -0.3490, -0.1129,

-0.0000, -0.8220, -0.0279, -0.3754, -0.0000, -0.3566, -0.0000, -0.5950]],

device='cuda:0')

===

name: module.linear2.bias

grad_requirs: True

grad_value: tensor([-1.], device='cuda:0')

===

以下为正确输入,输入的size是[4],target的size是[4]得到的梯度:

===

name: module.linear1.bias

grad_requirs: True

grad_value: tensor([-0.1351, 0.0000, 0.0000, 0.0000, -0.0377, 0.0000, -0.0809, -0.0394,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0202, 0.0098, -0.0365,

-0.0263, -0.2063, -0.1533, -0.0626, 0.0050, 0.0000, 0.0000, -0.0950,

0.0000, 0.0000, -0.0348, 0.0000, 0.0000, -0.1108, -0.0402, -0.1693],

device='cuda:0')

===

name: module.linear2.weight

grad_requirs: True

grad_value: tensor([[-7.4419, 0.0000, 0.0000, 0.0000, -1.9245, 0.0000, -2.7927, -2.4551,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, -0.0309, -0.4843, -0.0211,

-1.7046, -7.7090, -0.1696, -0.9997, -0.0862, 0.0000, 0.0000, -2.0397,

0.0000, 0.0000, -0.3125, 0.0000, 0.0000, -3.9532, -0.0643, -6.5799]],

device='cuda:0')

===

name: module.linear2.bias

grad_requirs: True

grad_value: tensor([-1.], device='cuda:0')

===

果然,梯度值不一样!!!经验教训:每一行代码都要深入理解其作用的机理,不要想当然!

边栏推荐

- CPDA | how to start the growth path of data analysts?

- C#入门教程

- RTKLIB-b33版本中GALILEO广播星历存储问题

- Analysis on the trend of the number of national cinemas, film viewers and average ticket prices in 2021 [figure]

- Several good weather plug-ins

- MySQL (12) -- Notes on changing tables

- 【UVM入門 ===> Episode_9 】~ 寄存器模型、寄存器模型的集成、寄存器模型的常規方法、寄存器模型的應用場景

- Ca-is1200u current detection isolation amplifier has been delivered in batch

- Harmony美食菜单界面

- Unity3D邪门实现之GUI下拉菜单Dropdown设计无重复项

猜你喜欢

Operate cnblogs metaweblog API

Sichuan earth microelectronics ca-is1300 isolated operational amplifier for current detection is on the market

正版photoshop2022購買體驗經曆分享

JMeter introduction practice ----- use of global variables and local variables

Research on 3D model retrieval method based on two channel attention residual network - Zhou Jie - paper notes

Chuantu microelectronics ca-if1051 can-fd transceiver

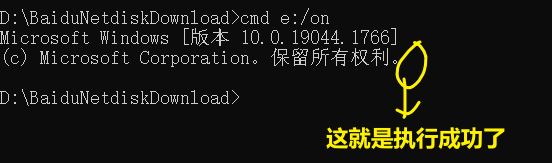

【批处理DOS-CMD命令-汇总和小结】-cmd扩展命令、扩展功能(cmd /e:on、cmd /e:off)

Ns32f103c8t6 can perfectly replace stm32f103c8t6

Escape analysis of 982 golang

Intel announced five new technological developments, including quantum computing, neural pseudo computing, machine programming, integrated optoelectronics, and secure computing

随机推荐

对链表进行插入排序[dummy统一操作+断链核心--被动节点]

【UVM入门 ===> Episode_9 】~ 寄存器模型、寄存器模型的集成、寄存器模型的常规方法、寄存器模型的应用场景

What is the new business model of Taishan crowdfunding in 2022?

音频(五)音频特征提取

VectorDraw Developer Framework 10.10

Intel announced five new technological developments, including quantum computing, neural pseudo computing, machine programming, integrated optoelectronics, and secure computing

Can I open a stock account with a compass? Is it safe?

Enter an integer with any number of bits, and output the sum of each bit of the number. For example: 1234 – > 10

The perfect presentation of Dao in the metauniverse, and platofarm creates a farm themed metauniverse

Why "New Year's Eve", the original memory burst!

Orcad Schematic常用功能

Tempest HDMI leak receive 1

14 BS object Node name Name attrs string get node name attribute content

线程状态变化涉及哪些常用 API

13 `bs_ duixiang. Tag tag ` get a tag object

Operate cnblogs metaweblog API

Sichuan earth microelectronics ca-is1200 isolated operational amplifier for current detection

Tuwei Digital Isolator and interface chip can perfectly replace imported brands Ti and ADI

Debian introduction

【批處理DOS-CMD命令-匯總和小結】-cmd擴展命令、擴展功能(cmd /e:on、cmd /e:off)