
Paddle User Guide

2022-06-24 00:46:00 【Shenluohua】

Contents

- 1. 10-minute quick start with Paddle: handwritten digit recognition
- 2. Tensor
- 3. Dataset definition and loading
- 4. Data preprocessing
- 5. Model building
- 5.1 Using a built-in model directly
- 5.2 Introduction to paddle.nn and model parameters
- 5.3 Building a network with paddle.nn.Sequential
- 5.4 Building a network with paddle.nn.Layer
- 5.5 FAQs on model building, training, and evaluation
- 5.6 FAQs on model parameters (gradient clipping, weight sharing, layer-wise learning rates, etc.)
- 6. Model training, evaluation, and inference
- 7. Model saving and loading
- 8. Advanced Paddle development

Note:

This article is based on the Paddle website and the official PaddlePaddle documentation.

To install the CPU version of Paddle:

! python3 -m pip install paddlepaddle -i https://mirror.baidu.com/pypi/simple

For other versions, see the official quick installation guide.

1. 10-minute quick start with Paddle: handwritten digit recognition

This section follows the official "10-minute quick start" tutorial.

It uses the MNIST handwritten digit dataset for an image classification task, to give a first impression of how Paddle is used.

The complete code for the handwritten digit recognition task is as follows:

import paddle
import numpy as np
from paddle.vision.transforms import Normalize

# Define the image normalization; 'CHW' means the image layout is [C channels, H height, W width]
transform = Normalize(mean=[127.5], std=[127.5], data_format='CHW')

# Download the dataset and initialize the Dataset objects
train_dataset = paddle.vision.datasets.MNIST(mode='train', transform=transform)
test_dataset = paddle.vision.datasets.MNIST(mode='test', transform=transform)

# Build and initialize the network
lenet = paddle.vision.models.LeNet(num_classes=10)
model = paddle.Model(lenet)

# Configure training: optimizer, loss function, and evaluation metric
model.prepare(paddle.optimizer.Adam(parameters=model.parameters()),
              paddle.nn.CrossEntropyLoss(),
              paddle.metric.Accuracy())

# Train the model
model.fit(train_dataset, epochs=5, batch_size=64, verbose=1)

# Evaluate the model
model.evaluate(test_dataset, batch_size=64, verbose=1)

# Save the model
model.save('./output/mnist')

# Load the model
model.load('output/mnist')

# Take one image from the test set
img, label = test_dataset[0]

# Reshape the image from 1*28*28 to 1*1*28*28 by adding a batch dimension, to match the model's input format
img_batch = np.expand_dims(img.astype('float32'), axis=0)

# Run inference and print the result; predict_batch returns a list, so take its first element to get the prediction
out = model.predict_batch(img_batch)[0]
pred_label = out.argmax()
print('true label: {}, pred label: {}'.format(label[0], pred_label))

# Visualize the image
from matplotlib import pyplot as plt
plt.imshow(img[0])

In short, a deep learning task generally consists of the following core steps:

- Dataset definition and loading
- Model building
- Model training and evaluation
- Model inference

The following subsections walk through these steps one by one, to help you quickly learn how to carry out a deep learning task with the Paddle framework.

1.1 Dataset definition

Paddle ships with a number of built-in datasets, including:

- paddle.vision.datasets: common datasets from the computer vision (CV) field
- paddle.text: common datasets from the natural language processing (NLP) field

As the printed output below shows, Paddle includes:

- In the CV field: the MNIST, FashionMNIST, Flowers, Cifar10, Cifar100, and VOC2012 datasets
- In the NLP field: the Conll05st, Imdb, Imikolov, Movielens, UCIHousing, WMT14, and WMT16 datasets

import paddle
print('Computer vision (CV) datasets:', paddle.vision.datasets.__all__)
print('Natural language processing (NLP) datasets:', paddle.text.__all__)

Computer vision (CV) datasets: ['DatasetFolder', 'ImageFolder', 'MNIST', 'FashionMNIST', 'Flowers', 'Cifar10', 'Cifar100', 'VOC2012']

Natural language processing (NLP) datasets: ['Conll05st', 'Imdb', 'Imikolov', 'Movielens', 'UCIHousing', 'WMT14', 'WMT16', 'ViterbiDecoder', 'viterbi_decode']

In this task, the built-in MNIST dataset is already split into a training set and a test set, selected by passing 'train' or 'test' to the mode parameter.

1.2 Dataset loading

1.2.1 Loading a built-in dataset directly

Paddle's built-in classic datasets can be used directly:

import paddle
from paddle.vision.transforms import Normalize

transform = Normalize(mean=[127.5], std=[127.5], data_format='CHW')

# Download the dataset and initialize the Dataset objects
train_dataset = paddle.vision.datasets.MNIST(mode='train', transform=transform)
test_dataset = paddle.vision.datasets.MNIST(mode='test', transform=transform)

# Print the number of images in each dataset
print('{} images in train_dataset, {} images in test_dataset'.format(len(train_dataset), len(test_dataset)))

60000 images in train_dataset, 10000 images in test_dataset

Once the dataset is initialized, you can iterate over it directly with the following code.

from matplotlib import pyplot as plt

for data in train_dataset:
    image, label = data
    print('shape of image: ', image.shape)
    plt.title(str(label))
    plt.imshow(image[0])
    break

shape of image: (1, 28, 28)

In addition, paddle.vision.transforms provides common image transformations such as center cropping, horizontal flipping, and normalization. Here, a Normalize transform is passed in when the MNIST dataset is initialized so that each image is normalized; normalizing the images can speed up convergence during training.
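To make the normalization concrete: with mean=127.5 and std=127.5, each pixel value x is mapped to (x - 127.5) / 127.5, so the 0 to 255 range of an 8-bit image becomes -1 to 1. The sketch below only reproduces that arithmetic in plain Python; the helper name normalize_pixel is illustrative and is not part of Paddle.

```python
def normalize_pixel(value, mean=127.5, std=127.5):
    """Apply the (value - mean) / std arithmetic to a single pixel value."""
    return (value - mean) / std

# 8-bit pixel values span 0..255; after normalization they span -1..1.
for pixel in (0, 127.5, 255):
    print(pixel, '->', normalize_pixel(pixel))
```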

1.2.2 Reading a custom dataset

See the chapters "Dataset definition and loading" and "Data preprocessing".

paddle.io.Dataset and paddle.io.DataLoader are the APIs for defining custom datasets and loading them.

1.3 Defining the model

1.3.1 Built-in models

paddle.vision.models contains a number of classic models from the CV field, such as LeNet. A single line of code builds and initializes the LeNet network; the num_classes parameter sets the number of classes.

1.3.2 Printing model information

paddle.summary conveniently prints the network structure and parameter information.

# Build and initialize the network
lenet = paddle.vision.models.LeNet(num_classes=10)

# Print the network structure and parameters
paddle.summary(lenet, (1, 1, 28, 28))

---------------------------------------------------------------------------
Layer (type)    Input Shape          Output Shape        Param #
===========================================================================
Conv2D-1        [[1, 1, 28, 28]]     [1, 6, 28, 28]      60
ReLU-1          [[1, 6, 28, 28]]     [1, 6, 28, 28]      0
MaxPool2D-1     [[1, 6, 28, 28]]     [1, 6, 14, 14]      0
Conv2D-2        [[1, 6, 14, 14]]     [1, 16, 10, 10]     2,416
ReLU-2          [[1, 16, 10, 10]]    [1, 16, 10, 10]     0
MaxPool2D-2     [[1, 16, 10, 10]]    [1, 16, 5, 5]       0
Linear-1        [[1, 400]]           [1, 120]            48,120
Linear-2        [[1, 120]]           [1, 84]             10,164
Linear-3        [[1, 84]]            [1, 10]             850
===========================================================================
Total params: 61,610
Trainable params: 61,610
Non-trainable params: 0
---------------------------------------------------------------------------
Input size (MB): 0.00
Forward/backward pass size (MB): 0.11
Params size (MB): 0.24
Estimated Total Size (MB): 0.35
---------------------------------------------------------------------------

{'total_params': 61610, 'trainable_params': 61610}

1.3.3 Custom neural networks

Paddle's paddle.nn.Sequential and paddle.nn.Layer APIs let you build custom neural networks flexibly and conveniently; see the "Model building" chapter.

1.4 Model training and evaluation

See the chapter "Model training, evaluation, and inference".

1.4.1 Optimizer and model training

Model training involves the following steps:

Wrap the model with paddle.Model. This turns the network structure into an instance that can be trained, evaluated, and used for inference through Paddle's high-level API, which simplifies the later steps.

Use paddle.Model.prepare to configure training. This includes:

- paddle.optimizer: optimizer APIs
- the loss layers in paddle.nn: loss function APIs
- paddle.metric: evaluation metric APIs

Use paddle.Model.fit to set the loop parameters and start training. The parameters include the training data source train_dataset, the batch size batch_size, and the number of epochs epochs. Once called, it runs the whole training loop automatically.

Because this is a classification task, the loss function is the commonly used CrossEntropyLoss (cross-entropy loss), the optimizer is Adam, and the metric is Accuracy, which computes the model's accuracy on the training set.

# Wrap the model so it can be trained, evaluated, and used for inference
model = paddle.Model(lenet)

# Configure training: optimizer, loss function, and evaluation metric
model.prepare(paddle.optimizer.Adam(parameters=model.parameters()),
              paddle.nn.CrossEntropyLoss(),
              paddle.metric.Accuracy())

# Start training
model.fit(train_dataset, epochs=5, batch_size=64, verbose=1)

The loss value printed in the log is the current step, and the metric is the average value of previous steps.

Epoch 1/5

step 938/938 [==============================] - loss: 0.0011 - acc: 0.9865 - 14ms/step

Epoch 2/5

step 938/938 [==============================] - loss: 0.0045 - acc: 0.9885 - 14ms/step

Epoch 3/5

step 938/938 [==============================] - loss: 0.0519 - acc: 0.9896 - 14ms/step

Epoch 4/5

step 938/938 [==============================] - loss: 4.1989e-05 - acc: 0.9912 - 14ms/step

Epoch 5/5

step 938/938 [==============================] - loss: 0.0671 - acc: 0.9918 - 15ms/step
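The 938 steps per epoch in the log above follow from the dataset size and the batch size: 60,000 training images in batches of 64 need 938 batches, the last of which is only partly full. The sketch below is plain Python arithmetic, not a Paddle API; the helper name steps_per_epoch is hypothetical.

```python
import math

def steps_per_epoch(num_samples, batch_size, drop_last=False):
    """Batches per epoch; the final partial batch is kept unless drop_last is True."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)

print(steps_per_epoch(60000, 64))                  # 938, as in the training log
print(steps_per_epoch(10000, 64))                  # 157 for the 10,000-image test set
print(steps_per_epoch(60000, 64, drop_last=True))  # 937 when the partial batch is dropped
```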

1.4.2 Model evaluation

After training, call paddle.Model.evaluate to evaluate the trained model.

# Evaluate the model
model.evaluate(test_dataset, batch_size=64, verbose=1)

Eval begin...

step 157/157 [==============================] - loss: 5.7177e-04 - acc: 0.9859 - 6ms/step

Eval samples: 10000

{'loss': [0.00057177414], 'acc': 0.9859}

1.5 Model saving, loading, and inference

See the chapters "Model saving and loading" and "Model training, evaluation, and inference".

1.5.1 Saving the model

Call paddle.Model.save to save the model:

# Save the model; the folder is created automatically
model.save('./output/mnist')

The code above saves two files in the output directory: mnist.pdopt holds the optimizer parameters and mnist.pdparams holds the model parameters.

output

├── mnist.pdopt # Optimizer parameters

└── mnist.pdparams # Parameters of the model

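To save the model once per epoch, include the epoch number in the save path (epoch here is assumed to be the loop variable of your training loop):

import os

data_dir = './output'
# e.g. ./output/mnist_3 for epoch 3
model.save(os.path.join(data_dir, 'mnist_' + str(epoch)))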

1.5.2 Loading the model and running inference

Call paddle.Model.load to load the model, then run inference with paddle.Model.predict_batch:

# Load the model
model.load('output/mnist')

# Take one image from the test set
img, label = test_dataset[0]

# Reshape the image from 1*28*28 to 1*1*28*28 by adding a batch dimension, to match the model's input format
img_batch = np.expand_dims(img.astype('float32'), axis=0)

# Run inference and print the result; predict_batch returns a list, so take its first element to get the prediction
out = model.predict_batch(img_batch)[0]
pred_label = out.argmax()
print('true label: {}, pred label: {}'.format(label[0], pred_label))

# Visualize the image
from matplotlib import pyplot as plt
plt.imshow(img[0])

true label: 7, pred label: 7

2. Tensor

See "Introduction to Tensor" and the paddle.Tensor API documentation.

Paddle uses tensors (Tensor) to represent the data that flows through a neural network. A Tensor can be thought of as a multidimensional array, similar to a NumPy array (ndarray). In the Paddle framework, the inputs and outputs of a neural network, as well as the parameters of the network, are all Tensor data structures.

2.1 Creating a Tensor

2.1.1 Creating from given data

Given a Python sequence (such as a list or tuple), paddle.to_tensor creates a Tensor of any dimension:

import numpy as np
import paddle

x = paddle.to_tensor(2)
y = paddle.to_tensor([[1.0, 2.0, 3.0],
                      [4.0, 5.0, 6.0]])
tensor_temp = paddle.to_tensor(np.array([1.0, 2.0]))
print(x, y)

Tensor(shape=[1], dtype=int64, place=Place(cpu), stop_gradient=True,

[2])

Tensor(shape=[2, 3], dtype=float32, place=Place(cpu), stop_gradient=True,

[[1., 2., 3.],

[4., 5., 6.]])

A Tensor must be "rectangular": along any dimension, the number of elements must be the same. Otherwise an exception is thrown:

ValueError:
    Faild to convert input data to a regular ndarray :
     - Usually this means the input data contains nested lists with different lengths.

- paddle.tolist converts a Tensor to Python sequence data.
- The Tensor.numpy method converts a Tensor to a NumPy array.
- When a Tensor is created from given data, Paddle creates it by copying, so it does not share memory with the original data.
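The "rectangular" requirement can be checked on a nested Python list before handing it to paddle.to_tensor. The following is a minimal pure-Python sketch, not Paddle's own validation logic; nested_shape is a hypothetical helper that returns the shape of a regular nested list and None for a ragged one.

```python
def nested_shape(data):
    """Return the shape of a nested list, or None if the list is ragged."""
    if not isinstance(data, list):
        return []  # a scalar leaf contributes no dimension
    child_shapes = [nested_shape(item) for item in data]
    if any(shape is None for shape in child_shapes):
        return None
    if any(shape != child_shapes[0] for shape in child_shapes):
        return None  # siblings disagree, so the data is not rectangular
    return [len(data)] + (child_shapes[0] if child_shapes else [])

print(nested_shape([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]))  # [2, 3]
print(nested_shape([[1.0, 2.0], [4.0, 5.0, 6.0]]))       # None
```

A None result corresponds to the kind of input that triggers the ValueError shown above.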

2.1.2 Creating with a given shape

To create a Tensor with a given shape, use paddle.zeros, paddle.ones, or paddle.full:

paddle.zeros([m, n], dtype=None, name=None) # Creates a Tensor of shape [m, n] filled with 0

paddle.ones([m, n], dtype=None) # Creates a Tensor of shape [m, n] filled with 1

paddle.full([m, n], 10, dtype=None, name=None) # Creates a Tensor of shape [m, n] filled with 10

For example:

paddle.ones([2,3],'float32')

2.1.3 Creating over a given interval

To create a Tensor over a given interval, use paddle.arange or paddle.linspace:

# Creates a Tensor that divides the interval [start, end) evenly with step size step
paddle.arange(start, end, step, dtype=None, name=None)

# Creates a Tensor of num evenly spaced values over the interval [start, stop], both ends included
paddle.linspace(start, stop, num, dtype=None, name=None)

data = paddle.linspace(0, 10, 1, 'float32') # [0.0]

data = paddle.linspace(0, 10, 2, 'float32') # [0.,10.]

data = paddle.linspace(0, 10, 5, 'float32') # [0.0, 2.5, 5.0, 7.5, 10.0]
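The difference between the two interval conventions is easy to miss: arange takes a step and excludes the end, while linspace takes a count and includes the stop value. The pure-Python sketch below mirrors that behaviour for illustration only; arange_values and linspace_values are hypothetical helpers (positive step assumed), not Paddle functions.

```python
def arange_values(start, end, step):
    """Values start, start+step, ... strictly below end (half-open interval)."""
    values = []
    current = start
    while current < end:
        values.append(current)
        current += step
    return values

def linspace_values(start, stop, num):
    """num evenly spaced values from start to stop, both endpoints included."""
    if num == 1:
        return [float(start)]
    gap = (stop - start) / (num - 1)
    return [start + i * gap for i in range(num)]

print(arange_values(0, 10, 2.5))   # [0, 2.5, 5.0, 7.5]
print(linspace_values(0, 10, 5))   # [0.0, 2.5, 5.0, 7.5, 10.0]
```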

Besides creating a Tensor from given data, a given shape, or a given interval, Paddle also supports the following creation methods:

- paddle.empty: creates a Tensor from shape and dtype whose element values are not initialized.
- paddle.ones_like, paddle.zeros_like, paddle.full_like, paddle.empty_like: create a Tensor with the same shape and dtype as another Tensor.
- paddle.clone: creates a copy identical to another Tensor. This API supports gradient computation.

clone_x = paddle.clone(x)

- paddle.rand(shape, dtype=None, name=None): a random Tensor drawn from a uniform distribution over [0, 1)
- paddle.randn(shape, dtype=None, name=None): a random Tensor drawn from the standard normal distribution (mean 0, standard deviation 1)
- paddle.randint(low=0, high=None, shape=[1], dtype=None, name=None): a random Tensor drawn from a uniform distribution over [low, high)
- To create a random Tensor with the same element values on every run, set a random seed by combining paddle.seed with paddle.rand.

2.1.4 Creating from image and text data

- paddle.vision.transforms.ToTensor: converts PIL.Image data directly to a Tensor.
- paddle.to_tensor: converts image labels (usually Python or NumPy data) to a Tensor.
- For text, the text data must first be encoded as numbers and then converted to a Tensor with paddle.to_tensor.

Taking images as an example, the following code converts a randomly generated image to a Tensor.

import numpy as np
from PIL import Image
import paddle.vision.transforms as T
import paddle.vision.transforms.functional as F

# Create a random image
fake_img = Image.fromarray((np.random.rand(224, 224, 3) * 255.).astype(np.uint8))

transform = T.ToTensor()
tensor = transform(fake_img)  # Convert the image to a Tensor with ToTensor()
print(tensor)

Tensor(shape=[3, 224, 224], dtype=float32, place=Place(gpu:0), stop_gradient=True,

[[[0.78039223, 0.72941178, 0.34117648, ..., 0.76470596, 0.57647061, 0.94901967],

...,

[0.49803925, 0.72941178, 0.80392164, ..., 0.08627451, 0.97647065, 0.43137258]]])

Note: in practice, Paddle's data loading API paddle.io.DataLoader automatically converts the data defined by a paddle.io.Dataset to Tensors, so manual conversion is not required. The details are covered in later sections.

2.1.5 Automatic Tensor creation

- paddle.io.DataLoader returns an iterator over the data of the original Dataset; every element yielded by the iterator is a Tensor.
- paddle.Model.fit, paddle.Model.predict: these high-level APIs automatically convert incoming data to Tensors if it is not already a Tensor, and then run training or inference. So even code that never explicitly converts data to Tensors runs normally, which improves programming efficiency and fault tolerance.

The following example prints the data of the original dataset and the data returned after feeding it through DataLoader. You can see that the data structure changes from a Python list to a Tensor.

import paddle
from paddle.vision.transforms import Compose, Normalize

transform = Compose([Normalize(mean=[127.5],
                               std=[127.5],
                               data_format='CHW')])
test_dataset = paddle.vision.datasets.MNIST(mode='test', transform=transform)
print(test_dataset[0][1])  # Print the label of the first sample in the original dataset

loader = paddle.io.DataLoader(test_dataset)
for data in enumerate(loader):
    x, label = data[1]
    print(label)  # Print the label of the first sample returned by the DataLoader iterator
    break

[7] # In the original dataset, the label is a Python list

Tensor(shape=[1, 1], dtype=int64, place=Place(gpu_pinned), stop_gradient=True,
       [[7]]) # After passing through DataLoader, the label is a Tensor

2.2 Tensor attributes

Tensor(shape=[3], dtype=float32, place=Place(gpu:0), stop_gradient=True,
       [2., 3., 4.])

As the printed Tensor above shows, a Tensor carries information such as shape, dtype, and place. These are all important Tensor attributes.

2.2.1 Tensor shape and reshape

Use Tensor.shape to view the shape of a Tensor. The related concepts are:

- shape: the number of elements along each dimension of the Tensor.
- ndim: the number of dimensions of the Tensor. A scalar has 0 dimensions, a vector 1, a matrix 2, and a Tensor can have any number of dimensions.
- axis or dimension: an axis of the Tensor, i.e. one particular dimension.
- size: the total number of elements in the Tensor.

Create a 4-dimensional Tensor to see how these concepts relate to each other:

ndim_4_Tensor = paddle.ones([2, 3, 4, 5])
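For the shape [2, 3, 4, 5] used above, the relationship between shape, ndim, and size can be worked out by hand: ndim is the length of the shape, and size is the product of its entries. The sketch below does this with plain Python on the shape list, so it does not depend on Paddle being installed.

```python
import math

shape = [2, 3, 4, 5]       # same shape as ndim_4_Tensor
ndim = len(shape)          # number of dimensions
size = math.prod(shape)    # total number of elements: 2 * 3 * 4 * 5
print(ndim, size)          # 4 120
```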

- paddle.reshape: changes the shape of a Tensor without changing its size or its element data.
- paddle.squeeze: reduces the number of dimensions by removing dimensions of size 1.
- paddle.unsqueeze: increases the number of dimensions by inserting a dimension of size 1.
- paddle.flatten: flattens the Tensor's data over a specified range of consecutive dimensions.
- paddle.transpose: rearranges the dimensions of a Tensor.

x = paddle.to_tensor([1, 2, 3]).reshape([1, 3])  # shape=[1, 3]
y = paddle.rand([5, 1, 10]).squeeze(axis=1)  # shape=[5, 10]
x1 = paddle.squeeze(x, axis=0)  # shape=[3]; only a dimension of size 1 is removed
y3 = paddle.unsqueeze(y, axis=0)  # shape=[1, 5, 10]
z = paddle.randn([2, 3, 4])
z_transposed = paddle.transpose(z, perm=[1, 0, 2])
print(z_transposed.shape)  # [3, 2, 4]
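The resulting shapes of these operations follow simple rules, which the sketch below applies to plain shape lists. The three helpers are hypothetical illustrations (non-negative axis values assumed) and operate on shapes only, not on Tensors.

```python
def squeeze_shape(shape, axis):
    """Drop dimension `axis` only if its size is 1; otherwise keep the shape."""
    if shape[axis] == 1:
        return shape[:axis] + shape[axis + 1:]
    return list(shape)

def unsqueeze_shape(shape, axis):
    """Insert a new dimension of size 1 at position `axis`."""
    return shape[:axis] + [1] + shape[axis:]

def transpose_shape(shape, perm):
    """Output dimension i takes its size from input dimension perm[i]."""
    return [shape[p] for p in perm]

print(squeeze_shape([5, 1, 10], axis=1))            # [5, 10]
print(unsqueeze_shape([5, 10], axis=0))             # [1, 5, 10]
print(transpose_shape([2, 3, 4], perm=[1, 0, 2]))   # [3, 2, 4]
```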

2.2.2 Tensor data types and changing the data type

- Tensor.dtype: shows the data type (dtype) of a Tensor. Supported types include bool, float16, float32, float64, uint8, int8, int16, int32, int64, complex64, and complex128.
- paddle.cast: changes the dtype of a Tensor:

x = paddle.to_tensor(1.0)  # float32 by default
y = paddle.cast(x, dtype='float64')  # float64

2.2.3 Tensor device location (place)

- Tensor.place: specifies the device on which the Tensor is allocated. Supported device locations include CPU, GPU, and pinned memory.
- paddle.device.set_device: sets the global default device location. A value specified through Tensor.place takes precedence over the global default.
- When place is not specified, the default device location matches the installed version of the Paddle framework. If the GPU version of Paddle is installed, the default device is the GPU, i.e. the Tensor's place defaults to paddle.CUDAPlace.

# Create a Tensor on the CPU
cpu_Tensor = paddle.to_tensor(1, place=paddle.CPUPlace())
print(cpu_Tensor.place)  # Place(cpu)

# Create a Tensor on the GPU
gpu_Tensor = paddle.to_tensor(1, place=paddle.CUDAPlace(0))
print(gpu_Tensor.place)  # Shows that the Tensor is on GPU device 0

2.2.4 stop_gradient, and in-place versus out-of-place operations

stop_gradient indicates whether gradient computation is stopped for a Tensor. The default is True, meaning no gradient is computed. If certain parameters do not need to be updated, set their stop_gradient to True:

eg = paddle.to_tensor(1)
print("Tensor stop_gradient:", eg.stop_gradient)
eg.stop_gradient = False
print("Tensor stop_gradient:", eg.stop_gradient)

- paddle.reshape: an out-of-place operation. It does not modify the original Tensor and returns a new Tensor instead.
- paddle.reshape_: an in-place operation. It stores the result in the original Tensor; the output Tensor shares data with the input Tensor, and no Tensor data is copied.

2.3 Accessing a Tensor

2.3.1 Indexing, slicing, and modifying a Tensor

A Tensor can be modified by indexing or slicing along one or more dimensions. This modifies the Tensor's values in place, and the original values are not kept.

2.3.2 Mathematical and logical operations

Paddle also provides a rich set of Tensor operation APIs, more than 100 in total, covering mathematical operations, logical operations, linear algebra, and more. Each of them can be called in two ways: as a function (paddle.add(x, y)) or as a Tensor method (x.add(y)):

x = paddle.to_tensor([[1.1, 2.2], [3.3, 4.4]], dtype="float64")

y = paddle.to_tensor([[5.5, 6.6], [7.7, 8.8]], dtype="float64")

print(paddle.add(x, y), "\n") # Method 1

print(x.add(y), "\n") # Method 2

Mathematical operations:

x.abs() # Element-wise absolute value
x.ceil() / x.floor() # Element-wise rounding up / down
x.round() # Element-wise rounding to the nearest integer
x.exp() # Element-wise exponential with base e
x.log() # Element-wise natural logarithm
x.reciprocal() # Element-wise reciprocal
x.square() / x.sqrt() # Element-wise square / square root
x.sin() / x.cos() # Element-wise sine / cosine
x.max() / x.min() # Maximum / minimum along the given dimension; over all dimensions by default
x.prod() # Product of elements along the given dimension; over all dimensions by default
x.sum() # Sum of elements along the given dimension; over all dimensions by default

The Paddle framework overloads Python's arithmetic magic methods, for example:

x + y -> x.add(y) # Element-wise addition
x - y -> x.subtract(y) # Element-wise subtraction
x * y -> x.multiply(y) # Element-wise multiplication
x / y -> x.divide(y) # Element-wise division
x % y -> x.mod(y) # Element-wise remainder
x ** y -> x.pow(y) # Element-wise power

Logical operations:

x.isfinite() # Tests whether each element is finite, i.e. neither inf nor nan
x.equal_all(y) # Tests whether all elements of the two Tensors are equal; returns a boolean Tensor of shape [1]
x.equal(y) # Tests whether each pair of elements is equal; returns a boolean Tensor of the same shape
x.not_equal(y) # Tests whether each pair of elements is not equal
x.allclose(y) # Tests whether all elements of x are close to the corresponding elements of y; returns a boolean Tensor of shape [1]

Similarly, the Paddle framework overloads Python's comparison magic methods, which give the same results as the methods above.

x == y -> x.equal(y) # Tests whether each pair of elements is equal
x != y -> x.not_equal(y) # Tests whether each pair of elements is not equal
x < y -> x.less_than(y) # Tests whether each element of x is less than the corresponding element of y
x <= y -> x.less_equal(y) # Tests whether each element of x is less than or equal to the corresponding element of y
x > y -> x.greater_than(y) # Tests whether each element of x is greater than the corresponding element of y
x >= y -> x.greater_equal(y) # Tests whether each element of x is greater than or equal to the corresponding element of y

Linear algebra:

x.t() # Matrix transpose
x.transpose([1, 0]) # Swap dimension 0 and dimension 1
x.norm('fro') # Frobenius norm of the matrix
x.dist(y, p=2) # 2-norm of the matrix (x - y)
x.matmul(y) # Matrix multiplication
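As a worked check of what these linear algebra calls compute, the sketch below evaluates the Frobenius norm, the 2-norm of the difference, and the matrix product for the same x and y values used earlier, in plain Python. The helper functions are illustrative stand-ins written for this example, not Paddle's implementations.

```python
import math

x = [[1.1, 2.2], [3.3, 4.4]]
y = [[5.5, 6.6], [7.7, 8.8]]

def frobenius_norm(m):
    """Square root of the sum of squared entries."""
    return math.sqrt(sum(v * v for row in m for v in row))

def matmul(a, b):
    """Row-by-column matrix product of two nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

diff = [[xv - yv for xv, yv in zip(x_row, y_row)] for x_row, y_row in zip(x, y)]
print(frobenius_norm(x))     # what x.norm('fro') measures
print(frobenius_norm(diff))  # what x.dist(y, p=2) measures, about 8.8 here
print(matmul(x, y))          # what x.matmul(y) computes
```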

3. Dataset definition and loading

See the chapter "Dataset definition and loading".

In the Paddle framework, defining and loading a dataset takes two core steps:

- Define the dataset: map the raw images, text, and other samples stored on disk, together with their labels, to a Dataset so that they can later be read by index. Preprocessing such as data transformation and data augmentation can also be done inside the Dataset. In Paddle, paddle.io.Dataset is the recommended way to define a custom dataset. In addition, paddle.vision.datasets and paddle.text contain a number of built-in classic datasets that can be used directly.
- Iterate over the dataset: automatically batch the samples, shuffle them, and so on, so that they can be read iteratively during training. Multi-process asynchronous reading is also supported to speed up data loading. In Paddle, use paddle.io.DataLoader to iterate over a dataset.

3.1 Defining a dataset

3.1.1 Loading a built-in dataset directly

This was covered in section 1.2.1 of this article.

3.1.2 Defining a custom dataset with paddle.io.Dataset

In real projects you usually need to define a dataset from your own data. This can be done with the paddle.io.Dataset base class.

Create a subclass of paddle.io.Dataset and implement the following three functions:

- __init__: initializes the dataset, mapping the sample file paths on disk and their labels to a list.
- __getitem__: defines how to fetch the sample for a given index, and returns the single item (sample data, label) at that index.
- __len__: returns the total number of samples in the dataset.

The raw MNIST dataset files can be downloaded as follows:

# Download and extract the raw MNIST dataset
! wget https://paddle-imagenet-models-name.bj.bcebos.com/data/mnist.tar

# On Windows, open bash and run the following command to extract the tar archive
! tar -xf mnist.tar

After extraction, the images are stored under mnist/train and mnist/val, each with a label.txt file. Each line of a label file has the form 'imgs/5/0.jpg\t5': an image path relative to that directory, a tab, and the label.

Define the dataset with paddle.io.Dataset:

import os
import cv2
import numpy as np
from paddle.io import Dataset
from paddle.vision.transforms import Normalize

class MyDataset(Dataset):
    """Step 1: inherit from the paddle.io.Dataset class."""

    def __init__(self, data_dir, label_path, transform=None):
        """Step 2: implement __init__ to initialize the dataset and map samples and labels to a list."""
        super(MyDataset, self).__init__()
        self.data_list = []
        with open(label_path, encoding='utf-8') as f:
            for line in f.readlines():
                # Each line looks like 'imgs/5/0.jpg\t5\n': strip() removes the newline, split('\t') splits on the tab
                image_path, label = line.strip().split('\t')  # ('imgs/5/0.jpg', '5')
                image_path = os.path.join(data_dir, image_path)  # './mnist/train/imgs/5/0.jpg'
                self.data_list.append([image_path, label])
        # Store the data processing method as an attribute of the custom dataset
        self.transform = transform

    def __getitem__(self, index):
        """Step 3: implement __getitem__ to define how the item at a given index is fetched, and return it as (sample data, label)."""
        # Look up the image path and label by index
        image_path, label = self.data_list[index]
        # Read the image as grayscale
        image = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)
        # Paddle trains with float32 by default, so convert the image data to float32
        image = image.astype('float32')
        # Apply the data processing method to the image
        if self.transform is not None:
            image = self.transform(image)
        # CrossEntropyLoss requires an int label, so convert the label to int
        label = int(label)
        # Return the image and its label
        return image, label

    def __len__(self):
        """Step 4: implement __len__ to return the total number of samples in the dataset."""
        return len(self.data_list)

# Define the image normalization; 'CHW' means the image layout is [C channels, H height, W width]
transform = Normalize(mean=[127.5], std=[127.5], data_format='CHW')

# Create the datasets and print the number of samples
train_custom_dataset = MyDataset('mnist/train', 'mnist/train/label.txt', transform)
test_custom_dataset = MyDataset('mnist/val', 'mnist/val/label.txt', transform)
print('train_custom_dataset images: ', len(train_custom_dataset), 'test_custom_dataset images: ', len(test_custom_dataset))

The code above defines a custom dataset class MyDataset. It inherits from the paddle.io.Dataset base class and implements the three functions __init__, __getitem__, and __len__.

- __init__ reads and parses the label file, and stores every image path image_path with its label label in the list data_list.
- __getitem__ defines how to fetch the image data for a given index: it reads the image, preprocesses it, converts the label format, and finally returns the image and its label as image, label.
- __len__ returns the length of the list data_list that was initialized in __init__.
- In addition, __init__ and __getitem__ can also perform data preprocessing such as flipping, cropping, and normalizing the image before returning the processed item (sample data, label). Such operations increase the diversity of the image data and help improve the model's generalization. The Paddle framework has dozens of built-in image processing methods in paddle.vision.transforms; see the "Data preprocessing" chapter.
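The parsing step inside __init__ can be tried in isolation. The sketch below applies the same strip/split logic to the sample line quoted in the code comment; parse_label_line is a hypothetical helper introduced only for this illustration, and the label is converted to int immediately rather than in __getitem__.

```python
import os

def parse_label_line(line, data_dir):
    """Split one 'relative_path<TAB>label' line into (full_path, int_label)."""
    image_path, label = line.strip().split('\t')
    return os.path.join(data_dir, image_path), int(label)

path, label = parse_label_line('imgs/5/0.jpg\t5\n', 'mnist/train')
print(path, label)
```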

3.2 Iteratively read data sets

3.2.1 Direct iterative reading of custom data sets

Similar to built-in data sets , You can use the following code to iteratively read the custom data set directly :

for data in train_custom_dataset:
    image, label = data
    print('shape of image: ', image.shape)
    plt.title(str(label))
    plt.imshow(image[0])
    break

shape of image: (1, 28, 28)

3.2.2 Use paddle.io.DataLoader Define data readers

In the Paddle framework, paddle.io.DataLoader is the recommended API for reading a dataset with multiple processes. It also splits the data into batches automatically.

# Define and initialize the data reader
train_loader = paddle.io.DataLoader(train_custom_dataset, batch_size=64, shuffle=True, num_workers=1, drop_last=True)
# Call the DataLoader to read data iteratively
for batch_id, data in enumerate(train_loader()):
    images, labels = data
    print("batch_id: {}, Training data shape: {}, Tag data shape: {}".format(batch_id, images.shape, labels.shape))
    break

batch_id: 0, Training data shape: [64, 1, 28, 28], Tag data shape: [64]

- After the data reader is defined, a for loop can conveniently iterate over the batches for model training.
- paddle.Model.fit in the high-level API already wraps part of DataLoader's functionality, so when training you only need to define the Dataset; there is no need to define a DataLoader separately. For details, see the Model training, evaluation, and inference chapter.
- DataLoader defines the batch size, order, and other properties of sampling through the fields batch_size, shuffle, and drop_last. The batch sampler BatchSampler produces a list of batch indices, and the corresponding samples are fetched from the Dataset by index to load each batch.
- These three DataLoader fields can also be replaced by a single batch_sampler field, to which a custom batch sampler instance is passed. Either approach achieves the same effect; the batch_sampler form gives more flexibility in defining sampling rules.
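To make the roles of batch_size, shuffle, and drop_last concrete, here is a minimal plain-Python sketch of how a batch sampler could turn these three settings into lists of sample indices. The helper name batch_indices and its seed parameter are illustrative assumptions, not Paddle APIs, and the real BatchSampler may differ in detail.

```python
import random

def batch_indices(num_samples, batch_size, shuffle=False, drop_last=False, seed=0):
    """Return one list of sample indices per batch (illustrative helper, not a Paddle API)."""
    indices = list(range(num_samples))
    if shuffle:
        # Shuffle the sample order; a fixed seed keeps the sketch reproducible
        random.Random(seed).shuffle(indices)
    # Cut the index list into consecutive chunks of batch_size
    batches = [indices[i:i + batch_size] for i in range(0, num_samples, batch_size)]
    # Optionally discard a final batch that is smaller than batch_size
    if drop_last and batches and len(batches[-1]) < batch_size:
        batches.pop()
    return batches

print(batch_indices(10, batch_size=4))                       # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
print(len(batch_indices(10, batch_size=4, drop_last=True)))  # 2
```

With 60000 training samples and batch_size=64, the same arithmetic gives 938 batches per epoch, or 937 when drop_last=True.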

3.2.3 ( Optional ) Custom sampler

Please refer to the tutorial for details

A sampler defines how samples are drawn from the dataset, for example sequential sampling, batch sampling, random sampling, or distributed sampling. The sampler returns a list of dataset indices according to the configured sampling rules, and the data reader DataLoader then fetches the corresponding samples from the dataset by index.

The Paddle framework provides several samplers under paddle.io, such as the batch sampler BatchSampler, the distributed batch sampler DistributedBatchSampler, the sequential sampler SequenceSampler, and the random sampler RandomSampler.

3.2.4 How to configure DataLoader for asynchronous data reading in multi-card parallel training

In Paddle, configuring asynchronous reading for multi-card training differs little from single-card training. In dynamic graph mode, only multi-process multi-card training is currently supported, and each process uses only one device, such as one GPU card. In this case the configuration is the same as for single-card training; just make sure that each process uses the correct card.

For concrete examples, see the examples in the paddle.io.DataLoader API documentation.

4. Data preprocessing

This section takes image data as an example , Introduce the method of data preprocessing .

4.1 paddle.vision.transforms brief introduction

The Paddle framework has dozens of image processing methods built into paddle.vision.transforms, including common operations such as random cropping, rotation, and changing image brightness or contrast. For a brief introduction to each method, see the API documentation.

transform = CenterCrop(224)  # Crop the input image around its center
transform = RandomHorizontalFlip(0.5)  # Flip the image horizontally with the given probability (default 0.5)
transform = RandomVerticalFlip(0.5)  # Flip the image vertically with the given probability (default 0.5)
transform = RandomRotation(90)  # Rotate the image by a random angle in the range [-90°, 90°]
# Randomly adjust the brightness, contrast, saturation, and hue of the image
transform = ColorJitter(brightness=0.5, contrast=0.5, saturation=0.5, hue=0.5)

- Single call:

from paddle.vision.transforms import Resize
# Define a transform that resizes the image
transform = Resize(size=28)

- Combined calls with Compose:

from paddle.vision.transforms import Compose, RandomRotation, Resize
# Define the data processing pipeline: a random rotation followed by a resize
transform = Compose([RandomRotation(10), Resize(size=32)])
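As a rough illustration of what a combined call means, the sketch below chains plain callables in order, each receiving the output of the previous one. ComposeSketch, scale, and center are hypothetical names written only for this example; this is not Paddle's Compose implementation, and it works on a list of pixel values rather than an image.

```python
class ComposeSketch:
    """Apply a list of callables in order (illustrative stand-in for a Compose-style transform)."""

    def __init__(self, transforms):
        self.transforms = list(transforms)

    def __call__(self, data):
        # Feed the output of each transform into the next one
        for transform in self.transforms:
            data = transform(data)
        return data

def scale(pixels):
    # Map pixel values from [0, 255] to [0, 1]
    return [p / 255.0 for p in pixels]

def center(pixels):
    # Shift values from [0, 1] to [-0.5, 0.5]
    return [p - 0.5 for p in pixels]

pipeline = ComposeSketch([scale, center])
print(pipeline([0, 255]))  # [-0.5, 0.5]
```

The order of the list matters: swapping scale and center here would produce different values, just as swapping a rotation and a resize changes the result on real images.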

4.2 Apply data preprocessing operations in a dataset

- Apply in a built-in dataset of the framework

# Pass the defined data processing method through the transform field to apply it to a built-in dataset
train_dataset = paddle.vision.datasets.MNIST(mode='train', transform=transform)

- Apply in custom datasets

For a custom dataset, pass the defined data processing method into the dataset's __init__ function, store it as an attribute of the custom dataset class, and then apply it to the image in __getitem__. See the custom dataset code in Section 3.1.2 of this article.

5. Model networking

Model networking is an important part of a deep learning task. It defines the layer structure of the neural network and how data is computed from input to output (the forward computation). There are three common ways to build a model:

- Use a built-in model directly
- Build with paddle.nn.Sequential
- Build with paddle.nn.Layer

In addition, the Paddle framework provides the paddle.summary function to view the network structure, the input and output shape of each layer, and parameter information.

5.1 Use the built-in model directly

Paddle has some classic computer vision models built into paddle.vision.models; a single line of code completes the network construction and initialization.

import paddle

print('Built-in models of the Paddle framework:', paddle.vision.models.__all__)

Built-in models of the Paddle framework: ['ResNet', 'resnet18', 'resnet34', 'resnet50', 'resnet101', 'resnet152', 'VGG', 'vgg11', 'vgg13', 'vgg16', 'vgg19', 'MobileNetV1', 'mobilenet_v1', 'MobileNetV2', 'mobilenet_v2', 'LeNet']

Taking the LeNet model as an example, the following code builds the network:

# Build and initialize the network
lenet = paddle.vision.models.LeNet(num_classes=10)
# Show the network structure and parameters
paddle.summary(lenet, (1, 1, 28, 28))

5.2 Introduction to paddle.nn and model parameters

See the paddle.nn documentation.

5.2.1 paddle.nn

paddle.nn defines a rich set of neural network layers and related function APIs, including:

- Container layers: the OOD-based dynamic graph Layer paddle.nn.Layer, the sequential container paddle.nn.Sequential, etc.
- 1-D to 3-D convolution layers: for example, the 1-D convolution layer paddle.nn.Conv1D and the 1-D transposed convolution layer paddle.nn.Conv1DTranspose
- Pooling layers: 1-D, 2-D, and 3-D average pooling, max pooling, etc.
- Padding layers: 1-D, 2-D, and 3-D padding layers
- Recurrent neural network layers: paddle.nn.RNN, paddle.nn.LSTM, paddle.nn.GRU, etc.
- Transformer related: paddle.nn.Transformer, paddle.nn.MultiHeadAttention (multi-head attention), paddle.nn.TransformerDecoder, paddle.nn.TransformerEncoder
- Linear layer: paddle.nn.Linear
- Dropout layers: paddle.nn.Dropout, etc.
- Activation layers: paddle.nn.GELU, paddle.nn.Softmax, and other activation functions
- Loss layers: the cross entropy loss layer paddle.nn.CrossEntropyLoss, paddle.nn.MSELoss, etc.
- Normalization layers: paddle.nn.BatchNorm, paddle.nn.LayerNorm, etc.
- Embedding layer: paddle.nn.Embedding

5.2.2 Parameters of the model (Parameter)

All parameters optimized during training (the weights and biases) can be obtained through the network's parameters() and named_parameters() methods. These methods allow finer control over the network, for example setting the parameters of some layers so that they are not updated.

The following sample code uses named_parameters() to get the names and values of all LeNet parameters, and prints the name (name) and shape (shape) of each parameter:

for name, param in lenet.named_parameters():
    print(f"Layer: {name} | Size: {param.shape}")

Layer: features.0.weight | Size: [6, 1, 3, 3]

Layer: features.0.bias | Size: [6]

Layer: features.3.weight | Size: [16, 6, 5, 5]

Layer: features.3.bias | Size: [16]

Layer: fc.0.weight | Size: [400, 120]

Layer: fc.0.bias | Size: [120]

Layer: fc.1.weight | Size: [120, 84]

Layer: fc.1.bias | Size: [84]

Layer: fc.2.weight | Size: [84, 10]

Layer: fc.2.bias | Size: [10]
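The shapes listed above are enough to work out how many trainable parameters the network has: each parameter contributes the product of its dimensions. The following plain-Python sketch copies the shapes from the printed output; the dictionary name shapes is just an illustration.

```python
from math import prod

# Parameter shapes copied from the named_parameters() output above
shapes = {
    "features.0.weight": [6, 1, 3, 3],
    "features.0.bias": [6],
    "features.3.weight": [16, 6, 5, 5],
    "features.3.bias": [16],
    "fc.0.weight": [400, 120],
    "fc.0.bias": [120],
    "fc.1.weight": [120, 84],
    "fc.1.bias": [84],
    "fc.2.weight": [84, 10],
    "fc.2.bias": [10],
}

# Each parameter holds prod(shape) scalar values
total = sum(prod(shape) for shape in shapes.values())
print(total)  # 61610
```

Most of the parameters sit in the first fully connected layer (400 x 120 + 120 = 48120), which is typical for small convolutional networks.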

5.3 Use paddle.nn.Sequential networking

This method suits sequential, linear network structures: simply add the layers one by one to paddle.nn.Sequential in the order of the model structure. For example, the code that builds the LeNet model structure is as follows:

from paddle import nn

# Use paddle.nn.Sequential structure LeNet Model

lenet_Sequential = nn.Sequential(

nn.Conv2D(1, 6, 3, stride=1, padding=1),

nn.ReLU(),

nn.MaxPool2D(2, 2),

nn.Conv2D(6, 16, 5, stride=1, padding=0),

nn.ReLU(),

nn.MaxPool2D(2, 2),

nn.Flatten(),

nn.Linear(400, 120),

nn.Linear(120, 84),

nn.Linear(84, 10)

)

# Visualization model networking structure and parameters

paddle.summary(lenet_Sequential,(1, 1, 28, 28))

When a network is built with Sequential, the forward computation is carried out automatically in the order in which the layers are stacked, so the code that defines the forward function can be omitted. Because Sequential can only express simple linear structures, models that need branching must be built with paddle.nn.Layer.
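The input size 400 of the first Linear layer follows from how each layer changes the spatial size of a 28 x 28 input. The sketch below walks through that arithmetic using the standard output-size formula for convolution and pooling without dilation; conv_out and pool_out are helper names introduced only for this example.

```python
def conv_out(size, kernel, stride=1, padding=0):
    # Output size of a convolution along one spatial dimension (no dilation)
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, kernel, stride):
    # Output size of a pooling layer along one spatial dimension (no padding)
    return (size - kernel) // stride + 1

size = 28                                             # MNIST images are 28 x 28
size = conv_out(size, kernel=3, stride=1, padding=1)  # Conv2D(1, 6, 3, padding=1)  -> 28
size = pool_out(size, kernel=2, stride=2)             # MaxPool2D(2, 2)             -> 14
size = conv_out(size, kernel=5, stride=1, padding=0)  # Conv2D(6, 16, 5, padding=0) -> 10
size = pool_out(size, kernel=2, stride=2)             # MaxPool2D(2, 2)             -> 5

# Flatten turns 16 channels of 5 x 5 feature maps into one vector
flat_features = 16 * size * size
print(flat_features)  # 400
```

If the input size or any kernel, stride, or padding value changes, the first Linear layer's input size has to be recomputed in the same way.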

5.4 Use paddle.nn.Layer networking

This method suits more complex network structures. Building the network consists of three steps:

- Create a class that inherits from paddle.nn.Layer;
- Define the neural network layers used by the network in the class constructor __init__;
- Use the defined layers to perform the forward computation in the class's forward function.

Still taking the LeNet model as an example, the code for building it with paddle.nn.Layer is as follows:

# Build the LeNet model by subclassing
class LeNet(nn.Layer):
    def __init__(self, num_classes=10):
        super(LeNet, self).__init__()
        self.num_classes = num_classes
        # Build the features subnet, used for feature extraction from the input image
        self.features = nn.Sequential(
            nn.Conv2D(1, 6, 3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2D(2, 2),
            nn.Conv2D(6, 16, 5, stride=1, padding=0),
            nn.ReLU(),
            nn.MaxPool2D(2, 2))
        # Build the linear subnet, used for classification
        if num_classes > 0:
            self.linear = nn.Sequential(
                nn.Linear(400, 120),
                nn.Linear(120, 84),
                nn.Linear(84, num_classes)
            )

    # Perform the forward computation
    def forward(self, inputs):
        x = self.features(inputs)
        if self.num_classes > 0:
            x = paddle.flatten(x, 1)
            x = self.linear(x)
        return x

lenet_SubClass = LeNet()
# Show the network structure and parameters
params_info = paddle.summary(lenet_SubClass, (1, 1, 28, 28))
print(params_info)

In the code above, LeNet is divided into two subnets, features and linear: features extracts features from the input image, and linear classifies them into the ten digits.

5.5 FAQ on networking, training, and evaluation

See "FAQ on networking, training, and evaluation".

5.6 FAQ on model parameters (gradient clipping, weight sharing, layer-wise learning rates, etc.)

See "Common problems in parameter adjustment".

6. Model training, evaluation, and inference

The Paddle framework provides two ways to train, evaluate, and run inference:

- Paddle high-level API: first wrap the model with paddle.Model, then complete training, evaluation, and inference through Model.fit, Model.evaluate, and Model.predict. This approach needs less code and is good for getting started quickly.

- Paddle basic API: provides implementations of basic components such as loss functions, optimizers, evaluation metrics, parameter updates, and back propagation. It can be applied more flexibly to training, evaluation, and inference tasks, and makes it easy to customize individual components.

6.1 Specify the training hardware

By default the Paddle framework automatically selects the hardware that matches the installed version. For example, if the GPU version of Paddle is installed, the GPU is used for training automatically without manual configuration. So in general this step is not necessary.

If the GPU version of Paddle is installed but you want to train on the CPU, you can switch with paddle.device.set_device. If the machine has more than one GPU card, you can also use this API to select a specific card for training; if none is specified, the default is 'gpu:0'.

import paddle
# Train on the CPU
paddle.device.set_device('cpu')
# Train on GPU card 0
# paddle.device.set_device('gpu:0')

This section only uses the single-machine single-card scenario to introduce model training. For single-machine multi-card or multi-machine multi-card training, see Distributed training. Besides CPU and GPU training, the Paddle framework also supports training on AI processors such as Baidu Kunlun XPU and Huawei Ascend NPU.

6.2 Load the dataset and define the model

Taking the MNIST handwritten digit recognition task as an example, the code is as follows:

from paddle.vision.transforms import Normalize

transform = Normalize(mean=[127.5], std=[127.5], data_format='CHW')

# load MNIST Training set and test set

train_dataset = paddle.vision.datasets.MNIST(mode='train', transform=transform)

test_dataset = paddle.vision.datasets.MNIST(mode='test', transform=transform)

# Model networking , Build and initialize a model mnist

mnist = paddle.nn.Sequential(

paddle.nn.Flatten(1, -1),

paddle.nn.Linear(784, 512),

paddle.nn.ReLU(),

paddle.nn.Dropout(0.2),

paddle.nn.Linear(512, 10)

)

6.3 Train, evaluate, and run inference with the paddle.Model high-level API

- Wrap the model with paddle.Model

# Wrap the network as a model instance, ready for later training, evaluation, and inference
model = paddle.Model(mnist)

- Configure training with Model.prepare

Model.prepare sets up the configuration needed before training, including:

- paddle.optimizer to set the optimization algorithm, and paddle.optimizer.lr to set the learning rate policy;

- a loss layer from paddle.nn to set how the loss is computed;

- paddle.metric to set how the evaluation metrics are computed;

- amp_configs (str|dict|None): the configuration of mixed precision training, usually a dict, but it can also be a str.

# Prepare for training: set the optimizer and its learning rate, pass the network parameters to the optimizer, and set the loss function and accuracy metric

model.prepare(optimizer=paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters()),

loss=paddle.nn.CrossEntropyLoss(),

metrics=paddle.metric.Accuracy())

- Train the model with Model.fit

Call the Model.fit interface to start training. At least three key parameters need to be specified: the training dataset, the number of epochs, and the batch size.

# Start training: specify the training dataset, the number of epochs, the batch size, and the log format

model.fit(train_dataset,

epochs=5,

batch_size=64,

verbose=1)

The full list of parameters is:

fit(train_data=None, eval_data=None, batch_size=1, epochs=1, eval_freq=1,
    log_freq=10, save_dir=None, save_freq=1, verbose=2,
    drop_last=False, shuffle=True, num_workers=0, callbacks=None)

train_data (Dataset|DataLoader) - An iterable data source, such as an instance of paddle.io.Dataset or paddle.io.DataLoader.

eval_data (Dataset|DataLoader) - Same as above. When given, evaluation runs after each epoch. Default: None.

batch_size (int) - Batch size of the training or evaluation data. Ignored when train_data or eval_data is a DataLoader instance. Default: 1.

shuffle (bool) - Whether to shuffle the samples. Ignored when train_data is a DataLoader instance. Default: True.

epochs (int) - Number of training epochs. Default: 1.

eval_freq (int) - Evaluation frequency: evaluate once every this many epochs. Default: 1.

log_freq (int) - Logging frequency: print a log entry once every this many steps. Default: 10.

save_dir (str|None) - The folder where the model is saved. If not set, the model is not saved. Default: None.

save_freq (int) - Saving frequency: save the model once every this many epochs. Default: 1.

verbose (int) - Verbosity mode; must be 0, 1, or 2. With 0 no log is printed, with 1 the log is printed with a progress bar, and with 2 the log is printed line by line. Default: 2.

drop_last (bool) - Whether to discard the last incomplete batch. Default: False.

num_workers (int) - Number of subprocesses used to read data. Ignored when train_data and eval_data are both DataLoader instances. Default: 0.

callbacks (Callback|list[Callback]|None) - Callbacks passed in to perform user-defined operations at each stage of training, for example collecting data and parameters during training.

The loss value printed in the log is the current step, and the metric is the average value of previous steps.

Epoch 1/5

step 10/938 [..............................] - loss: 0.9679 - acc: 0.4109 - ETA: 13s - 14ms/step

step 938/938 [==============================] - loss: 0.1158 - acc: 0.9020 - 10ms/step

Epoch 2/5

step 938/938 [==============================] - loss: 0.0981 - acc: 0.9504 - 10ms/step

Epoch 3/5

step 938/938 [==============================] - loss: 0.0215 - acc: 0.9588 - 10ms/step

Epoch 4/5

step 938/938 [==============================] - loss: 0.0134 - acc: 0.9643 - 10ms/step

Epoch 5/5

step 938/938 [==============================] - loss: 0.3371 - acc: 0.9681 - 11ms/step
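The log note says that the loss is the value of the current step while the metric is averaged over the steps so far. A minimal sketch of that bookkeeping, assuming the metric accumulates correct and total counts across batches, is shown below. The function running_accuracy and the sample counts are made up for illustration and are not taken from the run above.

```python
def running_accuracy(batch_correct, batch_sizes):
    """Return the cumulative accuracy after each step (illustrative helper)."""
    history = []
    correct_so_far = 0
    seen_so_far = 0
    for correct, size in zip(batch_correct, batch_sizes):
        # Accumulate counts over all batches seen so far in the epoch
        correct_so_far += correct
        seen_so_far += size
        history.append(correct_so_far / seen_so_far)
    return history

# Hypothetical counts of correct predictions in three batches of 64 samples
history = running_accuracy([32, 48, 56], [64, 64, 64])
print([round(a, 4) for a in history])  # [0.5, 0.625, 0.7083]
```

This is why the acc column changes smoothly within an epoch while the loss column can jump from step to step.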

- Evaluate the model with Model.evaluate

After training, use the Model.evaluate interface to evaluate the model. It computes and returns the evaluation results based on the loss and metric defined in Model.prepare. The return value is a dictionary (it can contain the loss and multiple evaluation metrics).

# Evaluate the model on the test set with evaluate

eval_result = model.evaluate(test_dataset, verbose=1)

print(eval_result)

Eval begin...

step 10000/10000 [==============================] - loss: 2.3842e-07 - acc: 0.9714 - 2ms/step

Eval samples: 10000

{'loss': [2.384186e-07], 'acc': 0.9714}

- Run inference with Model.predict

The Model.predict interface runs inference with the trained model so its results can be checked. The return value is a list:

# Run inference on the test set with predict
test_result = model.predict(test_dataset)
# Because the model has a single output, test_result has shape [1, 10000], where 10000 is the number of samples in the test dataset.
# Print the result for the first sample; the array holds the model's raw output score for each digit
print(test_result[0][0])
# Take one image from the test set
img, label = test_dataset[0]
# Print the inference result; argmax returns the index of the highest score, which is used as the predicted label
pred_label = test_result[0][0].argmax()
print('true label: {}, pred label: {}'.format(label[0], pred_label))
# Visualize the image with matplotlib
from matplotlib import pyplot as plt
plt.imshow(img[0])

Predict begin...

step 10000/10000 [==============================] - 2ms/step

Predict samples: 10000

[[ -6.512169 -6.7076845 0.5048795 1.6733919 -9.670526 -1.6352568

-15.833721 13.87411 -8.215239 1.5966017]]

true label: 7, pred label: 7
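The printed array contains raw scores, some of them negative, because the model ends with a Linear layer and has no softmax. A minimal plain-Python sketch of turning those scores into probabilities and a predicted label follows; the softmax helper is written here for illustration, and the scores are copied from the output above.

```python
import math

# Raw output scores for the first test image, copied from the log above
logits = [-6.512169, -6.7076845, 0.5048795, 1.6733919, -9.670526,
          -1.6352568, -15.833721, 13.87411, -8.215239, 1.5966017]

def softmax(values):
    # Subtract the maximum first for numerical stability
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax(logits)
# The predicted label is the index of the largest score (same as argmax)
pred_label = max(range(len(logits)), key=logits.__getitem__)
print(pred_label)  # 7
```

Softmax does not change which index is largest, so argmax on the raw scores and argmax on the probabilities give the same label.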

Besides the three APIs above, the paddle.Model class also provides other APIs related to training, evaluation, and inference:

- Model.train_batch: train on one batch of data;

- Model.eval_batch: evaluate on one batch of data;

- Model.predict_batch: run inference on one batch of data.

6.4 Train, evaluate, and run inference with the basic API

Model.prepare, Model.fit, Model.evaluate, and Model.predict are all wrappers built on the basic API.

6.4.1 Model training

This corresponds to Model.prepare and Model.fit in the high-level API, and generally includes the following steps:

- Load the training dataset, declare the model, and set the model instance to train mode
- Set the optimizer, the loss function, and the hyperparameters
- Set up a two-level nested loop for training, and do the following in the inner loop:
  - Get a batch of training data from the data reader DataLoader
  - Run one forward pass, that is, compute the model's predictions for the input data
  - Compute the loss between the predictions and the dataset labels
  - Compute the accuracy of the predictions against the dataset labels
  - Back-propagate the loss
  - Print the epoch, batch, loss value, accuracy, and other information
  - Perform one optimizer step, that is, update the parameters passed to the optimizer using the gradients of the current batch, according to the selected optimization algorithm
  - Clear the gradients held by the optimizer

# Load data with DataLoader
train_loader = paddle.io.DataLoader(train_dataset, batch_size=64, shuffle=True)
mnist.train()
# Set the number of epochs and the loss function
epochs, loss_fn = 5, paddle.nn.CrossEntropyLoss()
# Set up the optimizer
optim = paddle.optimizer.Adam(parameters=mnist.parameters())
for epoch in range(epochs):
    for batch_id, data in enumerate(train_loader()):
        x_data = data[0]  # Training data
        y_data = data[1]  # Training data labels
        predicts = mnist(x_data)  # Predicted results
        loss = loss_fn(predicts, y_data)
        acc = paddle.metric.accuracy(predicts, y_data)
        # The following back propagation, logging, parameter update, and gradient clearing are wrapped inside Model.fit()
        # Back propagation
        loss.backward()
        if (batch_id+1) % 900 == 0:
            print("epoch: {}, batch_id: {}, loss is: {}, acc is: {}".format(epoch, batch_id+1, loss.numpy(), acc.numpy()))
        optim.step()  # Update parameters
        optim.clear_grad()  # Clear gradients

epoch: 0, batch_id: 900, loss is: [0.06991791], acc is: [0.96875]

epoch: 1, batch_id: 900, loss is: [0.02878829], acc is: [1.]

epoch: 2, batch_id: 900, loss is: [0.07192856], acc is: [0.96875]

epoch: 3, batch_id: 900, loss is: [0.20411499], acc is: [0.96875]

epoch: 4, batch_id: 900, loss is: [0.13589518], acc is: [0.96875]

6.4.2 Model evaluation

Switch the model instance from train mode to eval mode. Evaluation needs no back propagation, optimizer parameter update, or gradient clearing.

# Load the test dataset
test_loader = paddle.io.DataLoader(test_dataset, batch_size=64, drop_last=True)
loss_fn = paddle.nn.CrossEntropyLoss()
# Set the model and all its sublayers to prediction mode. This only affects certain modules, such as Dropout and BatchNorm
mnist.eval()
# Disable dynamic graph gradient calculation
with paddle.no_grad():
    for batch_id, data in enumerate(test_loader()):
        x_data = data[0]  # Test data
        y_data = data[1]  # Test data labels
        predicts = mnist(x_data)  # Predicted results
        loss = loss_fn(predicts, y_data)
        acc = paddle.metric.accuracy(predicts, y_data)
        # Print information
        if (batch_id+1) % 30 == 0:
            print("batch_id: {}, loss is: {}, acc is: {}".format(batch_id+1, loss.numpy(), acc.numpy()))

batch_id: 30, loss is: [0.23106411], acc is: [0.953125]

batch_id: 60, loss is: [0.4329119], acc is: [0.90625]

batch_id: 90, loss is: [0.07333981], acc is: [0.96875]

batch_id: 120, loss is: [0.00324837], acc is: [1.]

batch_id: 150, loss is: [0.0857158], acc is: [0.96875]
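Switching to eval mode matters for this model because it contains Dropout(0.2). The plain-Python sketch below shows the difference, assuming the "upscale in train" convention, which I believe is Paddle's default: in training a fraction p of the values is zeroed and the rest are scaled by 1 / (1 - p), while in evaluation the input passes through unchanged. The function dropout and its rng argument are illustrative, not Paddle's implementation.

```python
import random

def dropout(values, p, training, rng):
    """Illustrative dropout on a list of floats (assumes upscale-in-train behaviour)."""
    if not training or p == 0.0:
        # Eval mode: dropout is a no-op
        return list(values)
    keep = 1.0 - p
    # Train mode: zero each value with probability p, scale survivors by 1 / keep
    return [v / keep if rng.random() >= p else 0.0 for v in values]

rng = random.Random(0)
activations = [1.0, 2.0, 3.0, 4.0]
print(dropout(activations, p=0.2, training=False, rng=rng))  # [1.0, 2.0, 3.0, 4.0]
train_out = dropout(activations, p=0.2, training=True, rng=rng)
print(train_out)  # each entry is either 0.0 or the input divided by 0.8
```

Forgetting to call eval() before evaluation would leave this random masking active and make the reported loss and accuracy noisier than they should be.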

6.4.3 Model inference

Model inference is relatively independent; it is a separate step after training and evaluation. It only requires the following steps:

- Load the test data to run inference on, and set the model to eval mode

- Read the test data and get the predictions

- Post-process the predictions

# Load the test dataset
test_loader = paddle.io.DataLoader(test_dataset, batch_size=64, drop_last=True)
# Set the model and all its sublayers to prediction mode
mnist.eval()
for batch_id, data in enumerate(test_loader()):
    # Take out the test data
    x_data = data[0]
    # Get the predictions
    predicts = mnist(x_data)
print("predict finished")

6.5 Combining the high-level API and the basic API; model deployment

Paddle's high-level API and basic API are not strictly separated and can be used together, which helps developers iterate on algorithms more conveniently. The sample code is as follows:

from paddle.vision.models import LeNet

class FaceNet(paddle.nn.Layer):
    def __init__(self):
        super().__init__()
        # Build with the high-level API
        self.backbone = LeNet()
        # Build with the basic API
        self.outLayer1 = paddle.nn.Sequential(
            paddle.nn.Linear(10, 512),
            paddle.nn.ReLU(),
            paddle.nn.Dropout(0.2)
        )
        self.outLayer2 = paddle.nn.Linear(512, 10)

    def forward(self, inputs):
        out = self.backbone(inputs)
        out = self.outLayer1(out)
        out = self.outLayer2(out)
        return out

# Wrap the network with the high-level API
model = paddle.Model(FaceNet())
# Define the optimizer with the basic API
optim = paddle.optimizer.Adam(learning_rate=1e-3, parameters=model.parameters())
# Configure the optimizer and loss function with the high-level API
model.prepare(optim, paddle.nn.CrossEntropyLoss(), metrics=paddle.metric.Accuracy())
# Train the network with the high-level API
model.fit(train_dataset, test_dataset, epochs=5, batch_size=64, verbose=1)

This section described how to train, evaluate, and run inference with Paddle's high-level API, and broke down the corresponding basic API implementation. Note that the inference here is only used to verify model quality. In production, you can use the inference deployment tools provided by Paddle to meet deployment and release requirements for server, mobile, web, and mini-program environments. For details, see the Inference deployment chapter.

7. Model saving and loading

7.1 Introduction to the save/load system

See "Model saving and loading" and "Model saving FAQs".

In Paddle 2.1, the following systems are available for saving and loading models and parameters:

- Basic API save/load system (6 interfaces)
  - Training and tuning scenarios: paddle.save/load is recommended for saving and loading models
  - Inference deployment scenarios: paddle.jit.save/load (dynamic graph) and paddle.static.save/load_inference_model (static graph) are recommended for saving and loading models
- High-level API save/load system:
  - paddle.Model.fit (the training interface, which also saves parameters)
  - paddle.Model.save, paddle.Model.load

7.2 Saving and loading models and parameters in training and tuning scenarios

7.2.1 Saving and loading dynamic graph parameters

- If you only need to save or load the parameters of the model, use paddle.save/load together with the state_dict of the Layer and the Optimizer.
- state_dict is the carrier of an object's persistable parameters: each key of the dict is a parameter name, and each value is the parameter's actual numpy array value.
- When saving parameters, first get the state_dict of the target object (Layer or Optimizer), then save the state_dict to disk.
- When loading parameters, first load the saved state_dict from disk, then configure it into the target object through the set_state_dict method.

Taking LeNet as an example, models are saved and loaded as follows:

import numpy as np

import paddle

import paddle.nn as nn

import paddle.optimizer as opt

# Define the model and the optimizer
model = paddle.vision.models.LeNet(num_classes=10)
adam = opt.Adam(learning_rate=0.001, parameters=model.parameters())
# PATH1 and PATH2 are placeholders for the file paths you choose
# Save the model parameters and the optimizer parameters:
# first get the state_dict of the target object (Layer or Optimizer), then save the state_dict to disk
paddle.save(model.state_dict(), PATH1)
paddle.save(adam.state_dict(), PATH2)
# Load the model parameters and the optimizer parameters:
# first load the saved state_dict from disk, then configure it into the target object through set_state_dict
model.set_state_dict(paddle.load(PATH1))  # Can also be written in two steps, starting with model_state_dict = paddle.load(PATH1)
adam.set_state_dict(paddle.load(PATH2))  # Same as above; can also be written in two steps

At this point both the model parameters and the optimizer parameters have been saved (the scheduler state, if there is one, is saved as well), so after loading they can be used to resume or incrementally continue training.

7.3 Saving and loading static graph models and parameters

Again taking LeNet as an example:

- Save parameters: use paddle.save/load together with the model's state_dict, similar to the dynamic graph case above
- Save the entire model: in addition to the parameters, paddle.save is also used to save the model structure (Program)

import numpy as np

import paddle

import paddle.nn as nn

import paddle.optimizer as opt

# Define the model and the optimizer
model = paddle.vision.models.LeNet(num_classes=10)
adam = opt.Adam(learning_rate=0.001, parameters=model.parameters())
paddle.save(model.state_dict(), "temp/model.pdparams")  # Save the model parameters
paddle.save(model, "temp/model.pdmodel")  # Save the model structure
# If only the state_dict was saved, just load the state_dict. If the model structure was saved as well, load the model structure first.
prog = paddle.load("temp/model.pdmodel")  # Skip this step if the model structure was not saved
state_dict = paddle.load("temp/model.pdparams")
prog.set_state_dict(state_dict)

7.4 Common questions

Which API results can paddle.load load?

Besides models saved with paddle.save, paddle.load can also load the state_dict stored by other save-related APIs, but the form of the path parameter differs by scenario:

- Loading results saved by paddle.static.save or paddle.Model().save(training=True): path must be the full file name, for example model.pdparams or model.pdopt;

- Loading results saved by paddle.jit.save, paddle.static.save_inference_model or paddle.Model().save(training=False): path must be a path prefix, for example model/mnist; paddle.load parses the state_dict from mnist.pdmodel and mnist.pdiparams and returns it.

- Loading results saved by the paddle 1.x APIs paddle.fluid.io.save_inference_model or paddle.fluid.io.save_params/save_persistables: path must be a directory, for example model, where model is a folder path.

Note that when a state_dict is loaded from the results of static graph APIs such as paddle.static.save or paddle.static.save_inference_model, the structured variable names that parameters have in dynamic graph mode cannot be restored. When applying such a loaded state_dict to the current Layer, set the use_structured_name parameter of Layer.set_state_dict to False.

7.5 Saving and loading models & parameters for training and deployment scenarios

Please refer to the paddle documentation.

Eight 、 Advanced paddle development usage

For the following topics, please refer to the paddle documentation.

8.1 Model visualization

8.2 Models and layers in Paddle

8.3 Custom Loss, Metric and Callback

8.4 Distributed training

边栏推荐

- 利用Scanorama高效整合异质单细胞转录组

- Shutter control layout

- Vulnerability recurrence - redis vulnerability summary

- 【机器学习】线性回归预测

- Shardingsphere-proxy-5.0.0 implementation of capacity range partition (V)

- skywalking 安装部署实践

- What should I pay attention to in the interview of artificial intelligence technology?

- Interview notes for Android outsourcing workers for 3 years. I still need to go to a large factory to learn and improve. As an Android programmer

- 一次 MySQL 误操作导致的事故,「高可用」都顶不住了!

- 抓取开机logcat

猜你喜欢

Accompanist组件库中文指南 - Glide篇,劲爆

毕业设计-论文写作笔记【毕设题目类型、论文写作细节、毕设资料】

CVPR2022 | 可精简域适应

Andorid development art exploration notes (2), cross platform applet development framework

小猫爪:PMSM之FOC控制15-MRAS法

分别用SVM、贝叶斯分类、二叉树、CNN实现手写数字识别

Mip-NeRF:抗混叠的多尺度神经辐射场ICCV2021

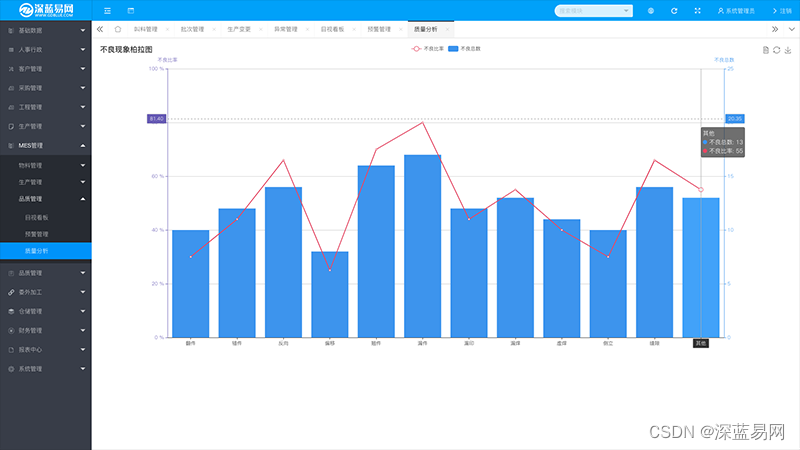

智能制造时代下,MES管理系统需要解决哪些问题

Andorid 开发艺术探索笔记(2),跨平台小程序开发框架

Data management: business data cleaning and implementation scheme

随机推荐

【ICCV Workshop 2021】基于密度图的小目标检测:Coarse-grained Density Map Guided Object Detection in Aerial Images

C语言:递归实现N的阶乘

Building a digital software factory -- panoramic interpretation of one-stop Devops platform

Experience summary of 9 Android interviews, bytes received, Ali, advanced Android interview answer

C语言:利用自定义函数排序

使用递归形成多级目录树结构,附带可能是全网最详细注释。

实时计算框架:Flink集群搭建与运行机制

持续测试和质量保障的关系

股票网上开户安全吗?需要满足什么条件?

【CVPR 2022】高分辨率小目标检测:Cascaded Sparse Query for Accelerating High-Resolution Smal Object Detection

Common WebGIS Map Libraries

skywalking 安装部署实践

杂乱的知识点

Salesforce batch apex batch processing (V) asyncapexjob intelligence

[CVPR 2020 oral] a physics based noise formation model for extreme low light raw denoising

[digital signal] spectrum refinement based on MATLAB analog window function [including Matlab source code 1906]

【小程序】编译预览小程序时,出现-80063错误提示

[applet] realize the effect of double column commodities

钟珊珊:被爆锤后的工程师会起飞|OneFlow U

牛学长周年庆活动:软件大促限时抢,注册码免费送!