
Convolutional neural networks for machine learning -- an introduction to CNN

2022-06-28 16:13:00 【Hua Weiyun】

Convolutional neural networks (CNN)

1. Introduction to convolutional neural networks

Convolutional neural networks (CNNs) are a class of feedforward neural networks that use convolution operations and have a deep structure. They are one of the representative algorithms of deep learning.

Common CNN architectures include LeNet-5, VGGNet, GoogLeNet, ResNet, DenseNet, MobileNet, etc.

Main application areas of CNNs: image classification, image segmentation, object detection, natural language processing, and other fields.

2. Basic structure and principles of convolutional neural networks

Basic structure of a convolutional neural network

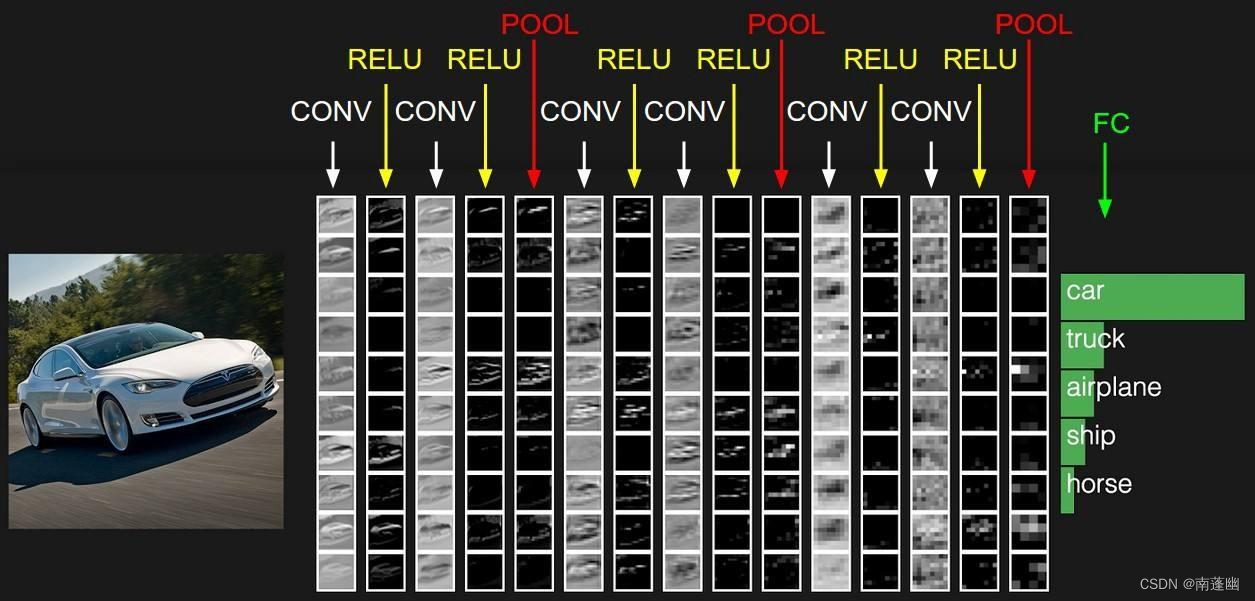

Basic CNN structure: INPUT -> Convolution -> Activation -> Pooling -> Fully connected -> OUTPUT

Convolution layer

The input image is convolved with convolution kernels (filters) to extract higher-level features of the image.
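A minimal pure-Python sketch of this operation, assuming a single-channel image, stride 1 and no padding. Like most deep learning libraries, it does not flip the kernel (strictly speaking, this is cross-correlation). The image and the vertical-edge kernel are made-up illustrative values.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Element-wise product of the kernel and the window at (i, j), then sum.
            row.append(sum(image[i + u][j + v] * kernel[u][v]
                           for u in range(kh) for v in range(kw)))
        feature_map.append(row)
    return feature_map

# Dark left half (0), bright right part (9): a vertical edge.
image = [[0, 0, 0, 9, 9] for _ in range(4)]
# Vertical-edge kernel: responds where intensity increases from left to right.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
print(conv2d(image, kernel))  # [[0, 27, 27], [0, 27, 27]]
```

The output is zero over the flat region and large wherever the window covers the edge, which is the sense in which a kernel "extracts" a feature.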

Parameters of the convolution

1. Depth: the number of convolution kernels, also called the number of neurons; it determines the number of output feature maps.

2. Stride: the distance the kernel moves at each step; it determines how many steps the kernel takes to reach the edge of the input.

3. Padding: the number of layers of zeros added around the outer edge of the input.
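How these three parameters shape the output can be summarized with the usual formula out = (W - K + 2P) // S + 1, where W is the input size, K the kernel size, P the padding and S the stride, while the output depth equals the number of kernels. A small sketch, assuming square kernels and the same stride and padding in both directions:

```python
def conv_output_size(size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel_size) // stride + 1

def conv_output_shape(height, width, depth, kernel_size, stride=1, padding=0):
    """Output shape (depth, height, width); depth is the number of kernels."""
    return (depth,
            conv_output_size(height, kernel_size, stride, padding),
            conv_output_size(width, kernel_size, stride, padding))

# 3x3 kernels on a 5x5 input:
print(conv_output_size(5, 3))                        # 3 (no padding, stride 1)
print(conv_output_size(5, 3, padding=1))             # 5 (padding 1 keeps the size)
print(conv_output_size(5, 3, stride=2, padding=1))   # 3 (stride 2 roughly halves it)
# Six 5x5 kernels on a 32x32 input:
print(conv_output_shape(32, 32, depth=6, kernel_size=5))  # (6, 28, 28)
```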

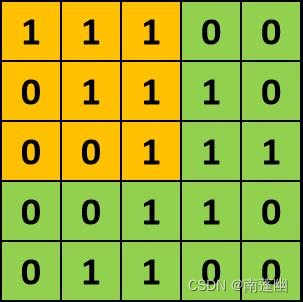

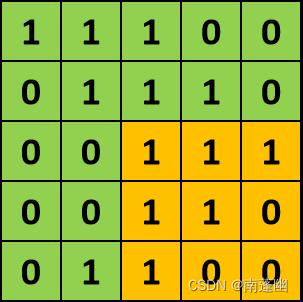

Convolution process

Two main characteristics of convolutional networks

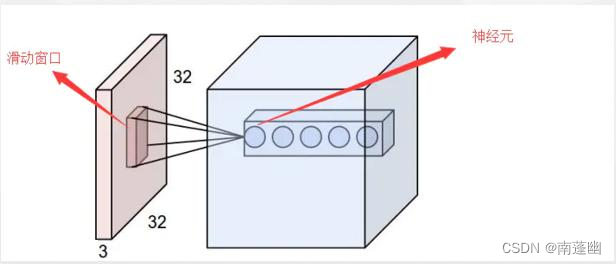

1. Local connectivity (local receptive fields)

2. Weight sharing
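Both properties are easiest to appreciate by counting weights. The sketch below assumes a 32×32×3 input mapped to six 28×28 feature maps with 5×5 kernels (illustrative sizes, biases ignored) and compares a fully connected layer, a layer with local connectivity only, and a convolution with both local connectivity and weight sharing.

```python
in_h, in_w, in_c = 32, 32, 3                 # input feature map
k, out_c = 5, 6                              # 5x5 kernels, 6 output channels
out_h, out_w = in_h - k + 1, in_w - k + 1    # 28 x 28 (stride 1, no padding)

n_in = in_h * in_w * in_c                    # 3072 input values
n_out = out_h * out_w * out_c                # 4704 output values

# Fully connected: every output unit is connected to every input value.
dense = n_out * n_in
# Local connectivity only: each output unit sees one k x k x in_c window,
# but every position still has its own weights.
local = n_out * k * k * in_c
# Local connectivity + weight sharing: one k x k x in_c kernel per output channel.
shared = out_c * k * k * in_c

print(dense, local, shared)  # 14450688 352800 450
```

Local connectivity removes most of the weights, and weight sharing makes the count independent of the image size.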

Activation layer: the ReLU function
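ReLU is defined as ReLU(x) = max(0, x) and is applied to every element of the feature map. A minimal sketch on a nested list standing in for a feature map:

```python
def relu(x):
    """ReLU(x) = max(0, x): keep positive values, set negative values to zero."""
    return max(0.0, x)

def relu_map(feature_map):
    """Apply ReLU element-wise to a 2D feature map."""
    return [[relu(v) for v in row] for row in feature_map]

print(relu_map([[-2.0, 1.5], [0.0, -0.3]]))  # [[0.0, 1.5], [0.0, 0.0]]
```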

Pooling layer

Pooling downsamples (compresses) the input feature maps. On the one hand, this makes the feature maps smaller, which reduces the network's computational complexity and helps control overfitting; on the other hand, it compresses the features and keeps the most important ones.

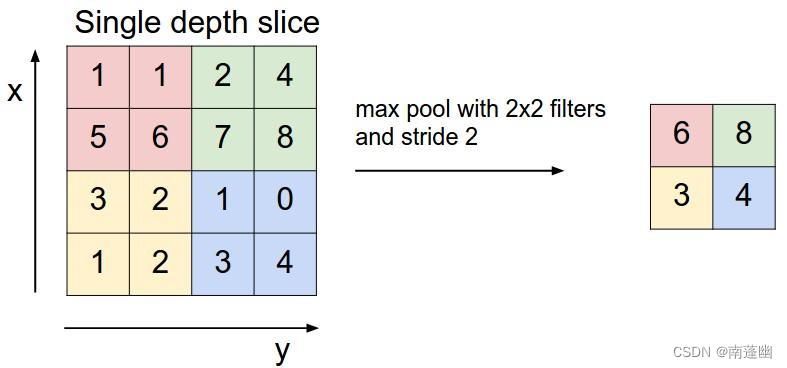

The pooling window is usually 2×2, and there are two common operations:

- Max pooling: take the maximum of the 4 values. This is the most common pooling method.

- Mean (average) pooling: take the mean of the 4 values.
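Both operations can be sketched in a few lines of Python, assuming a 2×2 window with stride 2 (non-overlapping windows) and a made-up 4×4 feature map:

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """2D pooling with a size x size window; mode is "max" or "mean"."""
    out = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[i + u][j + v] for u in range(size) for v in range(size)]
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        out.append(row)
    return out

fmap = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 4, 3, 8],
]
print(pool2d(fmap, mode="max"))   # [[6, 4], [7, 9]]
print(pool2d(fmap, mode="mean"))  # [[3.75, 2.25], [3.5, 5.0]]
```

Each 2×2 window collapses to a single value, so the 4×4 map becomes 2×2 with no learnable parameters involved.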

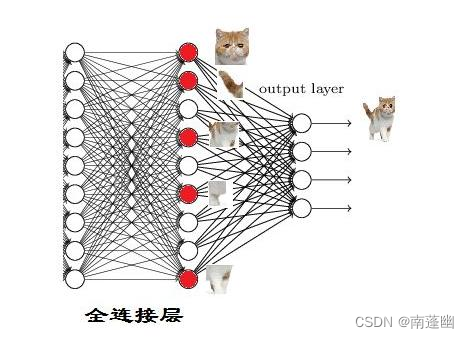

Fully connected layer

Connects all the features and sends the output values to the classifier, which performs the classification.
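A fully connected layer computes y = Wx + b on the flattened features. The sketch below assumes a softmax classifier on top (a common choice that the text does not specify); the features, weights and bias are made-up values.

```python
import math

def linear(x, weights, bias):
    """Fully connected layer: y[k] = sum_i weights[k][i] * x[i] + bias[k]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def softmax(scores):
    """Turn raw scores into probabilities (subtract the max for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical example: a flattened 2x2 feature map -> scores for 3 classes.
features = [0.5, 1.0, 0.0, 2.0]
weights = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
]
bias = [0.0, 0.0, 0.5]
scores = linear(features, weights, bias)
probs = softmax(scores)
print(scores)                    # [0.5, 3.0, 0.5]
print(probs.index(max(probs)))   # 1 (predicted class)
```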

3. Implementing convolution in PyTorch

Convolution layer

torch.nn.Conv2d()

Parameter description

in_channels: number of input channels (depth)

out_channels: number of output channels (depth)

kernel_size: size of the filter (convolution kernel)

stride: step size of the sliding filter

padding: amount of zero padding added to each side of the input; the default is 0

bias: if True (the default), the layer learns an additive bias

groups: controls grouped convolution; the default is 1, i.e. no grouping

dilation: spacing between kernel elements (dilated convolution); the default is 1
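To see how these parameters interact, here is a pure-Python sketch that follows the output-size formula given in the `torch.nn.Conv2d` documentation, plus the parameter count implied by its weight shape (out_channels, in_channels / groups, kH, kW) and bias shape (out_channels). It assumes square kernels and does not require PyTorch.

```python
def conv2d_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output size along one dimension, following the torch.nn.Conv2d formula."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

def conv2d_params(in_channels, out_channels, kernel_size, groups=1, bias=True):
    """Learnable parameters: weight (out, in/groups, k, k) plus an optional bias (out,)."""
    assert in_channels % groups == 0 and out_channels % groups == 0
    weights = out_channels * (in_channels // groups) * kernel_size * kernel_size
    return weights + (out_channels if bias else 0)

print(conv2d_out(32, 3, padding=1))              # 32
print(conv2d_out(32, 3, stride=2, padding=1))    # 16
print(conv2d_out(32, 3, padding=2, dilation=2))  # 32 (a dilated 3x3 covers 5x5)
print(conv2d_params(64, 128, 3))                 # 73856
print(conv2d_params(64, 128, 3, groups=4))       # 18560
```

With PyTorch installed, the same numbers can be checked with `sum(p.numel() for p in torch.nn.Conv2d(64, 128, 3).parameters())`. Note how `groups=4` divides the weight count by four.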

Activation layer

torch.nn.ReLU()

Parameter description

inplace: whether to modify the input tensor directly instead of allocating a new one; the default is False

Pooling layer

torch.nn.MaxPool2d()

torch.nn.AvgPool2d()

Parameter description

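kernel_size: size of the pooling window

stride: step size of the window; the default is kernel_size

padding: amount of implicit padding added to each side of the input; the default is 0

dilation: spacing between elements in the window; the default is 1 (only MaxPool2d has this parameter)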

Fully connected layer

torch.nn.Linear()

Parameter description

in_features: size of each input sample;

out_features: size of each output sample;

bias: if True (the default), the layer learns an additive bias

4. Introduction to classical convolutional neural networks

LeNet-5

The LeNet-5 convolutional neural network comes from the paper "Gradient-Based Learning Applied to Document Recognition", published by Yann LeCun et al. in 1998. It is a convolutional neural network for handwritten digit recognition.

LeNet-5 is one of the most famous CNN architectures and the pioneering work of convolutional neural networks.
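The layer sizes of LeNet-5 can be traced with the convolution output-size formula. The sketch below assumes the simplified variant commonly used in modern tutorials (32×32 grayscale input, two 5×5 convolutions each followed by 2×2 pooling, then fully connected layers 120 -> 84 -> 10), not the exact 1998 design, which connected its second convolutional layer to only a subset of the input maps.

```python
def conv_out(size, k, stride=1, padding=0):
    return (size + 2 * padding - k) // stride + 1

size, channels = 32, 1
params = 0
for out_channels in (6, 16):
    params += out_channels * channels * 5 * 5 + out_channels  # conv weights + biases
    size = conv_out(size, 5)                                  # 5x5 conv, stride 1
    channels = out_channels
    size = conv_out(size, 2, stride=2)                        # 2x2 pooling, no params
    print(f"after conv + pool: {channels} x {size} x {size}")

features = channels * size * size     # flattened: 16 * 5 * 5 = 400
for out_features in (120, 84, 10):
    params += features * out_features + out_features         # FC weights + biases
    features = out_features

print("total parameters:", params)    # 61706
```

Most of the parameters sit in the first fully connected layer (400 × 120 weights), a pattern that larger networks such as AlexNet and VGG share.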

AlexNet

In 2012, AlexNet burst onto the scene. Using a convolutional neural network, it won the ImageNet 2012 image recognition challenge by a very large margin.

The AlexNet model consists of 5 convolutional layers, 3 pooling layers, and 3 fully connected layers. Its structure is similar to LeNet's, but it uses more convolutional layers and a much larger parameter space to fit the large-scale ImageNet dataset. It marks the dividing line between shallow and deep neural networks.
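A quick check of AlexNet's first stage with the same output-size formula. The layer values here are the commonly cited ones and are an assumption, since the text above gives no layer sizes: a 227×227×3 input, 96 kernels of 11×11 with stride 4, then overlapping 3×3 max pooling with stride 2.

```python
def out_size(size, k, stride=1, padding=0):
    return (size + 2 * padding - k) // stride + 1

conv1 = out_size(227, 11, stride=4)   # large kernel + large stride shrink the map fast
pool1 = out_size(conv1, 3, stride=2)  # overlapping pooling: window 3 > stride 2
conv1_params = 96 * 3 * 11 * 11 + 96  # 96 kernels of 11x11x3, plus biases
print(conv1, pool1, conv1_params)     # 55 27 34944
```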

Introduction to the CIFAR-10 dataset

CIFAR-10 is a small dataset for recognizing common objects, compiled by Alex Krizhevsky and Ilya Sutskever, students of Hinton. It contains RGB color images in 10 classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. Each image is 32×32, and the dataset contains 50,000 training images and 10,000 test images.
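A sketch of reading one image, assuming the binary version of the dataset as described on its download page: each record is 1 label byte (0 to 9) followed by 3072 pixel bytes, stored as the 1024 red values, then 1024 green, then 1024 blue, each plane in row-major order. The record below is synthetic, not real data.

```python
CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
           "dog", "frog", "horse", "ship", "truck"]
SIDE = 32
RECORD_SIZE = 1 + 3 * SIDE * SIDE  # 1 label byte + 3072 pixel bytes = 3073

def parse_record(record: bytes):
    """Split one record into (class name, [R, G, B] planes of 32 rows x 32 pixels)."""
    if len(record) != RECORD_SIZE:
        raise ValueError(f"expected {RECORD_SIZE} bytes, got {len(record)}")
    label = CLASSES[record[0]]
    planes = []
    for c in range(3):  # red, green and blue planes are stored one after another
        start = 1 + c * SIDE * SIDE
        plane = [list(record[start + r * SIDE: start + (r + 1) * SIDE])
                 for r in range(SIDE)]
        planes.append(plane)
    return label, planes

# Synthetic record: label 3 ("cat"), red = 255, green = 128, blue = 0 everywhere.
fake = bytes([3]) + bytes([255]) * 1024 + bytes([128]) * 1024 + bytes([0]) * 1024
label, (red, green, blue) = parse_record(fake)
print(label, red[0][0], green[31][31], blue[10][5])  # cat 255 128 0
```

A batch file of this format is simply many such records back to back, so it can be read in chunks of `RECORD_SIZE` bytes.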

VGGNet

VGGNet is a deep convolutional network architecture proposed by the Visual Geometry Group (VGG) at the University of Oxford. It took second place in the ILSVRC 2014 classification task with an error rate of 7.32%.

VGGNet explored the relationship between the depth of a convolutional neural network and its performance. It successfully built convolutional networks 16 to 19 layers deep and showed that increasing depth improves the network's final performance, significantly reducing the error rate. It is also highly extensible and generalizes well when transferred to other image data. VGG is still used today to extract image features.

VGG can be seen as a deeper version of AlexNet: both consist of conv layers followed by FC layers.
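VGG gains depth mainly by stacking small 3×3 convolutions, an argument made in the VGG paper but not spelled out above: a stack of 3×3 layers sees the same region as one larger kernel while using fewer weights and adding more nonlinearities. A sketch of that arithmetic, assuming stride 1 and C input and output channels per layer (biases ignored):

```python
def stacked_receptive_field(num_layers, k=3):
    """Receptive field of num_layers stacked k x k convolutions (stride 1)."""
    return num_layers * (k - 1) + 1

def stacked_conv_weights(k, channels, num_layers=1):
    """Weights (no biases) of num_layers k x k convs, each mapping C -> C channels."""
    return num_layers * k * k * channels * channels

C = 256
for n in (2, 3):
    rf = stacked_receptive_field(n)
    print(f"{n} x 3x3: receptive field {rf}x{rf}, "
          f"{stacked_conv_weights(3, C, n)} weights vs "
          f"{stacked_conv_weights(rf, C)} for a single {rf}x{rf}")
```

Three stacked 3×3 layers need 27C² weights, compared with 49C² for a single 7×7 layer covering the same area.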

GoogLeNet

GoogLeNet is a deep learning architecture proposed by a Google team in 2014; it won the ILSVRC 2014 classification task.

GoogLeNet was the first classic model to use a parallel network structure, which was a pioneering step in the development of deep learning.

The basic building block of GoogLeNet is Inception, a parallel network block. Through continued iterative optimization, it evolved into five versions: Inception-v1, Inception-v2, Inception-v3, Inception-v4, and Inception-ResNet.

The Inception family evolved by optimizing the block structure to improve the model's generalization ability while reducing its number of parameters.
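A sketch of how a parallel Inception block combines its branches, using the channel counts of the "inception (3a)" module from the GoogLeNet paper (192 input channels). Because all branches keep the same spatial size, their outputs are concatenated along the channel dimension; the second part counts weights of the 5×5 branch (biases ignored) to show why 1×1 "reduce" convolutions cut parameters.

```python
in_channels = 192
# Output channels of each parallel branch: 1x1 conv, 3x3 conv (after a 1x1
# reduction to 96), 5x5 conv (after a 1x1 reduction to 16), and 3x3 max
# pooling followed by a 1x1 projection.
branches = {"1x1": 64, "3x3": 128, "5x5": 32, "pool_proj": 32}

# Outputs are stacked along the channel axis, so the channel counts add up.
out_channels = sum(branches.values())
print("output channels:", out_channels)   # 256

# Weights of the 5x5 branch with and without the 1x1 reduction layer.
direct = in_channels * 32 * 5 * 5                      # 5x5 conv straight from 192 channels
reduced = in_channels * 16 * 1 * 1 + 16 * 32 * 5 * 5   # 1x1 down to 16, then 5x5
print(direct, reduced)                    # 153600 15872
```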

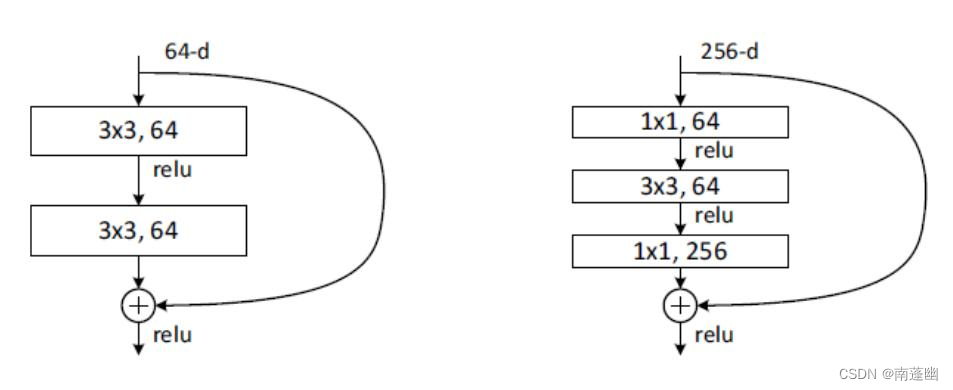

ResNet

ResNet (residual network) was proposed in 2015 by Kaiming He and colleagues at Microsoft Research. That year it took first place in both the ImageNet classification and detection tasks, as well as first place in detection and segmentation on the COCO dataset.

It uses a connection called a "shortcut connection". As the name suggests, the shortcut lets the input take a short path that bypasses some layers.

There are two kinds of ResNet block: a two-layer structure and a three-layer structure.
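A minimal sketch of both ideas, with plain Python lists standing in for tensors. The residual block computes y = F(x) + x through an identity shortcut (the ResNet paper also uses projection shortcuts when shapes differ, which this sketch omits). The weight counts (biases ignored) compare the two-layer block on 64 channels with the three-layer "bottleneck" block on 256 channels, the pairing the ResNet paper uses to show they have similar cost.

```python
def residual_block(x, f):
    """y = F(x) + x: the shortcut adds the input back onto the stacked layers' output."""
    fx = f(x)
    assert len(fx) == len(x), "an identity shortcut needs matching shapes"
    return [a + b for a, b in zip(fx, x)]

# If the layers learn F(x) = 0, the block simply passes its input through.
print(residual_block([1.0, -2.0, 3.0], lambda v: [0.0] * len(v)))  # [1.0, -2.0, 3.0]

def conv_weight_count(k, c_in, c_out):
    return k * k * c_in * c_out

basic = 2 * conv_weight_count(3, 64, 64)            # two-layer block: 3x3, 3x3
bottleneck = (conv_weight_count(1, 256, 64)         # three-layer block: 1x1 reduce,
              + conv_weight_count(3, 64, 64)        # 3x3,
              + conv_weight_count(1, 64, 256))      # 1x1 restore
print(basic, bottleneck)  # 73728 69632
```

The identity case is why very deep residual networks remain trainable: a block that is not useful can learn to approximate F(x) = 0 instead of having to learn an identity mapping from scratch.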

MobileNet

MobileNet is a lightweight CNN proposed by Google in 2017, designed for mobile and embedded devices.

The basic unit of MobileNet is the depthwise separable convolution, which factorizes a standard convolution into two smaller operations: a depthwise convolution and a pointwise convolution.
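The savings can be estimated by counting multiply-adds, as the MobileNet paper does. The sketch assumes a k×k kernel, M input channels, N output channels, an f×f output map, stride 1 and "same" padding; the sizes chosen are illustrative. The ratio of the two costs simplifies to 1/N + 1/k².

```python
def standard_cost(k, m, n, f):
    """Multiply-adds of a standard k x k conv: M -> N channels on an f x f map."""
    return k * k * m * n * f * f

def separable_cost(k, m, n, f):
    """Multiply-adds of a depthwise separable conv with the same input and output."""
    depthwise = k * k * m * f * f  # one k x k filter per input channel
    pointwise = m * n * f * f      # 1x1 conv mixes the M channels into N
    return depthwise + pointwise

k, m, n, f = 3, 512, 512, 14
ratio = separable_cost(k, m, n, f) / standard_cost(k, m, n, f)
print(round(ratio, 4), round(1 / n + 1 / k**2, 4))  # 0.1131 0.1131
```

With 3×3 kernels the separable version costs roughly 8 to 9 times less, which is what makes MobileNet practical on mobile hardware.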

边栏推荐

- NFT质押LP流动性挖矿系统开发详情

- The world has embraced Web3.0 one after another, and many countries have clearly begun to seize the initiative

- Etcd可视化工具:Kstone简介(一)

- What is the difference between treasury bonds and time deposits

- 面试官: 线程池是如何做到线程复用的?有了解过吗,说说看

- 【Spock】处理 Non-ASCII characters in an identifier

- among us私服搭建

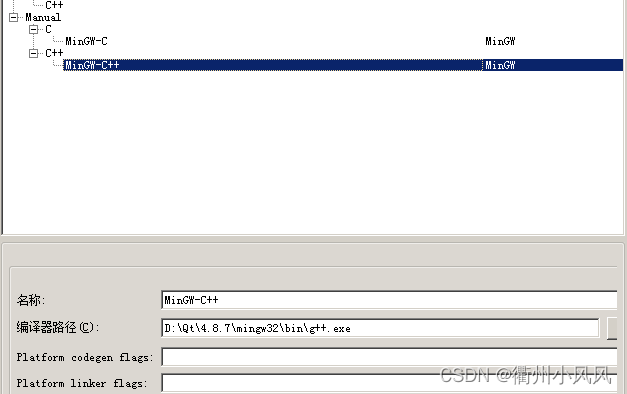

- QT create 5.0.3 configuring qt4.8.7

- Android和eclipse和MySQL上传图片并获取

- 10年测试经验,在35岁的生理年龄面前,一文不值

猜你喜欢

IPDK — Overview

抖音实战~我关注的博主列表、关注、取关

Geoffrey Hinton: my 50 years of in-depth study and Research on mental skills

Installation and use of Jenkins

wallys/DR7915-wifi6-MT7915-MT7975-2T2R-support-OpenWRT-802.11AX-supporting-MiniPCIe-Module

![[leetcode] 13. Roman numeral to integer](/img/3c/7c57d0c407f5302115f69f44b473c5.png)

[leetcode] 13. Roman numeral to integer

Tiktok actual battle ~ list of bloggers I follow, follow and check

Visual studio 2019 software installation package and installation tutorial

Qt create 5.0.3 配置Qt4.8.7

逆向调试入门-PE结构详解02/07

随机推荐

Openharmony - detailed source code of Kernel Object Events

全球陆续拥抱Web3.0,多国已明确开始抢占先机

3. caller service call - dapr

数字藏品热潮之下,你必须知道的那些事儿

10:00面试,10:02就出来了 ,问的实在是太...

面试官: 线程池是如何做到线程复用的?有了解过吗,说说看

逆向调试入门-PE结构详解02/07

No win32/com in vs2013 help document

Visual Studio 2010 编绎Qt5.6.3

What is the maximum number of concurrent TCP connections for a server? 65535?

wallys/DR7915-wifi6-MT7915-MT7975-2T2R-support-OpenWRT-802.11AX-supporting-MiniPCIe-Module

【高并发基础】MySQL索引优化

Traffic management and control of firewall Foundation

首次失败后,爱美客第二次冲刺港交所上市,财务负责人变动频繁

Navicat 15 for MySQL

【初学者必看】vlc实现的rtsp服务器及转储H264文件

[Spock] process non ASCII characters in an identifier

5 minutes to make a bouncing ball game

Geoffrey Hinton:我的五十年深度学习生涯与研究心法

How can the sports app keep the end-to-side background alive to make the sports record more complete?