[Graduation project] Research on sentiment analysis based on semi-supervised learning and ensemble learning

2022-06-22 14:54:00 【God, stop it】

Summary: research on sentiment analysis based on semi-supervised learning and ensemble learning

Data: text/JDMilk.arff [tf-idf]

Baseline

7% as the training set, 30% as the test set

SSL algorithms

7% as the labeled training set

63% as the unlabeled dataset, 30% as the test set
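The 7% / 63% / 30% split can be sketched in Python 3 as follows. This is only an illustration: the function name `split_dataset`, the fixed seed, and the choice to shuffle indices before cutting are assumptions, not details taken from the project.

```python
import random

def split_dataset(n_samples, labeled_frac=0.07, unlabeled_frac=0.63, seed=0):
    """Return (labeled, unlabeled, test) index lists; the test set gets the remainder."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # assumed: random assignment of samples
    n_labeled = int(round(n_samples * labeled_frac))
    n_unlabeled = int(round(n_samples * unlabeled_frac))
    labeled = indices[:n_labeled]
    unlabeled = indices[n_labeled:n_labeled + n_unlabeled]
    test = indices[n_labeled + n_unlabeled:]
    return labeled, unlabeled, test

labeled_idx, unlabeled_idx, test_idx = split_dataset(1000)
print(len(labeled_idx), len(unlabeled_idx), len(test_idx))
```

For the baseline, only the labeled and test parts would be used; the SSL algorithms additionally draw on the unlabeled part.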

Reference: [Gao Wei, female, master's student] A semi-supervised sentiment classification method based on random subspace self-training

Splitting the training set and test set: four-fold cross-validation

Specifically: divide the dataset into four parts and take turns using 3 parts as training data and 1 part as test data. The average accuracy over the four runs is used as the estimate of the algorithm's accuracy. Obviously, this way of estimating accuracy is expensive in time.
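The fold rotation can be sketched in Python 3 as below. The helper names `k_fold_indices` and `cross_validate` and the toy majority-class evaluator are hypothetical; in the project the evaluator would train and score one of the classifiers instead.

```python
import random

def k_fold_indices(n_samples, k=4, seed=0):
    """Yield (train_idx, test_idx) pairs; each fold is the test set exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]

def cross_validate(evaluate, n_samples, k=4):
    """evaluate(train_idx, test_idx) returns an accuracy; the mean over folds is returned."""
    scores = [evaluate(train, test) for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)

# Toy usage: a majority-class "classifier" on made-up labels.
labels = [i % 2 for i in range(20)]

def majority_baseline(train_idx, test_idx):
    train_labels = [labels[i] for i in train_idx]
    majority = max(set(train_labels), key=train_labels.count)
    return sum(labels[i] == majority for i in test_idx) / len(test_idx)

print(cross_validate(majority_baseline, len(labels)))
```

Every model is trained k times, which is where the extra running time comes from.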

Evaluation metric: accuracy (Accuracy)

Environment configuration:

Python 2.7

scikit-learn, numpy, scipy

Docker

Algorithms:

Choice of classifiers for supervised learning (SL)

Selection criterion: the classifier must be able to output posterior probabilities

1. Support vector machine (SVC)

2. Naive Bayes with the multinomial distribution assumption (MultinomialNB)

Semi-supervised learning (SSL)

1. Self-Training

The most basic semi-supervised learning algorithm, but it goes wrong easily: it gave no improvement at all and even made results worse.

Assumption: One's own high-confidence predictions are correct.

The main idea is to train a classifier on a small set of labeled samples, then classify the unlabeled samples, select the samples with the highest confidence (posterior probability), label them automatically and add them to the labeled set, and retrain the classifier iteratively.
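A minimal Python 3 sketch of this loop is shown below. `CentroidClassifier` is a hypothetical toy stand-in for SVC or MultinomialNB (its `predict_proba` takes a single sample), and `per_round` and `max_rounds` are assumed parameters; the project's actual selection rule and stopping criterion are not given in these notes.

```python
import math

class CentroidClassifier:
    """Toy stand-in for a classifier that outputs posterior probabilities."""

    def fit(self, X, y):
        self.classes_ = sorted(set(y))
        self.centroids_ = []
        for c in self.classes_:
            rows = [x for x, label in zip(X, y) if label == c]
            self.centroids_.append([sum(col) / len(rows) for col in zip(*rows)])
        return self

    def predict_proba(self, x):
        # Softmax over negative squared distances to the class centroids.
        scores = [-sum((a - b) ** 2 for a, b in zip(x, c)) for c in self.centroids_]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        return [e / sum(exps) for e in exps]

def self_train(X_labeled, y_labeled, X_unlabeled, per_round=1, max_rounds=10):
    X_l, y_l, pool = list(X_labeled), list(y_labeled), list(X_unlabeled)
    clf = CentroidClassifier().fit(X_l, y_l)
    for _ in range(max_rounds):
        if not pool:
            break
        # Score every unlabeled sample by its highest posterior probability.
        scored = []
        for i, x in enumerate(pool):
            proba = clf.predict_proba(x)
            best = max(range(len(proba)), key=proba.__getitem__)
            scored.append((proba[best], i, clf.classes_[best]))
        scored.sort(reverse=True)
        chosen = scored[:per_round]
        # Move the most confident samples, with their predicted labels, into the labeled set.
        for _, i, label in chosen:
            X_l.append(pool[i])
            y_l.append(label)
        chosen_idx = {i for _, i, _ in chosen}
        pool = [x for i, x in enumerate(pool) if i not in chosen_idx]
        clf = CentroidClassifier().fit(X_l, y_l)  # retrain on the enlarged set
    return clf, X_l, y_l

X_lab, y_lab = [[0.0, 0.0], [4.0, 4.0]], ["neg", "pos"]
X_unl = [[0.5, 0.2], [3.8, 4.1], [0.1, 0.4], [4.2, 3.9]]
clf, X_aug, y_aug = self_train(X_lab, y_lab, X_unl)
```

Because the predicted labels are never revisited, an early wrong label is fed back into training, which is one way the method can end up worse than the baseline.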

2. Co-Training

Characteristics: the original version (Blum & Mitchell) targets multi-view data (web page text and hyperlinks). It looks at the problem from different views (angles) and relies on the differences between them.

The original uses 2 views: web page text and hyperlinks

p=1, n=3, k=30, u=75

Rule#1: Samples can be represented by two or more redundant, conditionally independent views

Rule#2: Each view is sufficient to train a strong classifier from the training samples

Four views work better [from Su Yan's paper], and the number of features m in each view is n/2, where n is the total number of features [from Wang Jiao's paper]. However, ordinary sentiment review text (NLP) has no natural multiple views. Given the very large number of features in sentiment text, the random subspace method [Random Subspace Method, RSM] is used to split the text feature space into multiple parts that serve as the views.

However, the views must at least satisfy the 'redundant but not completely correlated' condition.

The views should be independent of each other. If they are all completely correlated, the classifiers trained on the different views label the same unlabeled examples in exactly the same way, and the Co-Training algorithm degenerates into the Self-Training algorithm [from Gao Yuan's master's thesis].
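A rough Python 3 sketch of a Blum & Mitchell-style co-training loop over feature-subset views follows. It reads p, n, k, u as in their paper (p positive and n negative picks per classifier per round, k rounds, an unlabeled pool of size u). `CentroidClassifier` is a hypothetical stand-in for SVC or MultinomialNB, labels are assumed to be 1 and 0, and each view is assumed to be a list of feature indices; none of this is taken from the project's code.

```python
import math
import random

class CentroidClassifier:
    """Toy stand-in for a classifier that outputs posterior probabilities."""

    def fit(self, X, y):
        self.classes_ = sorted(set(y))
        self.centroids_ = []
        for c in self.classes_:
            rows = [x for x, label in zip(X, y) if label == c]
            self.centroids_.append([sum(col) / len(rows) for col in zip(*rows)])
        return self

    def predict_proba(self, x):
        scores = [-sum((a - b) ** 2 for a, b in zip(x, c)) for c in self.centroids_]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        return [e / sum(exps) for e in exps]

def project(x, view):
    return [x[j] for j in view]

def co_train(X_l, y_l, X_u, views, p=1, n=3, k=30, u=75, seed=0):
    X_l, y_l, rest = list(X_l), list(y_l), list(X_u)
    random.Random(seed).shuffle(rest)
    pool, rest = rest[:u], rest[u:]
    for _ in range(k):
        if not pool:
            break
        picked = {}  # pool index -> label assigned by some view's classifier
        for view in views:
            clf = CentroidClassifier().fit([project(x, view) for x in X_l], y_l)
            pos = clf.classes_.index(1)
            p_pos = [clf.predict_proba(project(x, view))[pos] for x in pool]
            order = sorted(range(len(pool)), key=p_pos.__getitem__)
            for i in order[::-1][:p]:  # most confident positives
                picked.setdefault(i, 1)
            for i in order[:n]:        # most confident negatives
                picked.setdefault(i, 0)
        for i, label in picked.items():
            X_l.append(pool[i])
            y_l.append(label)
        pool = [x for i, x in enumerate(pool) if i not in picked]
        # Replenish the pool from the remaining unlabeled data.
        pool, rest = pool + rest[:len(picked)], rest[len(picked):]
    classifiers = [CentroidClassifier().fit([project(x, v) for x in X_l], y_l)
                   for v in views]
    return classifiers, X_l, y_l

# Toy data: two well-separated clusters, two hand-picked views.
pos_rows = [[4 + 0.01 * i, 4 - 0.01 * i, 4 + 0.02 * i, 4.0] for i in range(20)]
neg_rows = [[0.01 * i, 0.0, -0.01 * i, 0.02 * i] for i in range(20)]
views = [[0, 1], [2, 3]]
classifiers, X_aug, y_aug = co_train([pos_rows[0], neg_rows[0]], [1, 0],
                                     pos_rows[1:] + neg_rows[1:], views, k=2, u=38)
```

If the views were identical, both classifiers would rank the pool the same way and pick the same samples each round, which is the degeneration into self-training described above.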

Source

The method first appeared in Tin Kam Ho's paper The Random Subspace Method for Constructing Decision Forests, for improving weak classifiers.

① From Dr. Wang Jiao's paper

Assume the original feature space has n dimensions and the random subspace has m dimensions, with m < n. The labeled dataset L contains l samples, i.e. |L| = l. Any p ∈ L can be written as p = (p1, p2, …, pn). Projecting p into the m-dimensional subspace gives a vector psub = (ps1, ps2, …, psm). The set Lsub of all l vectors psub is the projection of the labeled dataset L into its m-dimensional random subspace. Repeating this process K times yields K different views of the feature space, Lsubk (1 ≤ k ≤ K).

Q: This still does not clearly explain how the projection (the splitting) and the randomness work?
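One possible reading of the projection and the randomness, as a minimal Python 3 sketch: each view is a random choice of m feature indices, and projecting a sample means keeping only those coordinates. Drawing the indices without repetition inside a view, while letting different views overlap, is an assumption here; the quoted description does not settle it. The names `random_subspace_views` and `project` are hypothetical.

```python
import random

def random_subspace_views(n_features, m, K, seed=0):
    """Draw K views, each a sorted list of m distinct feature indices (m < n)."""
    if not 0 < m < n_features:
        raise ValueError("need 0 < m < n_features")
    rng = random.Random(seed)
    return [sorted(rng.sample(range(n_features), m)) for _ in range(K)]

def project(dataset, view):
    """Keep only the coordinates listed in `view` for every sample p in the dataset."""
    return [[p[j] for j in view] for p in dataset]

# Toy labeled set L with n = 6 features; K = 4 views of m = n/2 = 3 features each.
L = [[1, 2, 3, 4, 5, 6], [7, 8, 9, 10, 11, 12]]
views = random_subspace_views(n_features=6, m=3, K=4)
L_sub = [project(L, view) for view in views]
```

Here `L_sub[k]` plays the role of Lsubk: the same l samples, seen through the k-th random subset of features.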

② From Wikipedia:

1. Let the number of training points be N and the number of features in the training data be D.

2. Choose L to be the number of individual models in the ensemble.

3. For each individual model l, choose d_l (d_l < D) to be the number of input variables for l. It is common to have only one value of d_l for all the individual models.

4. For each individual model l, create a training set by choosing d_l features from D with replacement and train the model.
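The four steps can be sketched in Python 3 as a small ensemble. Features are drawn with replacement as step 4 is worded, so a model's feature list may contain duplicates; swapping `rng.choices` for `rng.sample` would draw them without replacement instead. `NearestCentroid` is a hypothetical toy base learner, and combining the models by majority vote is an assumption that the steps above do not specify.

```python
import random
from collections import Counter

class NearestCentroid:
    """Toy base learner: predicts the class whose centroid is closest."""

    def fit(self, X, y):
        self.centroids_ = {}
        for c in set(y):
            rows = [x for x, label in zip(X, y) if label == c]
            self.centroids_[c] = [sum(col) / len(rows) for col in zip(*rows)]
        return self

    def predict(self, x):
        def dist(c):
            return sum((a - b) ** 2 for a, b in zip(x, self.centroids_[c]))
        return min(self.centroids_, key=dist)

def fit_random_subspace_ensemble(X, y, n_models, d, seed=0):
    rng = random.Random(seed)
    D = len(X[0])  # step 1: number of features
    ensemble = []
    for _ in range(n_models):  # step 2: n_models individual models
        # Steps 3 and 4: d features per model, drawn with replacement.
        features = rng.choices(range(D), k=d)
        model = NearestCentroid().fit([[row[j] for j in features] for row in X], y)
        ensemble.append((features, model))
    return ensemble

def predict_majority(ensemble, x):
    votes = Counter(model.predict([x[j] for j in features])
                    for features, model in ensemble)
    return votes.most_common(1)[0][0]

X = [[0, 0, 0, 0], [1, 0, 1, 0], [4, 4, 4, 4], [5, 4, 5, 4]]
y = ["neg", "neg", "pos", "pos"]
ensemble = fit_random_subspace_ensemble(X, y, n_models=5, d=2)
print(predict_majority(ensemble, [4.5, 4, 4.5, 4]))
```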

③ Source: Tin Kam Ho, The Random Subspace Method for Constructing Decision Forests

Download resources

https://download.csdn.net/download/s1t16/85724818

边栏推荐

- The diffusion model is crazy again! This time the occupied area is

- Unity's rich text color sets the color to be fully transparent

- C#泛型方法

- [Zhejiang University] information sharing of the first and second postgraduate entrance examinations

- 作为过来人,写给初入职场的程序员的一些忠告

- Reading of double pointer instrument panel (II) - Identification of dial position

- 轻松上手Fluentd,结合 Rainbond 插件市场,日志收集更快捷

- 同花顺开户难么?网上开户安全么?

- 【毕业设计】基于半监督学习和集成学习的情感分析研究

- C # define and implement interface interface

猜你喜欢

Zhongshanshan: engineers after being blasted will take off | ONEFLOW u

【Pr】基础流程

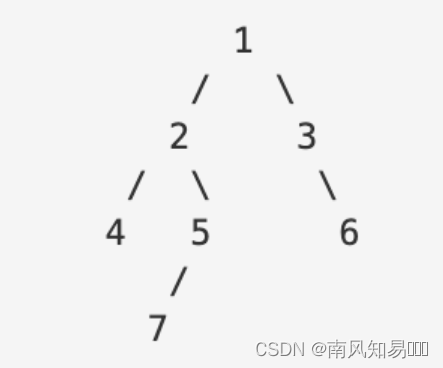

树结构二叉树

拜登簽署兩項旨在加强政府網絡安全的新法案

Deadlock found when trying to get lock; Try restarting transaction

Software architecture

NF-ResNet:去掉BN归一化,值得细读的网络信号分析 | ICLR 2021

Thoroughly understand the builder mode (builder)

Kukai TV ADB

RealNetworks vs. Microsoft: the battle in the early streaming media industry

随机推荐

网络地址转换NAT

Nansen Annual Report

Biden signe deux nouvelles lois visant à renforcer la cybersécurité du Gouvernement

直播出海 | 国内直播间再出爆品,「外卷」全球如何致胜

d的嵌套赋值

Verification code is the natural enemy of automation? See how the great God solved it

Struggle, programmer -- Chapter 46 this situation can be recalled, but it was at a loss at that time

unity防止按钮btn被连续点击

世界上所有的知名网络平台

[Software Engineering] acquire requirements

Groovy list operation

[PR] basic process

Redistemplate serialization

Software architecture

d的破坏与安全

C#泛型方法

C language student management system (open source)

Fluentd is easy to get started. Combined with the rainbow plug-in market, log collection is faster

一文彻底弄懂单例模式(Singleton)

Deadlock found when trying to get lock; Try restarting transaction