Pytorch distributed parallel processing

2022-08-05 06:48:00 【ProfSnail】

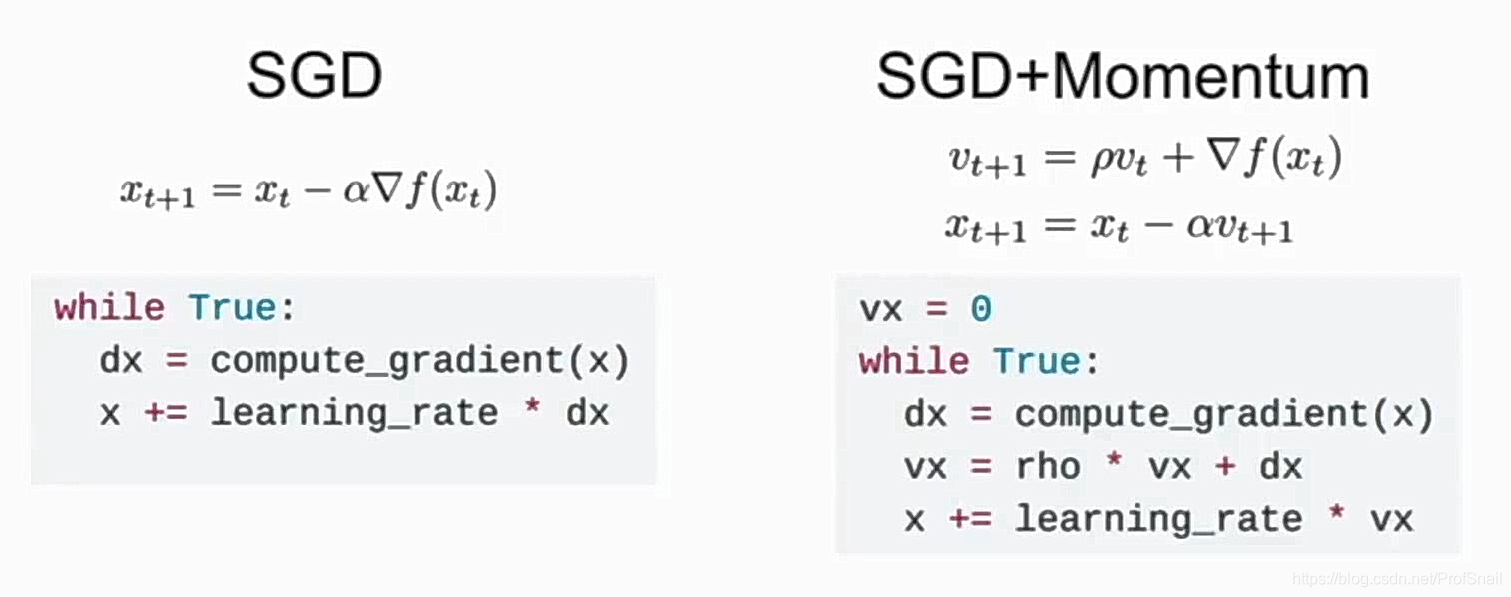

The official documentation for PyTorch 1.9 states clearly that nn.DataParallel and multiprocessing are no longer recommended, and that nn.parallel.DistributedDataParallel should be used instead. nn.parallel.DistributedDataParallel is recommended for multi-GPU training even when there is only a single node. The reason given in the official documentation is:

"The difference between DistributedDataParallel and DataParallel is: DistributedDataParallel uses multiprocessing where a process is created for each GPU, while DataParallel uses multithreading. By using multiprocessing, each GPU has its dedicated process, this avoids the performance overhead caused by GIL of Python interpreter."

In short, DistributedDataParallel is preferred because it gives each GPU its own dedicated process. DataParallel is not recommended because it is multi-threaded, so its threads contend for the Global Interpreter Lock (GIL) of the Python interpreter, which adds performance overhead.
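To make the process-per-device idea concrete, here is a minimal sketch of wrapping a model in DistributedDataParallel. It is only an illustration under several assumptions that are not in the original post: it runs a single process on the CPU with the gloo backend, uses a file-based rendezvous in a temporary directory, and the function name run_single_process_ddp, the toy nn.Linear model, and the random data are all made up for the example. Real multi-GPU training would start one such process per GPU and move the model to that GPU.

```python
import os
import tempfile

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP


def run_single_process_ddp() -> float:
    """Wrap a toy model in DDP and take one SGD step (hypothetical helper)."""
    with tempfile.TemporaryDirectory() as tmp:
        # Assumption for this sketch: a file-based rendezvous, so no free TCP
        # port has to be chosen. Each process in a real job would call this
        # with its own rank and the total world_size.
        dist.init_process_group(
            backend="gloo",  # CPU-friendly backend; NCCL is typical on GPUs
            init_method=f"file://{os.path.join(tmp, 'rendezvous')}",
            rank=0,
            world_size=1,
        )
        try:
            # On a GPU this would typically be:
            #   DDP(model.to(rank), device_ids=[rank])
            model = DDP(nn.Linear(4, 1))
            optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

            inputs = torch.randn(8, 4)   # random toy batch
            targets = torch.randn(8, 1)

            loss = nn.functional.mse_loss(model(inputs), targets)
            loss.backward()   # DDP synchronizes gradients across processes here
            optimizer.step()
            return loss.item()
        finally:
            dist.destroy_process_group()


if __name__ == "__main__":
    print(f"loss after one step: {run_single_process_ddp():.4f}")
```

With world_size=1 there is nothing to synchronize, so this only shows the shape of the API. The point is that the training loop itself looks the same as the non-distributed version; the per-GPU processes are created outside it.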

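The Basics section of the torch.distributed documentation adds that torch.distributed differs from torch.multiprocessing and torch.nn.DataParallel in two ways: it supports multiple network-connected machines, and the user must explicitly launch a separate copy of the main training script for each process. That extra launch step is less convenient.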

边栏推荐

猜你喜欢

随机推荐

js 使用雪花id生成随机id

DisabledDate date picker datePicker

The 25 best free games on mobile in 2020

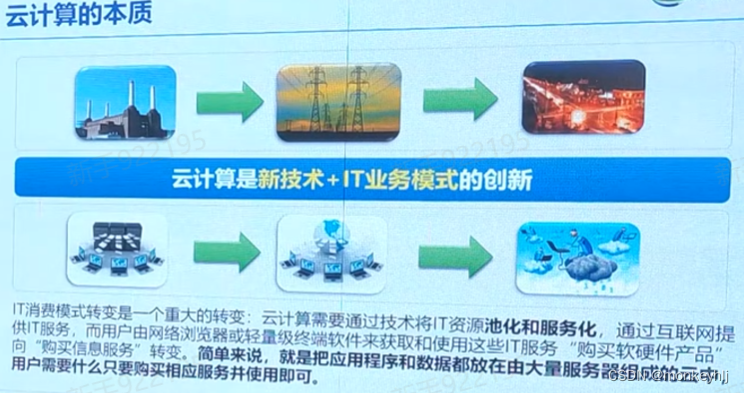

云计算基础-学习笔记

What is the website ICP record?

What is Alibaba Cloud Express Beauty Station?

Error correction notes for the book Image Processing, Analysis and Machine Vision

One-arm routing experiment and three-layer switch experiment

LaTeX image captioning text column automatic line wrapping

Collection of error records (write down when you encounter them)

LeetCode练习及自己理解记录(1)

Network Protocol Fundamentals - Study Notes

设置文本向两边居中展示

config.js相关配置汇总

link 和@improt的区别

js判断文字是否超过区域

reduce()方法的学习和整理

Come, come, let you understand how Cocos Creator reads and writes JSON files

Complete mysql offline installation in 5 minutes

文件内音频的时长统计并生成csv文件