
Partition data 2

2022-07-24 10:33:00 【nb1232】

```python
import math

import numpy as np
import torch
from torch import nn
import matplotlib.pyplot as plt
import torchvision
import torchvision.datasets as datasets
from torch.utils import data
from torch.utils.data import Dataset
from torchvision import transforms

# Download CIFAR-10 (50,000 training and 10,000 test images, 10 classes).
# The transform is only applied when indexing train_data[i]; the code below
# reads the raw uint8 array train_data.data directly.
train_data = datasets.CIFAR10(root="../data", train=True, transform=transforms.ToTensor(), download=True)
test_data = datasets.CIFAR10(root="../data", train=False, transform=transforms.ToTensor(), download=True)
```

```python
# Indices of the training images that belong to each class 0-9
t0 = [i for i, x in enumerate(train_data.targets) if x == 0]
t1 = [i for i, x in enumerate(train_data.targets) if x == 1]
t2 = [i for i, x in enumerate(train_data.targets) if x == 2]
t3 = [i for i, x in enumerate(train_data.targets) if x == 3]
t4 = [i for i, x in enumerate(train_data.targets) if x == 4]
t5 = [i for i, x in enumerate(train_data.targets) if x == 5]
t6 = [i for i, x in enumerate(train_data.targets) if x == 6]
t7 = [i for i, x in enumerate(train_data.targets) if x == 7]
t8 = [i for i, x in enumerate(train_data.targets) if x == 8]
t9 = [i for i, x in enumerate(train_data.targets) if x == 9]

# Labels of each class (every entry of label0 is 0, of label1 is 1, and so on)
label0 = [train_data.targets[i] for i in t0]
label1 = [train_data.targets[i] for i in t1]
label2 = [train_data.targets[i] for i in t2]
label3 = [train_data.targets[i] for i in t3]
label4 = [train_data.targets[i] for i in t4]
label5 = [train_data.targets[i] for i in t5]
label6 = [train_data.targets[i] for i in t6]
label7 = [train_data.targets[i] for i in t7]
label8 = [train_data.targets[i] for i in t8]
label9 = [train_data.targets[i] for i in t9]
```
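The ten list comprehensions above scan `train_data.targets` once per class. A minimal sketch of the same grouping in one helper, assuming the targets can be turned into a NumPy integer array (CIFAR-10 stores them as a Python list of ints); `split_indices_by_class` is a hypothetical name used only for illustration:

```python
import numpy as np


def split_indices_by_class(targets, num_classes=10):
    """Return a list whose c-th entry holds the positions where targets == c."""
    targets = np.asarray(targets)
    return [np.flatnonzero(targets == c).tolist() for c in range(num_classes)]


# Tiny synthetic label list standing in for train_data.targets
targets = [3, 0, 3, 1, 0, 2]
indices = split_indices_by_class(targets, num_classes=4)
print(indices)  # [[1, 4], [3], [5], [0, 2]]

# Every label gathered through a class's indices equals that class
labels = [[targets[i] for i in idx] for idx in indices]
print(labels)  # [[0, 0], [1], [2], [3, 3]]
```

With the real data, `split_indices_by_class(train_data.targets)[0]` performs the same computation as `t0`; since the CIFAR-10 training split is balanced, each list should hold 5,000 indices.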

```python
# Gather the images of each class. train_data.data is a uint8 array of shape
# (50000, 32, 32, 3); indexing it with a list of positions keeps the
# (N, H, W, C) layout, whereas np.append on an empty array would flatten the
# result to 1-D and testing `i in t0` on a list for every image is very slow.
data0 = train_data.data[t0]
data1 = train_data.data[t1]
data2 = train_data.data[t2]
data3 = train_data.data[t3]
data4 = train_data.data[t4]
data5 = train_data.data[t5]
data6 = train_data.data[t6]
data7 = train_data.data[t7]
data8 = train_data.data[t8]
data9 = train_data.data[t9]
```
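Why the per-class images are gathered by indexing rather than with `np.append`: when no `axis` is given, `np.append` flattens its inputs, so appending images to an empty array produces one long 1-D vector, and a 4-axis transpose cannot be applied to it. A small sketch with random stand-in images, assuming the CIFAR-10 layout `(N, 32, 32, 3)` with `uint8` pixels:

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in for train_data.data: 6 RGB images of 32x32 pixels, channels last
images = rng.integers(0, 256, size=(6, 32, 32, 3), dtype=np.uint8)
class_indices = [1, 4, 5]

# Appending to an empty array flattens everything and upcasts to float64
appended = np.array([])
for i in class_indices:
    appended = np.append(appended, images[i])
print(appended.shape, appended.dtype)  # (9216,) float64

# Fancy indexing keeps one image per row and the original dtype
gathered = images[class_indices]
print(gathered.shape, gathered.dtype)  # (3, 32, 32, 3) uint8

# Same pixel values, only the shape differs
print(np.array_equal(appended.reshape(gathered.shape), gathered))  # True
```

Indexing also copies the selected rows in one vectorized step instead of growing a new array on every iteration.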

```python
# Reorder axes from (N, H, W, C) to (N, C, H, W), the layout PyTorch expects
data0 = np.transpose(data0, (0, 3, 1, 2))
data1 = np.transpose(data1, (0, 3, 1, 2))
data2 = np.transpose(data2, (0, 3, 1, 2))
data3 = np.transpose(data3, (0, 3, 1, 2))
data4 = np.transpose(data4, (0, 3, 1, 2))
data5 = np.transpose(data5, (0, 3, 1, 2))
data6 = np.transpose(data6, (0, 3, 1, 2))
data7 = np.transpose(data7, (0, 3, 1, 2))
data8 = np.transpose(data8, (0, 3, 1, 2))
data9 = np.transpose(data9, (0, 3, 1, 2))
```
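The permutation `(0, 3, 1, 2)` lists, for each new axis, which old axis it comes from: sample stays first, channel (old axis 3) moves to position 1, and height and width follow. A quick check on a small random array:

```python
import numpy as np

rng = np.random.default_rng(1)
nhwc = rng.integers(0, 256, size=(2, 4, 5, 3), dtype=np.uint8)  # (N, H, W, C)
nchw = np.transpose(nhwc, (0, 3, 1, 2))                         # (N, C, H, W)
print(nchw.shape)  # (2, 3, 4, 5)

# Element [n, c, h, w] of the result is element [n, h, w, c] of the input
n, c, h, w = 1, 2, 3, 4
print(nchw[n, c, h, w] == nhwc[n, h, w, c])  # True

# The inverse permutation (0, 2, 3, 1) restores the original layout
print(np.array_equal(np.transpose(nchw, (0, 2, 3, 1)), nhwc))  # True
```

The plotting step uses the same idea on a single image: `(1, 2, 0)` turns a `(C, H, W)` grid back into the `(H, W, C)` layout that matplotlib expects.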

```python
# Min-max scale each class array to [0, 1] and convert it to a float32 tensor
# (float32 matches the default dtype of PyTorch model weights)
def min_max(x):
    x = x.astype(np.float32)
    return torch.tensor((x - np.min(x)) / (np.max(x) - np.min(x)))


data0 = min_max(data0)
data1 = min_max(data1)
data2 = min_max(data2)
data3 = min_max(data3)
data4 = min_max(data4)
data5 = min_max(data5)
data6 = min_max(data6)
data7 = min_max(data7)
data8 = min_max(data8)
data9 = min_max(data9)
```
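The scaling above computes x' = (x - min) / (max - min) with one global minimum and maximum per class array, not per image or per channel. For 8-bit images whose class array contains both a 0 and a 255 pixel, this equals dividing by 255, which is what `transforms.ToTensor()` does to uint8 images. A small numeric check of that relationship, with a hypothetical `min_max` helper declared so the block runs on its own:

```python
import numpy as np


def min_max(x):
    """Scale an array to [0, 1] using its global minimum and maximum."""
    x = x.astype(np.float32)
    return (x - x.min()) / (x.max() - x.min())


pixels = np.array([[0, 51, 255], [102, 204, 153]], dtype=np.uint8)
scaled = min_max(pixels)
print(scaled.min(), scaled.max())  # 0.0 1.0

# With min 0 and max 255 the result matches plain division by 255
print(np.allclose(scaled, pixels / 255.0))  # True

# If the extremes are narrower, min-max stretches the range instead
narrow = np.array([50, 100, 150], dtype=np.uint8)
print(min_max(narrow).tolist())  # [0.0, 0.5, 1.0]
```

The formula divides by zero for a constant array, so it assumes each class array has at least two distinct pixel values.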

```python
class DatasetXY(Dataset):
    """Minimal map-style dataset pairing images x with labels y."""

    def __init__(self, x, y):
        self._x = x
        self._y = y
        self._len = len(x)

    def __getitem__(self, item):  # return the (image, label) pair at index `item`
        return self._x[item], self._y[item]

    def __len__(self):
        return self._len
```
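`DatasetXY` is a minimal map-style dataset: `__getitem__` returns one (image, label) pair and `__len__` tells the `DataLoader` how many indices exist. When both images and labels are tensors, PyTorch's built-in `torch.utils.data.TensorDataset` behaves the same way. A sketch comparing the two on random stand-in data (the class is re-declared so the block runs on its own):

```python
import torch
from torch.utils.data import Dataset, TensorDataset


class DatasetXY(Dataset):
    def __init__(self, x, y):
        self._x = x
        self._y = y
        self._len = len(x)

    def __getitem__(self, item):
        return self._x[item], self._y[item]

    def __len__(self):
        return self._len


x = torch.rand(5, 3, 32, 32)               # stand-in for one class's images
y = torch.full((5,), 9, dtype=torch.long)  # every label is class 9

custom = DatasetXY(x, y)
builtin = TensorDataset(x, y)

print(len(custom), len(builtin))  # 5 5
xi, yi = custom[2]
xb, yb = builtin[2]
print(torch.equal(xi, xb), int(yi), int(yb))  # True 9 9
```

Since `label0` through `label9` are Python lists, using `TensorDataset` here would require converting them first, e.g. `TensorDataset(data0, torch.tensor(label0))`.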

```python
# One dataset per class
dataset0 = DatasetXY(data0, label0)
dataset1 = DatasetXY(data1, label1)
dataset2 = DatasetXY(data2, label2)
dataset3 = DatasetXY(data3, label3)
dataset4 = DatasetXY(data4, label4)
dataset5 = DatasetXY(data5, label5)
dataset6 = DatasetXY(data6, label6)
dataset7 = DatasetXY(data7, label7)
dataset8 = DatasetXY(data8, label8)
dataset9 = DatasetXY(data9, label9)

# One DataLoader per class, 64 images per batch
train0_iter = data.DataLoader(dataset0, batch_size=64, num_workers=0)
train1_iter = data.DataLoader(dataset1, batch_size=64, num_workers=0)
train2_iter = data.DataLoader(dataset2, batch_size=64, num_workers=0)
train3_iter = data.DataLoader(dataset3, batch_size=64, num_workers=0)
train4_iter = data.DataLoader(dataset4, batch_size=64, num_workers=0)
train5_iter = data.DataLoader(dataset5, batch_size=64, num_workers=0)
train6_iter = data.DataLoader(dataset6, batch_size=64, num_workers=0)
train7_iter = data.DataLoader(dataset7, batch_size=64, num_workers=0)
train8_iter = data.DataLoader(dataset8, batch_size=64, num_workers=0)
train9_iter = data.DataLoader(dataset9, batch_size=64, num_workers=0)
```
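The ten datasets and loaders can also be built in a loop. A sketch using `torch.utils.data.Subset`, which indexes into an existing dataset instead of copying the images; applied to `train_data` it would run the dataset's own `ToTensor()` transform per image (float tensors in [0, 1], channel-first) rather than the per-class min-max scaling. To keep the block runnable without downloading CIFAR-10, a random `TensorDataset` stands in for `train_data`, and `per_class_loaders` is a hypothetical helper name:

```python
import torch
from torch.utils.data import DataLoader, Subset, TensorDataset


def per_class_loaders(dataset, targets, num_classes=10, batch_size=64):
    """Build one DataLoader per class from a dataset and its list of labels."""
    loaders = []
    for c in range(num_classes):
        indices = [i for i, t in enumerate(targets) if t == c]
        loaders.append(DataLoader(Subset(dataset, indices), batch_size=batch_size))
    return loaders


# Random stand-in for train_data: 200 images, labels cycling through 0..9
targets = [i % 10 for i in range(200)]
images = torch.rand(200, 3, 32, 32)
dataset = TensorDataset(images, torch.tensor(targets))

loaders = per_class_loaders(dataset, targets, batch_size=8)
x, y = next(iter(loaders[9]))
print(x.shape)                  # torch.Size([8, 3, 32, 32])
print(y.tolist())               # [9, 9, 9, 9, 9, 9, 9, 9]
print(len(loaders[9].dataset))  # 20
```

With the real data this would be called as `per_class_loaders(train_data, train_data.targets)`, and `loaders[9]` would play the role of `train9_iter`.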

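```python
# Show the first batch of class 9 (truck) as an 8x8 image grid
for x, y in train9_iter:
    # make_grid tiles the (64, 3, 32, 32) batch into one (3, H, W) image
    x = torchvision.utils.make_grid(x)
    # print(x)
    # x = torch.squeeze(x)
    # print(x.shape)
    # matplotlib expects (H, W, C), so move the channel axis last
    plt.imshow(np.transpose(x.numpy(), (1, 2, 0)), aspect='auto')
    plt.show()
    break
```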

- zoj-Swordfish-2022-5-6

- Ribbon's loadbalancerclient, zoneawareloadbalancer and zoneavoidancerule are three musketeers by default

- MySQL - 普通索引

- DSP CCS software simulation

- Domain Driven practice summary (basic theory summary and analysis + Architecture Analysis and code design + specific application design analysis V) [easy to understand]

- Tree array-

- 2022, will lead the implementation of operation and maintenance priority strategy

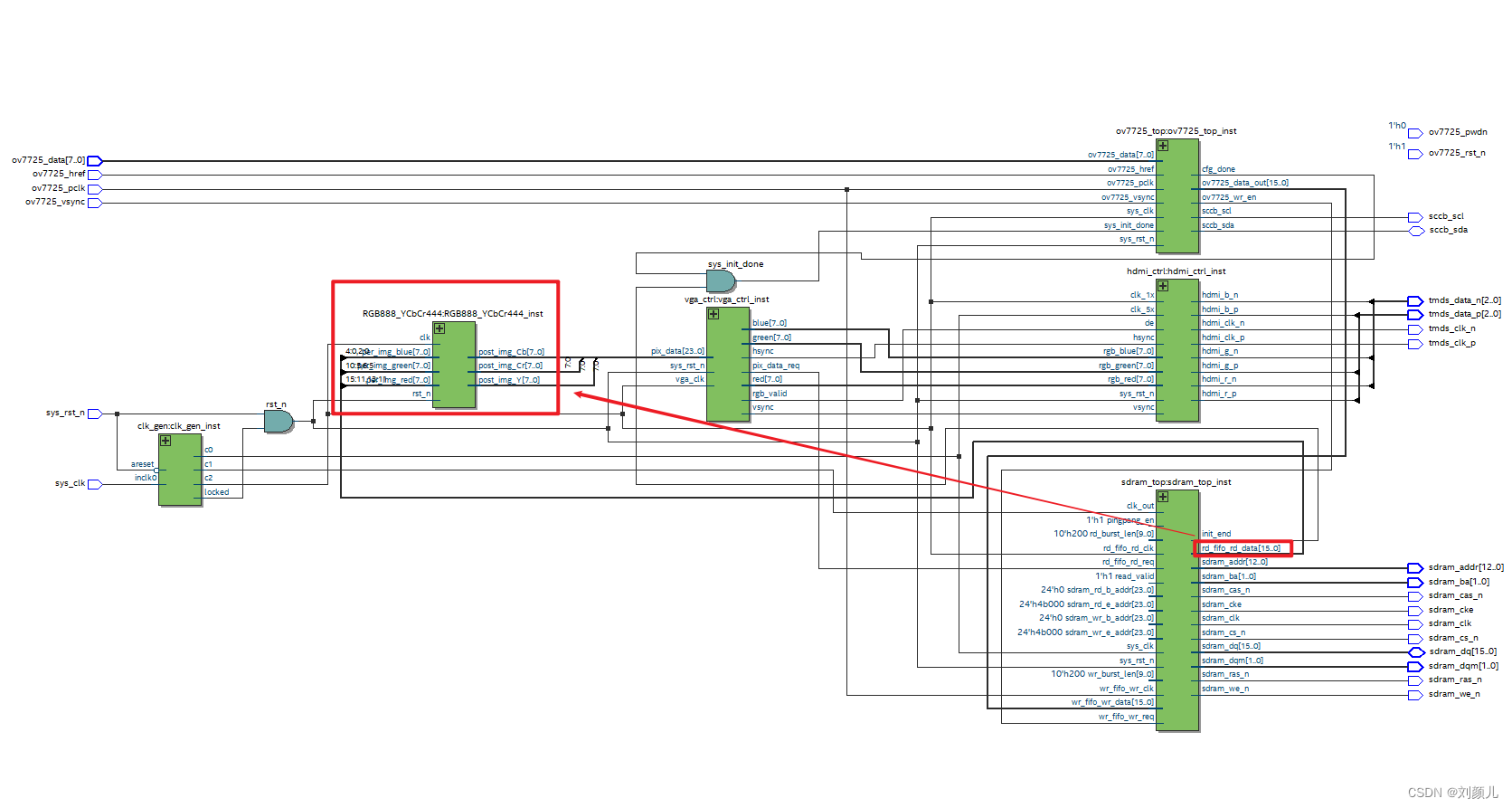

- Image processing: rgb565 to rgb888

- Set up mail server with dynamic ip+mdaemon

- String__

猜你喜欢

Interpretation of websocket protocol -rfc6455

Arduino + AD9833 waveform generator

Image processing: rgb565 to rgb888

Adobe substance 3D Designer 2021 software installation package download and installation tutorial

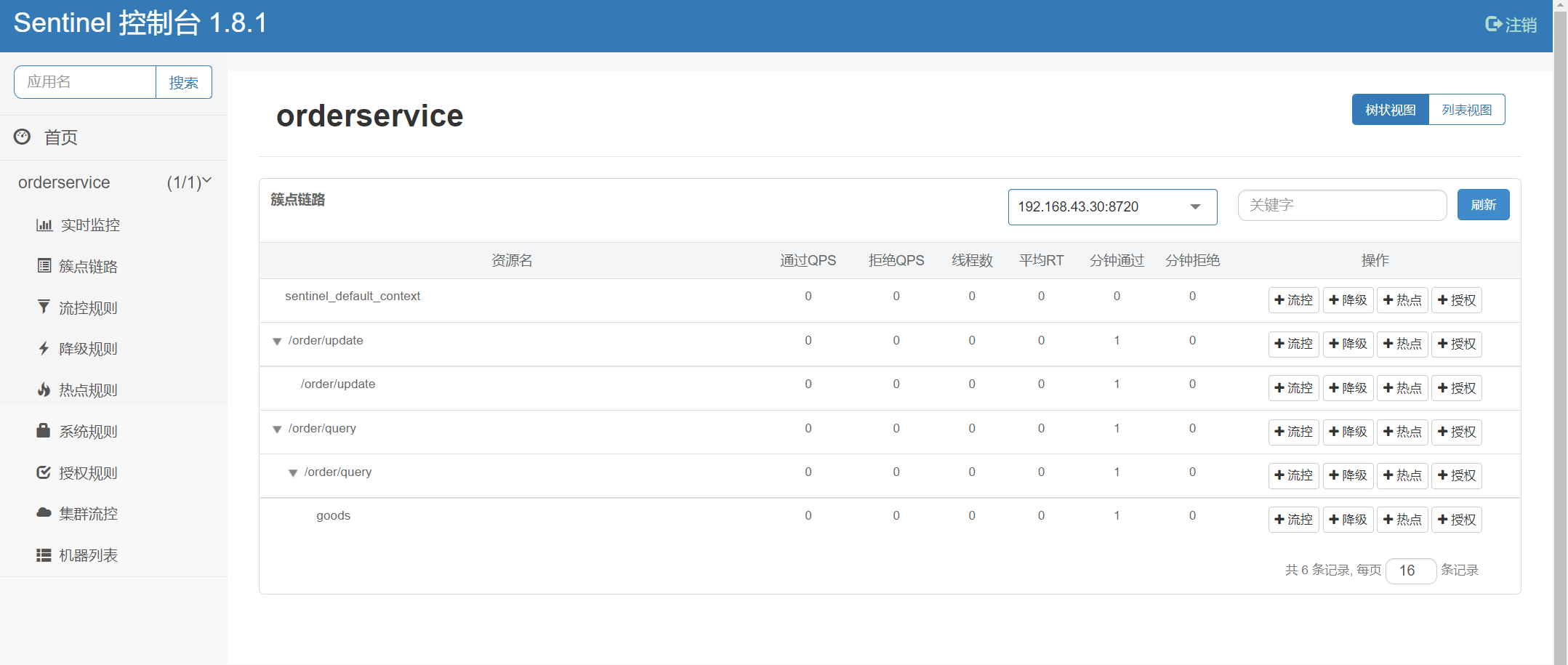

Sentinel 三种流控模式

MySQL - 唯一索引

Adobe Substance 3D Designer 2021软件安装包下载及安装教程

Figure model 2-2022-5-13

In depth analysis of common cross end technology stacks of app

Association Rules -- July 10, 2022

随机推荐

常量指针、指针常量

多表查询之子查询_单行单列情况

MySQL - 索引的隐藏和删除

N叉树、page_size、数据库严格模式修改、数据库中delect和drop的不同

Sentinel 实现 pull 模式规则持久化

Intranet remote control tool under Windows

Ribbon's loadbalancerclient, zoneawareloadbalancer and zoneavoidancerule are three musketeers by default

Adobe substance 3D Designer 2021 software installation package download and installation tutorial

Constant pointer, pointer constant

Kotlin advanced

题解——Leetcode题库第283题

CMS vulnerability recurrence - foreground arbitrary user password modification vulnerability

NIO知识点

Kotlin Advanced Grammar

757. Set the intersection size to at least 2: greedy application question

Add a love power logo to your website

Uniapp calendar component

[sword finger offer II 115. reconstruction sequence]

Interpretation of websocket protocol -rfc6455

In depth analysis of common cross end technology stacks of app