Blocking and intercepting spider crawling on Apache, IIS6, and IIS7 dedicated-IP hosts (applicable to VPS virtual machine servers)

2022-06-28 03:04:00 【wwwwestcn】

If the traffic comes from normal search engine spiders, blocking them is not recommended: the site would lose its indexing and ranking in Baidu and other search engines, which can cost you customers. Consider first upgrading the virtual host plan to get more traffic, or upgrading to a cloud server, which has no such limit. For more details, see: http://www.west.cn/faq/list.asp?unid=626

1. Environments built with the website management assistant: follow the instructions at http://www.west.cn/faq/list.asp?unid=650 to enable the pseudo-static (URL rewrite) component.

2. Manually built windows2003+IIS environments: follow the instructions at http://www.west.cn/faq/list.asp?unid=639 to load the pseudo-static component.

3. Then add the rules below to the configuration file that matches your system.

Rules file on Linux: .htaccess (create the .htaccess file manually in the site root directory)

<IfModule mod_rewrite.c>

RewriteEngine On

#Block spider

RewriteCond %{HTTP_USER_AGENT} "SemrushBot|Webdup|AcoonBot|AhrefsBot|Ezooms|EdisterBot|EC2LinkFinder|jikespider|Purebot|MJ12bot|WangIDSpider|WBSearchBot|Wotbox|xbfMozilla|Yottaa|YandexBot|Jorgee|SWEBot|spbot|TurnitinBot-Agent|mail.RU|curl|perl|Python|Wget|Xenu|ZmEu" [NC]

RewriteRule !(^robots\.txt$) - [F]

</IfModule>

Rules file on windows2003: httpd.conf

#Block spider

RewriteCond %{HTTP_USER_AGENT} (SemrushBot|Webdup|AcoonBot|AhrefsBot|Ezooms|EdisterBot|EC2LinkFinder|jikespider|Purebot|MJ12bot|WangIDSpider|WBSearchBot|Wotbox|xbfMozilla|Yottaa|YandexBot|Jorgee|SWEBot|spbot|TurnitinBot-Agent|mail.RU|curl|perl|Python|Wget|Xenu|ZmEu) [NC]

RewriteRule !(^/robots\.txt$) - [F]

Rules file on windows2008: web.config

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<system.webServer>

<rewrite>

<rules>

<rule name="Block spider">

<match url="(^robots\.txt$)" ignoreCase="false" negate="true" />

<conditions>

<add input="{HTTP_USER_AGENT}" pattern="SemrushBot|Webdup|AcoonBot|AhrefsBot|Ezooms|EdisterBot|EC2LinkFinder|jikespider|Purebot|MJ12bot|WangIDSpider|WBSearchBot|Wotbox|xbfMozilla|Yottaa|YandexBot|Jorgee|SWEBot|spbot|TurnitinBot-Agent|curl|perl|Python|Wget|Xenu|ZmEu" ignoreCase="true" />

</conditions>

<action type="AbortRequest" />

</rule>

</rules>

</rewrite>

</system.webServer>

</configuration>

Corresponding Nginx blocking rules

Add the code to the server block of the corresponding site configuration file.

if ($http_user_agent ~ "Bytespider|Java|PhantomJS|SemrushBot|Scrapy|Webdup|AcoonBot|AhrefsBot|Ezooms|EdisterBot|EC2LinkFinder|jikespider|Purebot|MJ12bot|WangIDSpider|WBSearchBot|Wotbox|xbfMozilla|Yottaa|YandexBot|Jorgee|SWEBot|spbot|TurnitinBot-Agent|mail.RU|perl|Python|Wget|Xenu|ZmEu|^$" )

{

return 444;

}

Note: by default, these rules block a set of lesser-known spiders. To block other spiders, add their names to the list following the same pattern.
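Before adding a new name to the list, it can help to check offline which user agents the pattern would catch. The following is a minimal Python sketch and not part of the original rules: `BLOCKED_AGENTS`, `AGENT_RE`, and `is_blocked` are hypothetical names, and the sketch assumes the behavior of the .htaccess rule above (case-insensitive substring match on the user agent, with robots.txt in the site root exempted). It does not model the Nginx rule, which uses a different list and also rejects empty user agents.

```python
import re

# User-agent fragments copied from the .htaccess rule in this article.
BLOCKED_AGENTS = (
    "SemrushBot|Webdup|AcoonBot|AhrefsBot|Ezooms|EdisterBot|EC2LinkFinder|"
    "jikespider|Purebot|MJ12bot|WangIDSpider|WBSearchBot|Wotbox|xbfMozilla|"
    "Yottaa|YandexBot|Jorgee|SWEBot|spbot|TurnitinBot-Agent|mail.RU|curl|"
    "perl|Python|Wget|Xenu|ZmEu"
)

# The [NC] flag on RewriteCond corresponds to case-insensitive matching.
AGENT_RE = re.compile(BLOCKED_AGENTS, re.IGNORECASE)


def is_blocked(user_agent: str, path: str) -> bool:
    """Hypothetical helper: True if the .htaccess rule would answer 403."""
    # The RewriteRule is negated, so robots.txt in the site root stays reachable.
    if path.lstrip("/") == "robots.txt":
        return False
    # RewriteCond matches anywhere in the header, hence search() and not match().
    return AGENT_RE.search(user_agent) is not None


if __name__ == "__main__":
    samples = [
        ("Mozilla/5.0 (compatible; AhrefsBot/7.0)", "/index.html"),
        ("Mozilla/5.0 (compatible; AhrefsBot/7.0)", "/robots.txt"),
        ("Mozilla/5.0 (compatible; Baiduspider/2.0)", "/"),
    ]
    for agent, path in samples:
        print(path, agent, "->", "blocked" if is_blocked(agent, path) else "allowed")
```

Because the match is a substring search, short fragments such as `perl` or `curl` also hit any user agent that merely contains those letters, so it is worth running a few real browser user agents through the check before deploying a longer list.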

Original article: https://www.west.cn/faq/list.asp?unid=820

边栏推荐

- Severe Tire Damage:世界上第一个在互联网上直播的摇滚乐队

- Review the submission of small papers for 2022 spring semester courses

- [block coding] simulation of image block coding based on MATLAB

- How does win11 add printers and scanners? Win11 add printer and scanner settings

- Summary of software testing tools in 2021 - fuzzy testing tools

- 榜单首发——前装搭载率站上10%大关,数字钥匙方案供应商TOP10

- [today in history] June 18: JD was born; The online store platform Etsy was established; Facebook releases Libra white paper

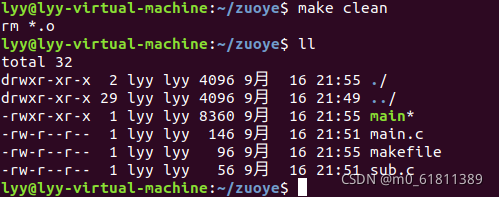

- 第一次使用gcc和makefile编写c程序

- [elevator control system] design of elevator control system based on VHDL language and state machine, using state machine

- 如何判断线程池已经执行完所有任务了?

猜你喜欢

![[today in history] June 8: the father of the world wide web was born; PHP public release; IPhone 4 comes out](/img/1b/31b5adbec5182207c371a23e41baa3.png)

[today in history] June 8: the father of the world wide web was born; PHP public release; IPhone 4 comes out

Le routage des microservices de la passerelle a échoué au chargement des ressources statiques des microservices

Writing C program with GCC and makefile for the first time

Opencv -- geometric space transformation (affine transformation and projection transformation)

分布式事务—基于消息补偿的最终一致性方案(本地消息表、消息队列)

Initial linear regression

![[plug in -statistical] statistics the number of code lines and related data](/img/84/ad5e78f7e0ed86d9c21cabe97b9c8e.png)

[plug in -statistical] statistics the number of code lines and related data

Severe Tire Damage:世界上第一个在互联网上直播的摇滚乐队

How to run unity webgl after packaging (Firefox configuration)

What if win11 cannot use dynamic wallpaper? Solution of win11 without dynamic wallpaper

随机推荐

Moving Tencent to the cloud: half of the evolution history of cloud server CVM

字节跳动面试官:一张图片占据的内存大小是如何计算

Single page application (SPA) hash route and historical API route

抓包整理外篇fiddler————了解工具栏[一]

How to enable multi language text suggestions? Win11 method to open multilingual text suggestions

[inverted pendulum control] Simulink simulation of inverted pendulum control based on UKF unscented Kalman filter

How does win11 add printers and scanners? Win11 add printer and scanner settings

访问网站提示:您未被授权查看该页恢复办法

PSM总结

Gateway微服務路由使微服務靜態資源加載失敗

抓包整理外篇fiddler————了解工具栏[一]

为什么大厂压力大,竞争大,还有这么多人热衷于大厂呢?

[cloud native] - docker installation and deployment of distributed database oceanbase

Arduino Esp8266 Web LED控制

js清空对象和对象的值:

分布式事务解决方案Seata-Golang浅析

LiveData 面试题库、解答---LiveData 面试 7 连问~

[block coding] simulation of image block coding based on MATLAB

Severe Tire Damage:世界上第一个在互联网上直播的摇滚乐队

math_(函数&数列)极限的含义&误区和符号梳理/邻域&去心邻域&邻域半径