TensorFlow in danger! The one abandoning it is Google itself


2022-06-21 10:07:00 [QbitAI]

Xiao Xiao, Feng Se, reporting from Aofei Temple

QbitAI | Official account: QbitAI

TensorFlow has racked up 166k stars and witnessed the rise of deep learning, yet its position is now under threat.

This time the challenge comes not from its old rival PyTorch but from a newcomer, JAX.

In the latest wave of heated AI debate, even fast.ai founder Jeremy Howard ended up saying:

That JAX is gradually replacing TensorFlow is already widely known. It's happening now (at least within Google).

LeCun believes that the fierce competition between deep learning frameworks has entered a new stage.

LeCun said that Google's TensorFlow was originally more popular than Torch. Since Meta's PyTorch appeared, however, PyTorch has become more popular than TensorFlow.

Google Brain, DeepMind and many external projects have now started using JAX.

A typical example is the recently viral DALL·E Mini. Its author wrote it in JAX to make full use of TPUs. Some people who tried it remarked:

It's much faster than PyTorch.

According to Business Insider, JAX is expected to cover all of Google's products that use machine learning within the next few years.

Seen this way, Google's vigorous internal promotion of JAX looks more like a "self-rescue" campaign on the framework front.

Where did JAX come from?

Google had actually been preparing JAX for a long time.

As early as 2018, a three-person team at Google Brain built it.

The research was published in a paper titled Compiling machine learning programs via high-level tracing:

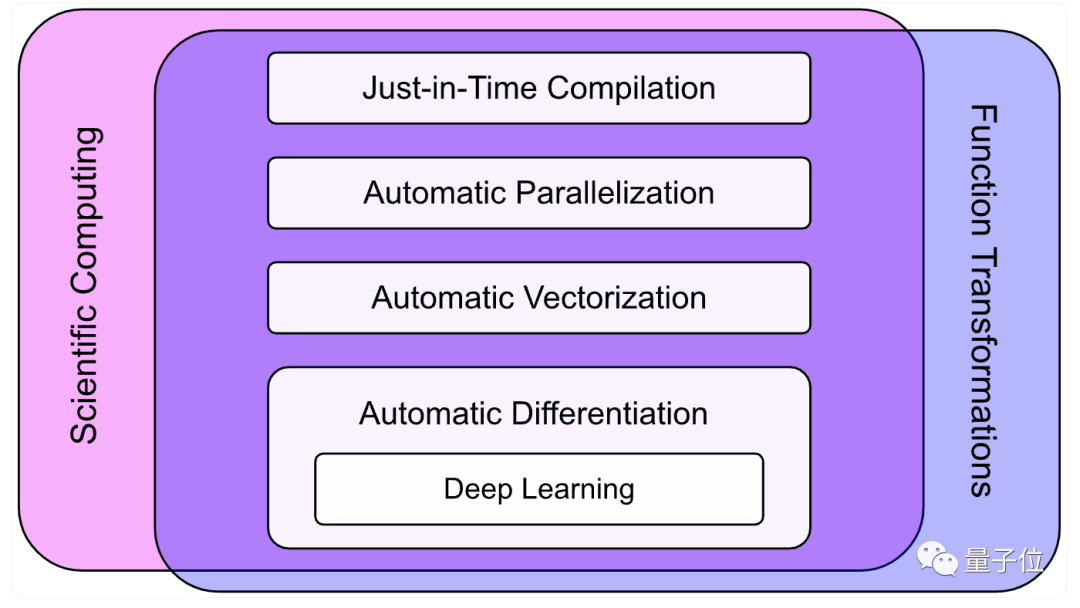

JAX is a Python library for high-performance numerical computing, and deep learning is just one of its uses.

Its popularity has kept rising since its release.

Its biggest selling point is speed.

Here is an example to get a feel for it.

For example, computing the sum of the first three powers of a matrix with NumPy takes about 478 milliseconds.

JAX needs only 5.54 milliseconds, which is 86 times faster than NumPy.
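The article does not include its benchmark code, so the following is only a minimal sketch. It assumes that "the first three powers" means element-wise powers (x + x² + x³), and it uses an arbitrarily chosen 2000 × 2000 float32 array. The names `fn_numpy`, `fn_jax` and `time_it` are illustrative. The printed timings depend entirely on your hardware and will not necessarily reproduce the 478 ms and 5.54 ms figures above.

```python
import time

import numpy as np
import jax
import jax.numpy as jnp


def fn_numpy(x):
    # Assumed benchmark: sum of the first three element-wise powers, x + x^2 + x^3
    return x + x * x + x * x * x


@jax.jit
def fn_jax(x):
    # Same computation; jax.jit lets XLA compile and fuse the element-wise ops
    return x + x * x + x * x * x


def time_it(f, x, repeats=5):
    """Return the best wall-clock time in milliseconds over `repeats` runs."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        out = f(x)
        # JAX dispatches work asynchronously; wait for the result before stopping the clock
        if hasattr(out, "block_until_ready"):
            out.block_until_ready()
        best = min(best, time.perf_counter() - start)
    return best * 1000


x_np = np.random.default_rng(0).standard_normal((2000, 2000)).astype(np.float32)
x_jax = jnp.asarray(x_np)
fn_jax(x_jax).block_until_ready()  # warm-up call: triggers tracing and XLA compilation

print(f"NumPy: {time_it(fn_numpy, x_np):.2f} ms")
print(f"JAX:   {time_it(fn_jax, x_jax):.2f} ms")
```

The warm-up call matters. The first call to a jit-compiled function includes tracing and compilation time. `block_until_ready()` is also needed, because otherwise the timer would only measure how long it takes JAX to dispatch the work.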

Why is it so fast? There are several reasons, including:

1. A NumPy accelerator. NumPy is indispensable: nobody doing scientific computing or machine learning in Python can do without it, yet it has never natively supported hardware acceleration such as GPUs.

JAX's computational APIs all follow NumPy, so models can run on GPUs and TPUs with very little effort. This alone has won over many people.

2. XLA. XLA (Accelerated Linear Algebra) is an optimizing compiler for linear algebra. JAX is built on top of XLA, which greatly raises the ceiling on JAX's computation speed.

3. JIT. Researchers can use XLA to turn their own functions into just-in-time (JIT) compiled versions. Adding a simple decorator to a computation function can make it run orders of magnitude faster.
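The points above can be made concrete with a small sketch. The `normalize` function and its input are hypothetical examples. The same NumPy-style code runs through `jax.numpy`, and `jax.jit` wraps it for XLA compilation. `.lower(...).as_text()` prints the program handed to XLA; its exact text format varies between JAX versions. The sketch also shows one real difference from NumPy: JAX arrays are immutable.

```python
import numpy as np
import jax
import jax.numpy as jnp


def normalize(x):
    # NumPy-style code: only the module prefix (jnp instead of np) changes
    return (x - jnp.mean(x, axis=0)) / (jnp.std(x, axis=0) + 1e-6)


# "Adding a simple decorator": jax.jit traces the function and compiles it with XLA
normalize_jit = jax.jit(normalize)

x = jnp.arange(12.0).reshape(4, 3)
print(normalize_jit(x))

# Peek at the program JAX hands to XLA (format depends on the JAX version)
print(jax.jit(normalize).lower(x).as_text()[:300])

# JAX arrays are immutable: "in-place" updates use .at[...] and return a new array
y = x.at[0, 0].set(100.0)
print(x[0, 0], y[0, 0])

print(jax.devices())  # the CPU, GPU or TPU devices JAX can run on
```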

In addition, JAX is fully compatible with Autograd and supports automatic differentiation. Through function transformations such as grad, hessian, jacfwd and jacrev, it supports both reverse-mode and forward-mode differentiation, and the two can be composed in any order.
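Here is a short sketch of these transformations on a toy function f(x) = Σ xᵢ³, which is chosen only for illustration. Its gradient is 3x² and its Hessian is diag(6x). `jax.hessian` is itself defined as a composition of forward-mode and reverse-mode transforms. Composing `jacfwd` and `jacrev` in either order yields the same Hessian.

```python
import jax
import jax.numpy as jnp


def f(x):
    # Toy scalar function of a vector: f(x) = sum(x_i^3)
    return jnp.sum(x ** 3)


x = jnp.array([1.0, 2.0, 3.0])

grad_f = jax.grad(f)                        # reverse mode: 3 * x**2 -> 3, 12, 27
hess_f = jax.hessian(f)                     # diag(6 * x) -> diagonal 6, 12, 18
fwd_over_rev = jax.jacfwd(jax.jacrev(f))    # forward-over-reverse
rev_over_fwd = jax.jacrev(jax.jacfwd(f))    # reverse-over-forward, same result

print(grad_f(x))
print(hess_f(x))
```

Forward-over-reverse is usually the cheaper choice for Hessians, which is why `jax.hessian` uses it. The point here is that the transforms compose freely.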

Of course, JAX also has some shortcomings.

For example:

1. Although JAX is billed as an accelerator, it does not fully optimize every operation for CPU computation.

2. JAX is too new and has not yet built a complete foundational ecosystem like TensorFlow's, so Google has not launched it as a finished product.

3. The time and cost of debugging are unpredictable, and its "side effects" are not entirely clear.

4. It does not support Windows natively and can only run there inside a virtual environment.

5. It has no data loader of its own, so you must borrow TensorFlow's or PyTorch's.

……
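One common source of the "side effects" confusion in point 3 is how `jax.jit` treats ordinary Python code. Python code inside the function runs only while JAX traces it, not on every call, and the function is retraced only when input shapes or dtypes change. Here is a minimal sketch; the `scaled` function and the `calls` list are hypothetical. `jax.debug.print` is JAX's mechanism for printing runtime values from inside compiled code.

```python
import jax
import jax.numpy as jnp

calls = []  # hypothetical log used to observe when the Python body actually runs


@jax.jit
def scaled(x):
    # Python side effects run at trace time, not on every call
    calls.append("traced")
    print("tracing with", x)                  # prints an abstract tracer, not the values
    jax.debug.print("runtime value: {}", x)   # staged into the compiled program
    return 2 * x


scaled(jnp.array([1.0, 2.0]))        # first call: traces and compiles
scaled(jnp.array([3.0, 4.0]))        # same shape and dtype: reuses the cache, no retrace
scaled(jnp.array([1.0, 2.0, 3.0]))   # new shape: traces again
print(calls)
```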

Even so, the simple, flexible and easy-to-use JAX caught on first at DeepMind. Deep learning libraries released in 2020, such as Haiku and RLax, are all built on it.

This year Adam Paszke, one of the original authors of PyTorch, also joined the JAX team full time.

JAX's open-source project currently has 18.4k stars on GitHub.

Notably, many voices during this period have suggested that it is likely to replace TensorFlow.

This is partly because of JAX's own strengths, but mostly because of TensorFlow's many problems.

Why is Google switching to JAX?

TensorFlow, born in 2015, was once all the rage. Soon after its launch it overtook a crowd of "trendy" frameworks such as Torch, Theano and Caffe to become the most popular machine learning framework.

But in 2017 a revamped PyTorch "made a comeback".

PyTorch is a machine learning library that Meta built on Torch. Because it is easy to pick up and easy to understand, it quickly won over many researchers and even showed signs of overtaking TensorFlow.

TensorFlow, by contrast, grew increasingly bloated through frequent updates and interface changes, and it gradually lost developers' trust.

(By share of questions on Stack Overflow, PyTorch has risen year after year, while TensorFlow has stagnated.)

As the competition went on, TensorFlow's weaknesses were gradually exposed. Unstable APIs, complex implementations and a steep learning curve were not fixed by updates; instead, the framework's structure grew more complex.

Meanwhile, TensorFlow failed to keep building on strengths such as runtime efficiency.

In academia, PyTorch's usage is gradually surpassing TensorFlow's.

At major top conferences such as ACL and ICLR in particular, implementations built with PyTorch have accounted for more than 80% in recent years, while TensorFlow's share keeps falling.

That is why Google could not sit still and is trying to use JAX to regain "dominance" among machine learning frameworks.

Although JAX is not billed as "a general-purpose deep learning framework", Google's resources have been tilting toward JAX ever since its release.

On one hand, Google Brain and DeepMind are building more and more libraries on JAX.

These include Google Brain's Trax, Flax and Jax-md, as well as DeepMind's neural network library Haiku and reinforcement learning library RLax, all of which are built on JAX.

According to Google:

In developing the JAX ecosystem, we will also aim to keep its design consistent (as far as possible) with existing TensorFlow libraries such as Sonnet and TRFL.

On the other hand, more projects are being implemented in JAX, and the recently viral DALL·E mini is one of them.

Because JAX can make better use of Google's TPUs, it performs much better than PyTorch in such settings, and more industrial projects previously built on TensorFlow are also moving to JAX.

Some netizens even joked about the real reason for JAX's sudden popularity: perhaps TensorFlow users simply couldn't stand that framework anymore.

So does JAX have a chance to replace TensorFlow and become the new force competing with PyTorch?

Which framework do you prefer?

Overall, many people still stand firmly with PyTorch.

They don't seem to like Google coming up with a new framework every year.

"JAX is very attractive, but it isn't 'revolutionary' enough to push people to abandon PyTorch for it."

But there are also quite a few JAX optimists.

Someone said that PyTorch is perfect, but JAX is closing the gap.

Some even cheered JAX on enthusiastically, claiming it is 10 times more powerful than PyTorch and that if Meta doesn't keep pushing, Google will win. (Tongue firmly in cheek.)

Still, some people don't care who wins or loses; they take a long-term view:

There is no best, only better. What matters most is that more players and good ideas join in, so that open source truly stands for good innovation.

Project address:

https://github.com/google/jax

Reference links:

https://twitter.com/jeremyphoward/status/1538380788324257793

https://twitter.com/ylecun/status/1538419932475555840

https://mp.weixin.qq.com/s/AoygUZK886RClDBnp1v3jw

https://www.deepmind.com/blog/using-jax-to-accelerate-our-research

https://github.com/tensorflow/tensorflow/issues/53549

边栏推荐

- 从零开始做网站11-博客开发

- Stream programming: stream support, creation, intermediate operation and terminal operation

- 一行代码加速 sklearn 运算上千倍

- TC软件详细设计文档(手机群控)

- Inner class

- 移动应用开发学习通测试题答案

- 多态&Class对象&注册工厂&反射&动态代理

- R language through rprofile Site file, user-defined configuration of R language development environment startup parameters, shutdown parameters, user-defined specified cran local image source download

- Mid 2022 Summary - step by step, step by step

- 流式编程:流支持,创建,中间操作以及终端操作

猜你喜欢

应用配置管理,基础原理分析

![The most authoritative Lei niukesi in history --- embedded Ai Road line [yyds]](/img/0c/95930c7c49c5ebeee9c179c035b317.jpg)

The most authoritative Lei niukesi in history --- embedded Ai Road line [yyds]

Style penetration of vant UI components -- that is, some styles in vant UI components cannot be modified

聊聊大火的多模态项目

1. is god horse a meta universe?

Stm32mp1 cortex M4 Development Part 9: expansion board air temperature and humidity sensor control

并发底层原理:线程、资源共享、volatile 关键字

Are you still using localstorage directly? The thinnest in the whole network: secondary encapsulation of local storage (including encryption, decryption and expiration processing)

程序員新人周一優化一行代碼,周三被勸退?

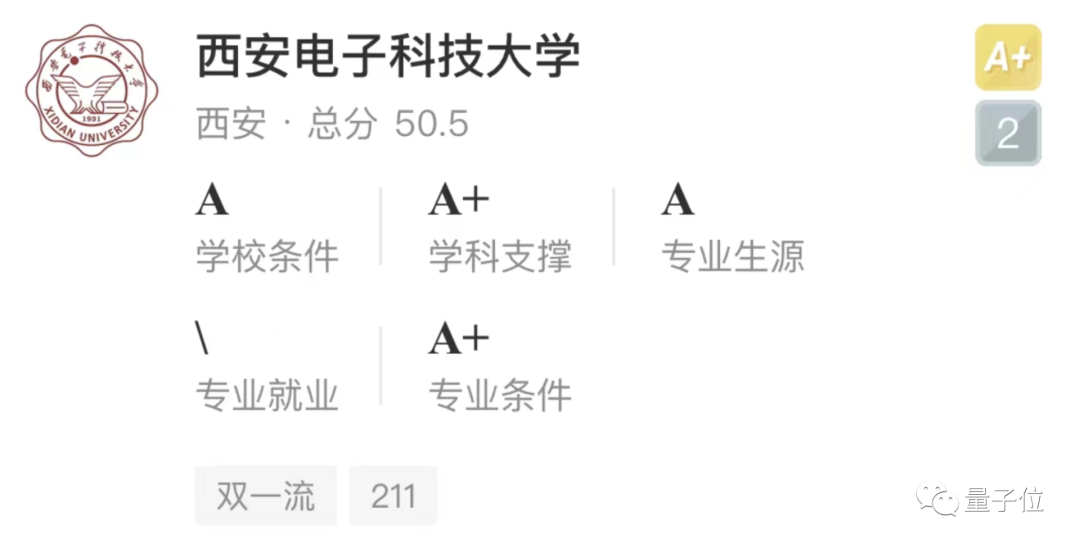

西电AI专业排名超清北,南大蝉联全国第一 | 2022软科中国大学专业排名

随机推荐

Original code, inverse code, complement calculation function applet; C code implementation;

stm32mp1 Cortex M4开发篇10:扩展板数码管控制

WCF RestFul+JWT身份验证

给电脑加装固态

The execution process before executing the main function after the DSP chip is powered on

从零开始做网站10-后台管理系统开发

异常

Underlying principle of Concurrency: thread, resource sharing, volatile keyword

Lei niukesi --- basis of embedded AI

Use this for attributes in mapstate

stm32mp1 Cortex M4开发篇9:扩展板空气温湿度传感器控制

109. use of usereducer in hooks (counter case)

Classification of ram and ROM storage media

Audio and video format introduction, encoding and decoding, audio and video synchronization

New programmers optimize a line of code on Monday and are discouraged on Wednesday?

leetcode:715. Range 模块【无脑segmentTree】

基因型填充前的质控条件简介

How to select embedded hands-on projects and embedded open source projects

Get the data in the configuration file properties

Are you still using localstorage directly? The thinnest in the whole network: secondary encapsulation of local storage (including encryption, decryption and expiration processing)