[Deep learning] TensorFlow is in danger, and Google itself is the one abandoning it

2022-06-22 09:59:00 【Demeanor 78】

By Xiao Xiao and Feng Se, from Aofei Temple

QbitAI | WeChat official account: QbitAI

With nearly 166,000 GitHub stars, TensorFlow, the framework that witnessed the rise of deep learning, now finds its position at risk.

And this time the shock comes not from its old rival PyTorch but from a newcomer, JAX.

In the latest round of heated AI debate, even fast.ai founder Jeremy Howard weighed in:

That JAX is gradually replacing TensorFlow is already widely known. Now it is happening (at least inside Google).

LeCun, for his part, believes that the fierce competition between deep learning frameworks has entered a new stage.

LeCun said that Google's TensorFlow was at first more popular than Torch. After Meta's PyTorch appeared, however, PyTorch became more popular than TensorFlow.

Now Google Brain, DeepMind, and many external projects have started using JAX.

A typical example is the recently viral DALL·E Mini, whose author programmed it in JAX to make full use of TPUs. Some users marveled after trying it:

It is much faster than PyTorch.

According to Business Insider, JAX is expected to cover all Google products that use machine learning within the next few years.

Seen this way, Google's vigorous internal push for JAX looks more like a "self-rescue" campaign on the framework front.

Where did JAX come from?

Google has in fact been preparing JAX for a long time.

As early as 2018, it was built by a three-person team at Google Brain.

The work was published in a paper titled Compiling machine learning programs via high-level tracing:

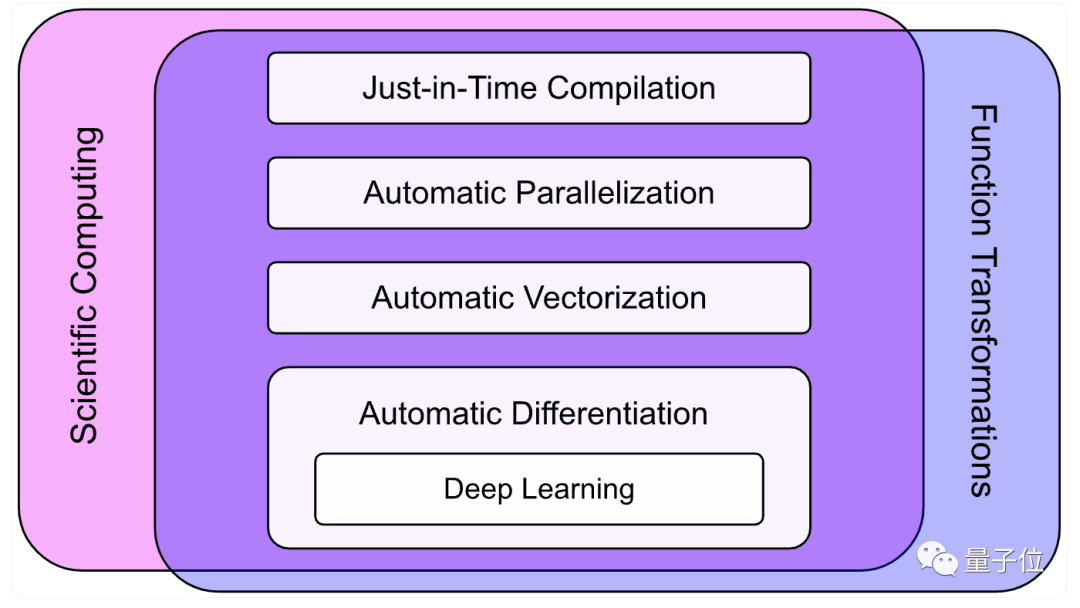

JAX is a Python library for high-performance numerical computing; deep learning is just one of its uses.

Its popularity has been rising ever since it was released.

Its biggest selling point is speed.

Here is an example to give a feel for it.

Computing the sum of the first three powers of a matrix takes about 478 milliseconds with NumPy.

With JAX it takes only 5.54 milliseconds, 86 times faster than NumPy.
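A comparison of this kind can be reproduced roughly as follows. This is only a sketch: the article does not say how the benchmark was written, so the elementwise form `x + x*x + x*x*x`, the 1000×1000 input, and the `time_call` helper are all assumptions made for illustration, and the timings you measure will depend on your hardware.

```python
import time

import numpy as np
import jax
import jax.numpy as jnp


def sum_of_powers(x):
    # Elementwise x + x^2 + x^3 (assumed form of the benchmark).
    # The same function works on NumPy arrays and on JAX arrays.
    return x + x * x + x * x * x


def time_call(fn, arg, repeats=10):
    """Hypothetical helper: average wall-clock seconds per call."""
    start = time.perf_counter()
    for _ in range(repeats):
        out = fn(arg)
        # JAX dispatches work asynchronously, so wait for the result
        # before stopping the clock; NumPy arrays lack this method.
        if hasattr(out, "block_until_ready"):
            out.block_until_ready()
    return (time.perf_counter() - start) / repeats


x_np = np.random.default_rng(0).standard_normal((1000, 1000)).astype(np.float32)
x_jax = jnp.asarray(x_np)

jitted = jax.jit(sum_of_powers)
jitted(x_jax).block_until_ready()  # warm-up call, triggers compilation

numpy_seconds = time_call(sum_of_powers, x_np)
jax_seconds = time_call(jitted, x_jax)
print(f"NumPy: {numpy_seconds * 1e3:.2f} ms, JAX (jit): {jax_seconds * 1e3:.2f} ms")
```

The warm-up call matters: the first invocation of a jitted function includes compilation time, which would otherwise distort the measurement.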

Why is it so fast? There are several reasons, including:

1. An accelerator for NumPy. NumPy's importance goes without saying: nobody doing scientific computing or machine learning in Python can do without it, yet it has never natively supported hardware acceleration such as GPUs.

JAX's computation API is based entirely on NumPy, so models can run on GPUs and TPUs with little effort. This point alone has won over many people.

2. XLA. XLA (Accelerated Linear Algebra) is an optimizing compiler for linear algebra. JAX is built on top of XLA, which substantially raises the ceiling on JAX's computing speed.

3. JIT. Researchers can use XLA to turn their own functions into just-in-time (JIT) compiled versions. This amounts to adding a simple decorator to a computation function, and it can increase computing speed by several orders of magnitude.
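The three points above can be seen together in a few lines. The sketch below is illustrative only: `normalize_rows` is a made-up function, not something from the article. It uses the NumPy-style `jax.numpy` API, is compiled through XLA by the `@jax.jit` decorator, and runs on whatever backend `jax.devices()` reports (CPU if no GPU or TPU is available).

```python
import jax
import jax.numpy as jnp


@jax.jit  # the "simple decorator" from point 3: compile this function with XLA
def normalize_rows(x):
    # jax.numpy mirrors the NumPy API (mean, std, broadcasting, ...).
    mean = jnp.mean(x, axis=1, keepdims=True)
    std = jnp.std(x, axis=1, keepdims=True)
    return (x - mean) / (std + 1e-6)


x = jnp.array([[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]])
normalized = normalize_rows(x)

# Lists the devices of the default backend JAX is using.
devices = jax.devices()
print(normalized)
print(devices)
```

The same source runs unchanged on CPU, GPU, or TPU; only the installed backend differs.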

In addition, JAX is fully compatible with Autograd and supports automatic differentiation. Through function transformations such as grad, hessian, jacfwd, and jacrev, it supports both reverse-mode and forward-mode differentiation, and the two can be composed in any order.
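A small sketch of these transformations, using a toy function chosen here for illustration (f(x) = Σ xᵢ³, whose gradient 3x² and Hessian diag(6x) are easy to check by hand):

```python
import jax
import jax.numpy as jnp


def f(x):
    # Toy scalar-valued function: f(x) = sum_i x_i^3
    return jnp.sum(x ** 3)


x = jnp.array([1.0, 2.0, 3.0])

gradient = jax.grad(f)(x)    # reverse mode; analytically 3 * x^2
hess = jax.hessian(f)(x)     # analytically diag(6 * x)

# Forward and reverse mode compose in either order.
hess_fwd_over_rev = jax.jacfwd(jax.jacrev(f))(x)
hess_rev_over_fwd = jax.jacrev(jax.jacfwd(f))(x)

print(gradient)
print(hess)
```

Both compositions yield the same Hessian; which order is cheaper depends on the input and output dimensions of the function being differentiated.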

Of course, JAX also has some shortcomings.

For example:

1. Although JAX is billed as an accelerator, it is not fully optimized for every operation in CPU computation.

2. JAX is still too new and has not formed a complete basic ecosystem like TensorFlow's, so Google has not yet released it as a finished product.

3. The time and cost required for debugging are uncertain, and its "side effects" are not entirely clear.

4. It does not support Windows and can only run there inside a virtual environment.

5. It has no data loader and must borrow one from TensorFlow or PyTorch.

……
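On the "side effects" point, the article gives no details. One commonly cited behavior, shown here as an illustration rather than as what the authors necessarily had in mind, is that Python side effects inside a jitted function run only while JAX traces the function, not on every call. The `trace_log` list and `double` function are hypothetical names for this sketch.

```python
import jax
import jax.numpy as jnp

trace_log = []  # records each time Python actually executes the function body


@jax.jit
def double(x):
    # Python side effect: executed during tracing, not in the compiled code.
    trace_log.append("traced")
    return x * 2


first = double(jnp.array([1.0, 2.0]))
# Same shape and dtype as before, so the cached compiled version is reused
# and the Python body (including the append) does not run again.
second = double(jnp.array([3.0, 4.0]))

print(len(trace_log))
```

This is one reason debugging jitted code can be surprising: a `print` or a mutation of outside state placed in the function body does not fire on every call.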

Even so, the simple, flexible, and easy-to-use JAX first caught on at DeepMind. Deep learning libraries born in 2020, such as Haiku and RLax, were built on it.

That same year, Adam Paszke, one of the original authors of PyTorch, also joined the JAX team full-time.

At present, the JAX open-source project has 18.4k stars on GitHub.

Notably, many voices during this period have suggested that it is likely to replace TensorFlow.

On the one hand this is because of JAX's strengths; on the other, it has a lot to do with TensorFlow's own problems.

Why did Google switch to JAX?

Born in 2015, TensorFlow was once all the rage. Soon after launch it overtook a group of then-fashionable frameworks such as Torch, Theano, and Caffe to become the most popular machine learning framework.

In 2017, however, a revamped PyTorch "made a comeback".

This machine learning library, built by Meta on top of Torch, is easy to pick up and easy to understand. It quickly won favor with many researchers and even showed signs of overtaking TensorFlow.

TensorFlow, by contrast, grew increasingly bloated through frequent updates and API iterations, and gradually lost developers' trust.

(Judging by the share of questions on Stack Overflow, PyTorch has risen year by year while TensorFlow has stagnated.)

In the competition, TensorFlow's shortcomings were gradually exposed. Its unstable API, complex implementation, and steep learning curve were not resolved by updates; instead, its structure became even more complex.

Meanwhile, TensorFlow failed to keep building on strengths such as runtime efficiency.

In academia, PyTorch usage is gradually overtaking TensorFlow's.

At top conferences such as ACL and ICLR in particular, algorithms implemented in PyTorch have accounted for more than 80% in recent years, while TensorFlow usage keeps declining.

That is why Google could no longer sit still and is trying to use JAX to win back "dominance" over machine learning frameworks.

Although JAX is not nominally "a general-purpose framework built for deep learning", Google's resources have been tilting toward JAX ever since its release.

On the one hand, Google Brain and DeepMind are gradually building more libraries on top of JAX.

These include Google Brain's Trax, Flax, and Jax-md, as well as DeepMind's neural network library Haiku and reinforcement learning library RLax, all of which are built on JAX.

According to Google officials:

In developing the JAX ecosystem, care will also be taken to keep its design consistent (as far as possible) with existing TensorFlow libraries such as Sonnet and TRFL.

On the other hand, more projects are starting to be implemented in JAX; the recently viral DALL·E mini is one of them.

Because it makes better use of Google's TPUs, JAX performs much better than PyTorch at runtime, and more industrial projects previously built on TensorFlow are also moving to JAX.

Some netizens even joked about why JAX is taking off now: perhaps TensorFlow users simply could not stand the framework any longer.

So, is there any hope that JAX will replace TensorFlow and become the new force to rival PyTorch?

Which framework do you prefer ?

Overall, many people still stand firmly behind PyTorch.

They do not seem to like the pace at which Google comes up with a new framework every year.

"JAX is attractive, but it is not 'revolutionary' enough to push people to abandon PyTorch for it."

But JAX has no shortage of supporters either.

Some say PyTorch is excellent, but JAX is closing the gap.

Some are even cheering wildly for JAX, claiming it is 10 times more powerful than PyTorch and adding: if Meta does not keep pushing, Google will win. (Said in jest.)

Still, there are always those who do not care who wins or loses and take the long view:

There is no best, only better. What matters most is that more players and good ideas join in, so that open source becomes synonymous with truly great innovation.

Project repository:

https://github.com/google/jax

Reference links:

https://twitter.com/jeremyphoward/status/1538380788324257793

https://twitter.com/ylecun/status/1538419932475555840

https://mp.weixin.qq.com/s/AoygUZK886RClDBnp1v3jw

https://www.deepmind.com/blog/using-jax-to-accelerate-our-research

https://github.com/tensorflow/tensorflow/issues/53549

— End —

Past highlights

It is suitable for beginners to download the route and materials of artificial intelligence ( Image & Text + video ) Introduction to machine learning series download Chinese University Courses 《 machine learning 》( Huang haiguang keynote speaker ) Print materials such as machine learning and in-depth learning notes 《 Statistical learning method 》 Code reproduction album machine learning communication qq Group 955171419, Please scan the code to join wechat group

边栏推荐

- PowerDesigner技巧2 触发器模板

- [popular science] to understand supervised learning, unsupervised learning and reinforcement learning

- 6-35 constructing an ordered linked list

- [ZOJ] P3228 Searching the String

- CISP教材更新:2019年八大知识域新知识体系介绍

- 神经网络训练trick总结

- Attack and defense world web practice area beginner level

- day260:只出现一次的数字 III

- MySQL中from_unixtime和unix_timestamp处理数据库时间戳转换问题-案例

- DAO 的未来:构建 web3 的组织原语

猜你喜欢

Tiktok practice ~ one click registration and login process of mobile phone number (verification code)

软件项目管理 8.3.敏捷项目质量活动

Payment order case construction

IDE 的主题应该用亮色还是暗色?终极答案来了!

Summary and Prospect of AI security technology | community essay solicitation

Quickly master asp Net authentication framework identity - login and logout

APM 飞行模式切换--源码详解

使用pytorch mask-rcnn进行目标检测/分割训练

![[popular science] to understand supervised learning, unsupervised learning and reinforcement learning](/img/24/d26c656135219a38fd64e4d370c9ee.png)

[popular science] to understand supervised learning, unsupervised learning and reinforcement learning

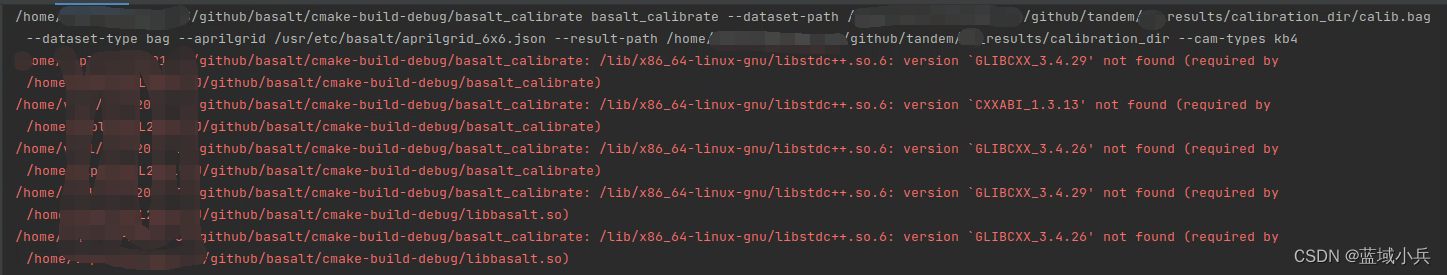

编译basalt时出现的报错

随机推荐

Binary String

[hdu] P1466 计算直线的交点数

PAT甲级 - 1010 Radix(思维+二分)

使用pytorch mask-rcnn进行目标检测/分割训练

[模板] kmp

Pareto's law at work: focus on results, not outputs

Former amd chip architect roast said that the cancellation of K12 processor project was because amd counseled!

Basic knowledge of AI Internet of things | community essay solicitation

Pytorch实现波阻抗反演

传iPhone 14将全系涨价;TikTok美国用户数据转移到甲骨文,字节无法访问;SeaTunnel 2.1.2发布|极客头条...

三个月让软件项目成功“翻身”!

SQLMap-hh

From in MySQL_ Unixtime and UNIX_ Timestamp processing database timestamp conversion - Case

office2016+visio2016

Lock reentrantlock

信息系统项目典型生命周期模型

HDU - 7072 双端队列+对顶

Up the Strip

【深度学习】不得了!新型Attention让模型提速2-4倍!

Tiktok practice ~ personal Center