
What's new in redis4.0 - active memory defragmentation

2022-06-27 05:56:00 【Don't fight with heaven, doctor Jiang】

What is active defragmentation?


Active (online) defragmentation allows a Redis server to compact the spaces left between small allocations and deallocations of data in memory, thus allowing it to reclaim memory.


Fragmentation is a natural process that happens with every allocator (but less so with Jemalloc, fortunately) and certain workloads. Normally a server restart is needed in order to lower the fragmentation, or at least to flush away all the data and create it again. However, thanks to this feature, implemented by Oran Agra for Redis 4.0, this process can happen at runtime in a “hot” way, while the server is running.


Basically, when the fragmentation is over a certain level (see the configuration options below), Redis will start to create new copies of the values in contiguous memory regions by exploiting certain specific Jemalloc features (in order to understand if an allocation is causing fragmentation and to allocate it in a better place), and at the same time will release the old copies of the data. This process, repeated incrementally for all the keys, will cause the fragmentation to drop back to normal values.


Important things to understand:

This feature is disabled by default, and only works if you compiled Redis to use the copy of Jemalloc we ship with the source code of Redis. This is the default with Linux builds.


You never need to enable this feature if you don’t have fragmentation issues.


Once you experience fragmentation, you can enable this feature when needed with the command “CONFIG SET activedefrag yes”.
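The same switch can be flipped from application code. A minimal sketch, assuming redis-py is installed; `enable_active_defrag` is a hypothetical helper, not part of redis-py. If the server was not built with the bundled Jemalloc, the CONFIG SET is expected to be rejected, and redis-py will raise a `ResponseError`.

```python
def enable_active_defrag(client) -> bool:
    """Run CONFIG SET activedefrag yes, then read the value back.

    `client` is assumed to be a redis-py client (redis.Redis / StrictRedis).
    """
    # If the server refuses (e.g. a build without the bundled Jemalloc),
    # redis-py raises redis.exceptions.ResponseError here.
    client.config_set("activedefrag", "yes")
    # redis-py returns CONFIG GET results as a dict, e.g. {"activedefrag": "yes"}
    return client.config_get("activedefrag").get("activedefrag") == "yes"
```

For example, `enable_active_defrag(redis.Redis(host="127.0.0.1", port=7007))` returns True once the setting is active on the test instance.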

The configuration parameters are able to fine tune the behavior of the defragmentation process. If you are not sure about what they mean it is a good idea to leave the defaults untouched.


# Active defragmentation is disabled by default
# activedefrag no

# Minimum amount of fragmentation waste to start active defrag
# active-defrag-ignore-bytes 100mb

# Minimum percentage of fragmentation to start active defrag
# active-defrag-threshold-lower 10

# Maximum percentage of fragmentation at which we use maximum effort
# active-defrag-threshold-upper 100

# Minimal effort for defrag in CPU percentage, to be used when the lower
# threshold is reached
# active-defrag-cycle-min 1

# Maximal effort for defrag in CPU percentage, to be used when the upper
# threshold is reached
# active-defrag-cycle-max 25

# Maximum number of set/hash/zset/list fields that will be processed from
# the main dictionary scan
# active-defrag-max-scan-fields 1000
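How these thresholds interact can be made concrete with a small model. This is a simplified Python sketch based on our reading of Redis's defrag source, not the actual implementation; `DefragConfig` and `defrag_cpu_pct` are hypothetical names, and details may differ between Redis versions. It assumes Redis measures fragmentation as allocator active bytes over allocated bytes (the allocator_frag_ratio discussed later), starts a run only when both `active-defrag-ignore-bytes` and `active-defrag-threshold-lower` are exceeded, and interpolates CPU effort linearly between `active-defrag-cycle-min` and `active-defrag-cycle-max`.

```python
from dataclasses import dataclass


@dataclass
class DefragConfig:
    """Hypothetical container for the redis.conf defaults listed above."""
    ignore_bytes: int = 100 * 1024 * 1024  # active-defrag-ignore-bytes (100mb)
    threshold_lower: float = 10            # active-defrag-threshold-lower (%)
    threshold_upper: float = 100           # active-defrag-threshold-upper (%)
    cycle_min: int = 1                     # active-defrag-cycle-min (% CPU)
    cycle_max: int = 25                    # active-defrag-cycle-max (% CPU)


def defrag_cpu_pct(allocated: int, active: int, cfg: DefragConfig) -> int:
    """Model the CPU effort chosen for a new defrag run (0 = not started)."""
    # External fragmentation as the allocator sees it: active vs. allocated bytes
    frag_pct = active / allocated * 100 - 100
    frag_bytes = active - allocated
    # A run starts only when BOTH the percentage and byte thresholds are exceeded
    if frag_pct < cfg.threshold_lower or frag_bytes < cfg.ignore_bytes:
        return 0
    # At or above the upper threshold, use maximum effort
    if frag_pct >= cfg.threshold_upper:
        return cfg.cycle_max
    # In between, interpolate linearly from cycle_min to cycle_max
    cpu = cfg.cycle_min + (frag_pct - cfg.threshold_lower) * (
        cfg.cycle_max - cfg.cycle_min) / (cfg.threshold_upper - cfg.threshold_lower)
    # int() truncates like the C assignment; clamp to [cycle_min, cycle_max]
    return max(cfg.cycle_min, min(cfg.cycle_max, int(cpu)))
```

With the test configuration used in this post (lower 2, upper 150, cycle-min 1, cycle-max 25), an allocator fragmentation ratio of 1.14 maps to about 2% CPU. As far as we can tell, the real code also skips the start check while a run is already in progress and only ever raises the effort within a run; the model leaves that out.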

Test defragmentation

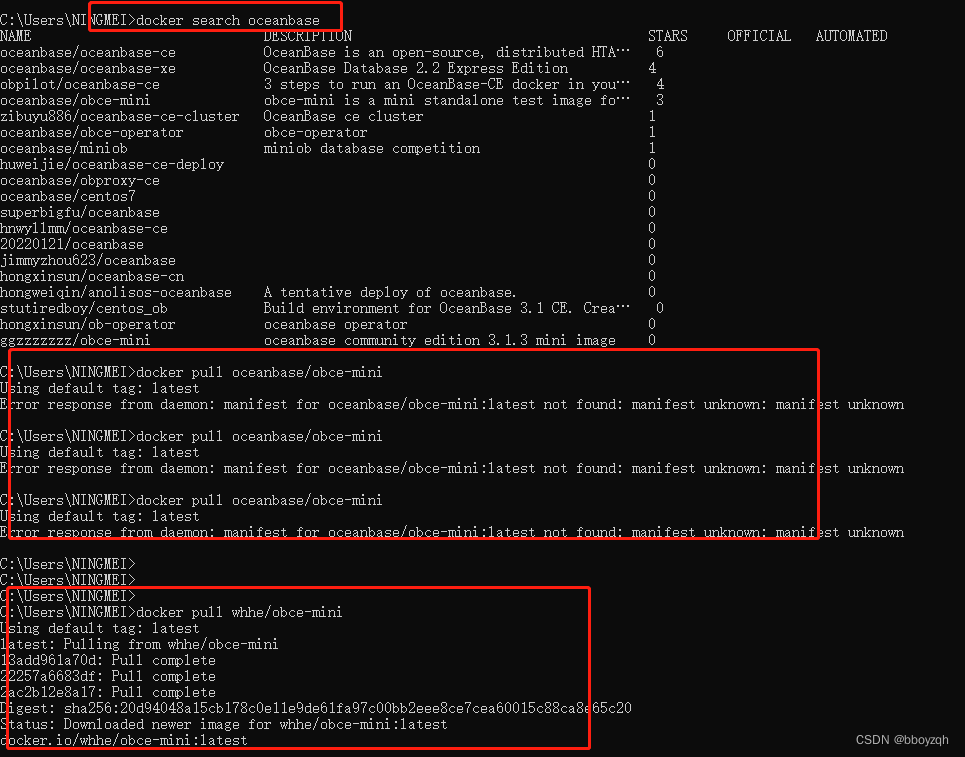

Create a Redis instance with the following configuration:

redis-standalone.conf

port 7007
bind 0.0.0.0
daemonize yes
logfile "/opt/cachecloud/logs/redis-standalone-7007.log"
dir "/opt/cachecloud/data"
dbfilename "dump_7007.rdb"

# Enable active defragmentation
activedefrag yes
active-defrag-ignore-bytes 10mb
active-defrag-threshold-lower 2
active-defrag-threshold-upper 150
active-defrag-cycle-min 1
active-defrag-cycle-max 25
active-defrag-max-scan-fields 1000

Start the Redis instance:

redis-server /opt/cachecloud/conf/redis-standalone.conf

Insert a lot of data
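The post does not show how the test data was written. Purely as an illustration, here is a hypothetical sketch that fills the instance with keys carrying the `dmntest1` prefix that the deletion script later matches; the key format, key count and value size are placeholders, not the author's values, and `populate` assumes a redis-py client.

```python
import os
from typing import Iterator, List, Tuple


def make_batches(prefix: str, total: int, batch_size: int,
                 value_size: int) -> Iterator[List[Tuple[str, bytes]]]:
    """Yield lists of (key, value) pairs; keys look like '<prefix>:<n>'."""
    batch: List[Tuple[str, bytes]] = []
    for i in range(total):
        # Random payload so the values are not all identical
        batch.append((f"{prefix}:{i}", os.urandom(value_size)))
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def populate(client, prefix: str = "dmntest1", total: int = 1_000_000,
             batch_size: int = 1000, value_size: int = 256) -> None:
    """Write `total` keys through non-transactional redis-py pipelines."""
    for batch in make_batches(prefix, total, batch_size, value_size):
        pipe = client.pipeline(transaction=False)
        for key, value in batch:
            pipe.set(key, value)
        pipe.execute()
```

For example, `populate(redis.Redis(host="127.0.0.1", port=7007))` would write one million 256-byte values in batches of 1000.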

========== Memory usage after inserting a large amount of data ==========

redis-cli -p 7007 info memory
# Memory
used_memory:545047960
used_memory_human:519.80M
used_memory_rss:563924992
used_memory_rss_human:537.80M
allocator_frag_ratio:1.00
allocator_frag_bytes:161464
mem_fragmentation_ratio:1.03
mem_fragmentation_bytes:18938936
active_defrag_running:0

redis-cli -p 7007 memory stats
37) "allocator-fragmentation.ratio"
38) "1.0003455877304077"
39) "allocator-fragmentation.bytes"
40) (integer) 188344
49) "fragmentation"
50) "1.0352247953414917"
51) "fragmentation.bytes"
52) (integer) 19196984

Run a Python script to delete the data in batches:

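import traceback

import redis

# Connect to the test instance (password=None means no AUTH is sent)
client = redis.StrictRedis(host="10.4.7.104", port=7007, password=None)

pattern = "dmntest1*"  # keys created during the insert step
count = 1000           # hint for how many keys SCAN returns per call

cur = 0
while True:
    # SCAN returns the next cursor and one batch of matching keys
    cur, data = client.scan(cursor=cur, match=pattern, count=count)
    try:
        # Queue one DEL per key and send the whole batch in one round trip
        p = client.pipeline()
        for item in data:
            print("==>", item.decode())
            p.delete(item)
        p.execute()
        print("==>", cur)
    except Exception:
        print(traceback.format_exc())
    # A returned cursor of 0 means the iteration over the keyspace is complete
    if cur == 0:
        break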
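As an alternative to the hand-written cursor loop, redis-py's `scan_iter` can drive SCAN for you. The sketch below also swaps DEL for UNLINK (available since Redis 4.0), which removes keys immediately but frees their memory in a background thread; that is a different technique from the original script and may change how quickly the fragmentation numbers move. The helper names `chunked` and `delete_matching` are hypothetical.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def chunked(items: Iterable, size: int) -> Iterator[List]:
    """Split an iterable into lists of at most `size` elements."""
    it = iter(items)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def delete_matching(client, pattern: str, batch: int = 1000) -> int:
    """Delete every key matching `pattern`; return how many keys were removed."""
    removed = 0
    # scan_iter keeps calling SCAN until the server returns cursor 0
    for keys in chunked(client.scan_iter(match=pattern, count=batch), batch):
        # UNLINK returns the number of keys that actually existed
        removed += client.unlink(*keys)
    return removed
```

For example, `delete_matching(redis.Redis(host="10.4.7.104", port=7007), "dmntest1*")` would clear the test keys.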

=============== Memory usage during data cleanup ===============

redis-cli -p 7007 info memory
# Memory
used_memory:478123936
used_memory_human:455.97M
used_memory_rss:565260288
used_memory_rss_human:539.07M
allocator_frag_ratio:1.14
allocator_frag_bytes:65979840
active_defrag_running:2

redis-cli -p 7007 memory stats
37) "allocator-fragmentation.ratio"
38) "1.213597297668457"
39) "allocator-fragmentation.bytes"
40) (integer) 95918528
49) "fragmentation"
50) "1.2589750289916992"
51) "fragmentation.bytes"
52) (integer) 116249680

redis-cli -p 7007 info memory
# Memory
used_memory:375470976
used_memory_human:358.08M
used_memory_rss:564625408
used_memory_rss_human:538.47M
mem_fragmentation_ratio:1.50
mem_fragmentation_bytes:187056320
active_defrag_running:7

redis-cli -p 7007 memory stats
37) "allocator-fragmentation.ratio"
38) "1.5605411529541016"
39) "allocator-fragmentation.bytes"
40) (integer) 191159088
49) "fragmentation"
50) "1.6560860872268677"
51) "fragmentation.bytes"
52) (integer) 223619224

redis-cli -p 7007 info memory
# Memory
used_memory:265272096
used_memory_human:252.98M
used_memory_rss:557862912
used_memory_rss_human:532.02M
allocator_frag_ratio:1.76
allocator_frag_bytes:204627600
mem_fragmentation_ratio:2.09
mem_fragmentation_bytes:290492704
active_defrag_running:12

redis-cli -p 7007 memory stats
37) "allocator-fragmentation.ratio"
38) "1.5875883102416992"
39) "allocator-fragmentation.bytes"
40) (integer) 113410912
49) "fragmentation"
50) "2.6743261814117432"
51) "fragmentation.bytes"
52) (integer) 322839920

redis-cli -p 7007 info memory
# Memory
used_memory:915584
used_memory_human:894.12K
used_memory_rss:145321984
used_memory_rss_human:138.59M
mem_fragmentation_ratio:170.23
mem_fragmentation_bytes:144468304
mem_allocator:jemalloc-5.1.0
active_defrag_running:17

redis-cli -p 7007 memory stats
35) "allocator-fragmentation.ratio"
36) "1.2425054311752319"
37) "allocator-fragmentation.bytes"
38) (integer) 215048
47) "fragmentation"
48) "170.23004150390625"
49) "fragmentation.bytes"
50) (integer) 144468304

=============== Memory usage after data cleanup ===============

redis-cli -p 7007 info memory
# Memory
used_memory:853688
used_memory_human:833.68K
used_memory_rss:151199744
used_memory_rss_human:144.20M
used_memory_peak:545047968
allocator_frag_ratio:1.23
allocator_frag_bytes:190568
mem_fragmentation_ratio:190.96
mem_fragmentation_bytes:150407960
active_defrag_running:0

redis-cli -p 7007 memory stats
35) "allocator-fragmentation.ratio"
36) "1.2568612098693848"
37) "allocator-fragmentation.bytes"
38) (integer) 218480
47) "fragmentation"
48) "190.96084594726562"
49) "fragmentation.bytes"
50) (integer) 150407960

The info memory and memory stats output collected during the cleanup shows that active defragmentation was triggered while the data was being deleted: active_defrag_running climbed from 2 to 17, meaning defragmentation was allowed to use up to about 17% of CPU. The changes in mem_fragmentation_bytes point the same way: as a large amount of data was deleted, fragmentation pushed mem_fragmentation_bytes up (from 18,938,936 to 290,492,704 bytes), and after active defragmentation the value became smaller again (144,468,304 bytes).
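To watch these numbers change while the deletion runs, rather than issuing INFO by hand, a small polling helper can record the fields shown in the samples above. This is a hypothetical sketch that assumes a redis-py client, whose `info("memory")` call returns the section as a dict; fields missing from a given Redis version are recorded as None.

```python
import time
from typing import Dict, List

FIELDS = ("used_memory", "used_memory_rss", "mem_fragmentation_ratio",
          "mem_fragmentation_bytes", "allocator_frag_ratio",
          "active_defrag_running")


def sample_defrag(client, samples: int = 10, interval: float = 1.0) -> List[Dict]:
    """Poll INFO memory `samples` times, keeping only the fields of interest."""
    history = []
    for i in range(samples):
        info = client.info("memory")  # redis-py parses INFO into a dict
        history.append({f: info.get(f) for f in FIELDS})
        if i < samples - 1:
            time.sleep(interval)
    return history
```

Running it in a second terminal while the deletion script works shows active_defrag_running rising and falling without copying INFO output by hand.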

In addition, several related fields returned by the INFO command need some explanation:

mem_fragmentation_ratio: the ratio between used_memory_rss (the memory Redis has obtained from the operating system) and used_memory (the memory Redis has allocated for data). It does not measure fragmentation alone: it also includes other process overheads (see the allocator_* metrics) and overheads such as code, shared libraries and the stack.

mem_fragmentation_bytes: the difference between used_memory_rss and used_memory. Note that when the fragmentation bytes are low (a few megabytes), a high ratio is not a sign of a problem.

allocator_allocated: total bytes allocated by the allocator, including internal fragmentation. Normally the same as used_memory.

allocator_active: total bytes in the allocator's active pages, including external fragmentation.

allocator_resident: total bytes resident (RSS) in the allocator, including pages that can be released to the operating system (with the MEMORY PURGE command, or just by waiting).

allocator_frag_ratio: the ratio between allocator_active and allocator_allocated. This, not mem_fragmentation_ratio, is the true (external) fragmentation metric.

active_defrag_running: when activedefrag is enabled, this indicates whether defragmentation is currently running, and the CPU percentage it intends to use.
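To check these definitions against raw `redis-cli info memory` output, the sketch below parses the text format shown in this post and recomputes the two ratios from their byte counters. The function names are hypothetical, and since Redis samples RSS periodically in the background, a recomputed ratio may differ slightly from the one Redis reports.

```python
from typing import Dict, Optional, Union

Number = Union[int, float, str]


def parse_info(text: str) -> Dict[str, Number]:
    """Parse `redis-cli info memory` output into a dict (numbers converted)."""
    result: Dict[str, Number] = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blanks, section headers such as "# Memory", and non-field lines
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, _, value = line.partition(":")
        for conv in (int, float):
            try:
                result[key] = conv(value)
                break
            except ValueError:
                continue
        else:
            result[key] = value  # e.g. "519.80M" or "jemalloc-5.1.0"
    return result


def fragmentation(info: Dict[str, Number]) -> Dict[str, Optional[float]]:
    """Recompute the two ratios from raw byte counters (None if unavailable)."""
    def ratio(num: str, den: str) -> Optional[float]:
        if num in info and den in info and info[den]:
            return info[num] / info[den]
        return None

    return {
        # RSS / used: includes allocator overhead, code, shared libraries, etc.
        "rss_over_used": ratio("used_memory_rss", "used_memory"),
        # active / allocated: the external-fragmentation metric
        "allocator_frag": ratio("allocator_active", "allocator_allocated"),
    }
```

On the first sample in this post (used_memory 545047960, used_memory_rss 563924992), `rss_over_used` comes out at about 1.03, the same as the reported mem_fragmentation_ratio.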
