
[CUDA study notes] What is GPU computing

2022-08-05 13:58:00 【Pastry chef learns AI】

Series Article Directory

Chapter 1 Getting to Know CUDA

Article table of contents

- Foreword

- I. CPU architecture vs GPU architecture

- II. What is GPU computing

- III. Why use GPU computing

- IV. Division of labor and cooperation between CPU and GPU

- V. GPU computing architecture

- VI. Program structure

- VII. Language selection

- VIII. Compiler

- IX. CUDA tools

- X. Sample program


Foreword

These are my study notes from a CUDA course, written mainly so that I can review them later. I would be glad if they help you too. I am a CUDA beginner myself, so please point out any mistakes. If any content infringes on your rights, please contact me and I will remove it.

I. CPU architecture vs GPU architecture

CPU Architecture

GPU Architecture

Three GPUs are shown below as examples. In general, a GPU is organized in three levels, GPU-SM-SP: a GPU contains multiple SMs, and each SM contains many SPs.

SM: Streaming Multiprocessor

SP: Streaming Processor (also called a CUDA core)

1. Early GPU (C2050):

2. Fermi architecture:

3. Newer GPUs:
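The GPU-SM-SP hierarchy can also be inspected on a real card. The sketch below is a minimal example, assuming a CUDA-capable GPU and the CUDA runtime API; the helper names `print_device_info` and `print_all_devices` are hypothetical. `cudaGetDeviceProperties` reports the number of SMs directly (`multiProcessorCount`), but not the number of SPs per SM: that figure depends on the compute capability (`major.minor`) and has to be looked up in NVIDIA's documentation.

```cuda
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: prints the SM-level layout of one GPU and returns
// its number of streaming multiprocessors (SMs), or -1 on error.
int print_device_info(int device) {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, device) != cudaSuccess) {
        return -1;
    }
    printf("GPU %d: %s\n", device, prop.name);
    printf("  compute capability : %d.%d\n", prop.major, prop.minor);
    printf("  SM count           : %d\n", prop.multiProcessorCount);
    printf("  max threads per SM : %d\n", prop.maxThreadsPerMultiProcessor);
    printf("  warp size          : %d\n", prop.warpSize);
    printf("  global memory (MiB): %zu\n", prop.totalGlobalMem >> 20);
    return prop.multiProcessorCount;
}

// Hypothetical helper: prints every visible GPU and returns how many exist.
int print_all_devices() {
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess) {
        return 0;  // no driver or no CUDA-capable device
    }
    for (int d = 0; d < count; ++d) {
        int sms = print_device_info(d);
        assert(sms > 0);  // a working GPU always has at least one SM
    }
    return count;
}
```

Calling `print_all_devices()` from `main` prints one group of lines per visible GPU; the numbers depend entirely on the installed hardware.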

II. What is GPU computing:

- NVIDIA released CUDA, a general-purpose parallel computing platform and programming model built on NVIDIA GPUs. With CUDA, the GPU's parallel computing engine can be used to solve complex computational problems more efficiently.

- The GPU is not a standalone computing platform: it works together with the CPU and can be regarded as the CPU's co-processor. So when we speak of GPU parallel computing, we really mean heterogeneous computing built on CPU+GPU. The CPU controls the overall structure and logic of the program, while the GPU acts as a co-processing compute module that speeds up the program as a whole.

- In this heterogeneous architecture, the GPU and CPU are connected by the PCIe bus and work together through it. PCIe bandwidth can reach 16 GB/s or 32 GB/s (roughly a PCIe 3.0 x16 and a PCIe 4.0 x16 link, per direction).

- The CPU side is called the host, and the GPU side is called the device.
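Because host and device have separate memories, data must be copied across PCIe explicitly, and that copy is often a bottleneck. As a rough check of the bus speed, the following sketch times a single host-to-device `cudaMemcpy` with CUDA events. It assumes a CUDA-capable GPU; `measure_h2d_bandwidth` is a hypothetical helper, and pinned host memory (`cudaMallocHost`) is used because pageable memory usually transfers more slowly. The measured number depends on the PCIe generation and link width, so no particular result is implied.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// Hypothetical helper: times one host-to-device copy of `bytes` bytes and
// returns the bandwidth in GB/s (1e9 bytes per second), or -1.0 on error.
double measure_h2d_bandwidth(size_t bytes) {
    assert(bytes > 0);
    void* h_buf = nullptr;  // host memory (CPU RAM), page-locked
    void* d_buf = nullptr;  // device memory (GPU RAM)
    if (cudaMallocHost(&h_buf, bytes) != cudaSuccess) return -1.0;
    if (cudaMalloc(&d_buf, bytes) != cudaSuccess) {
        cudaFreeHost(h_buf);
        return -1.0;
    }

    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);

    cudaEventRecord(start);
    cudaMemcpy(d_buf, h_buf, bytes, cudaMemcpyHostToDevice);  // travels over PCIe
    cudaEventRecord(stop);
    cudaEventSynchronize(stop);  // wait until the copy has finished

    float ms = 0.0f;
    cudaEventElapsedTime(&ms, start, stop);

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    cudaFree(d_buf);
    cudaFreeHost(h_buf);

    return (double)bytes / (ms * 1.0e-3) / 1.0e9;
}
```

For example, `printf("%.1f GB/s\n", measure_h2d_bandwidth(256u << 20));` copies 256 MiB once. A single timed copy is noisy; averaging several runs would give a steadier figure.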

III. Why use GPU computing:

- The GPU's powerful parallel computing engine can greatly speed up computation, for example by about 15 times on suitable workloads.

- Supercomputers such as Tianhe and Summit use accelerators.

- Machine learning and artificial intelligence require training models, which takes a great deal of computation, especially dense matrix and vector operations; here GPUs can be more than ten times faster.

- One of the most successful applications of GPUs is deep learning: GPU-based parallel computing has become the standard way to train deep learning models.
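Dense matrix multiplication is the classic example of these workloads. Below is a minimal naive CUDA kernel, not an optimized one, in which each thread computes one element of C = A × B; the helper `matmul_matches_cpu` is hypothetical and only checks the GPU result against a serial CPU loop. It assumes a CUDA-capable GPU and makes no speedup claim: for performance, a tuned library routine such as cuBLAS `cublasSgemm` would normally be used instead.

```cuda
#include <cassert>
#include <cmath>
#include <vector>
#include <cuda_runtime.h>

// Naive dense product C = A * B for n x n row-major matrices.
// Each thread computes one element C[row][col]; no tiling or shared memory.
__global__ void matmul_naive(const float* A, const float* B, float* C, int n) {
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    if (row < n && col < n) {
        float sum = 0.0f;
        for (int k = 0; k < n; ++k) {
            sum += A[row * n + k] * B[k * n + col];
        }
        C[row * n + col] = sum;
    }
}

// Hypothetical helper: multiplies on the GPU and compares with a CPU loop.
// Returns true if every element agrees within a small tolerance.
bool matmul_matches_cpu(int n) {
    assert(n > 0);
    size_t count = (size_t)n * n;
    size_t bytes = count * sizeof(float);
    std::vector<float> A(count), B(count), C_gpu(count), C_cpu(count);
    for (size_t i = 0; i < count; ++i) {
        A[i] = (float)(i % 7) * 0.5f;
        B[i] = (float)(i % 5) - 2.0f;
    }

    float *dA, *dB, *dC;
    cudaMalloc(&dA, bytes);
    cudaMalloc(&dB, bytes);
    cudaMalloc(&dC, bytes);
    cudaMemcpy(dA, A.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, B.data(), bytes, cudaMemcpyHostToDevice);

    // 16 x 16 threads per block; round the grid up so every element is covered.
    dim3 block(16, 16);
    dim3 grid((n + block.x - 1) / block.x, (n + block.y - 1) / block.y);
    matmul_naive<<<grid, block>>>(dA, dB, dC, n);
    cudaMemcpy(C_gpu.data(), dC, bytes, cudaMemcpyDeviceToHost);  // waits for the kernel

    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);

    // Reference result computed serially on the CPU.
    for (int r = 0; r < n; ++r) {
        for (int c = 0; c < n; ++c) {
            float sum = 0.0f;
            for (int k = 0; k < n; ++k) sum += A[r * n + k] * B[k * n + c];
            C_cpu[r * n + c] = sum;
        }
    }

    for (size_t i = 0; i < count; ++i) {
        if (std::fabs(C_gpu[i] - C_cpu[i]) > 1e-3f * (1.0f + std::fabs(C_cpu[i]))) {
            return false;
        }
    }
    return true;
}
```

The inputs are small multiples of 0.5, so every product and partial sum is exactly representable in `float` and the two results should agree; with arbitrary data, the tolerance in the final comparison matters.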

IV. Division of labor and cooperation between CPU and GPU:

- The GPU has many more compute cores and is especially suited to data-parallel, compute-intensive tasks such as large-scale matrix operations.

- The CPU has fewer compute cores but can handle complex logic, so it suits control-intensive tasks.

- Threads on the CPU are heavyweight, and context switching between them is expensive.

- Threads on the GPU are lightweight, which suits a processor with very many cores.

- A heterogeneous CPU+GPU platform lets each side play to its strengths: the CPU runs the serial, logic-heavy parts of the program, while the GPU handles the data-intensive parallel parts, maximizing overall efficiency.
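This division of labor can be sketched as a CPU-driven loop around a GPU kernel. In this hypothetical example (`relax_step` and `relax_until_converged` are illustrative names), the GPU applies the element-wise update x[i] = 0.5 * x[i] + 1 to the whole array in parallel, and the CPU runs the serial control logic: it measures the error and decides whether to launch another step. It assumes a CUDA-capable GPU. Copying the whole array back on every iteration keeps the example short; real code would typically compute the error on the GPU as well.

```cuda
#include <cassert>
#include <cmath>
#include <vector>
#include <cuda_runtime.h>

// Data-parallel step (GPU): every element is updated independently,
// x[i] <- 0.5 * x[i] + 1, which converges to the fixed point 2.
// The grid-stride loop lets a fixed-size grid cover any n.
__global__ void relax_step(float* x, int n) {
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n;
         i += gridDim.x * blockDim.x) {
        x[i] = 0.5f * x[i] + 1.0f;
    }
}

// Control logic (CPU): decides when to stop. Hypothetical helper that
// returns the number of GPU steps performed; h_x receives the final values.
int relax_until_converged(std::vector<float>& h_x, float tol, int max_iters) {
    int n = (int)h_x.size();
    assert(n > 0 && max_iters > 0);
    size_t bytes = n * sizeof(float);
    float* d_x = nullptr;
    cudaMalloc(&d_x, bytes);
    cudaMemcpy(d_x, h_x.data(), bytes, cudaMemcpyHostToDevice);

    int iters = 0;
    float worst = 0.0f;
    do {
        relax_step<<<128, 256>>>(d_x, n);  // parallel work on the GPU
        ++iters;
        // Serial decision on the CPU: copy back and find the largest error.
        cudaMemcpy(h_x.data(), d_x, bytes, cudaMemcpyDeviceToHost);
        worst = 0.0f;
        for (float v : h_x) worst = std::fmax(worst, std::fabs(v - 2.0f));
    } while (worst > tol && iters < max_iters);

    cudaFree(d_x);
    return iters;
}
```

Starting from all zeros, the distance to 2 halves with every step (1 after the first, 0.5 after the second, and so on), so with `tol = 1e-4f` the loop stops after 15 iterations.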

V. GPU computing architecture:
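On the software side, a kernel is launched as a grid of thread blocks; each block is scheduled onto one SM, and its threads run on that SM's cores (SPs). The sketch below, assuming a CUDA-capable GPU, has each thread record its block index and its global index `blockIdx.x * blockDim.x + threadIdx.x`; the names `record_ids` and `ids_are_consistent` are hypothetical.

```cuda
#include <cassert>
#include <vector>
#include <cuda_runtime.h>

// Each thread records which block it belongs to and its global index.
__global__ void record_ids(int* block_of, int* global_id, int n) {
    int gid = blockIdx.x * blockDim.x + threadIdx.x;  // unique across the grid
    if (gid < n) {  // the last block may have spare threads
        block_of[gid] = blockIdx.x;
        global_id[gid] = gid;
    }
}

// Hypothetical helper: launches ceil(n / threads_per_block) blocks and checks
// that element i was written by global thread i of block i / threads_per_block.
bool ids_are_consistent(int n, int threads_per_block) {
    assert(n > 0 && threads_per_block > 0 && threads_per_block <= 1024);
    int blocks = (n + threads_per_block - 1) / threads_per_block;
    int *d_block, *d_gid;
    cudaMalloc(&d_block, n * sizeof(int));
    cudaMalloc(&d_gid, n * sizeof(int));
    record_ids<<<blocks, threads_per_block>>>(d_block, d_gid, n);

    std::vector<int> h_block(n), h_gid(n);
    cudaMemcpy(h_block.data(), d_block, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaMemcpy(h_gid.data(), d_gid, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d_block);
    cudaFree(d_gid);

    for (int i = 0; i < n; ++i) {
        if (h_gid[i] != i || h_block[i] != i / threads_per_block) return false;
    }
    return true;
}
```

The block size is a free choice up to 1024 threads; the hardware, not the program, decides which SM runs each block.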

VI. Program structure:
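A typical CUDA C/C++ program follows the same few steps: prepare data on the host, allocate device memory, copy inputs to the device, launch a kernel, copy results back, and free device memory. The vector addition below is a minimal sketch of that structure, assuming a CUDA-capable GPU; `vec_add` and `run_vec_add` are hypothetical names.

```cuda
#include <cassert>
#include <vector>
#include <cuda_runtime.h>

// Device code: one thread per element.
__global__ void vec_add(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Host code following the usual structure of a CUDA program.
// Hypothetical helper; returns true if the GPU result is correct.
bool run_vec_add(int n) {
    assert(n > 0);
    size_t bytes = n * sizeof(float);

    // 1. Prepare input data in host memory.
    std::vector<float> h_a(n), h_b(n), h_c(n);
    for (int i = 0; i < n; ++i) {
        h_a[i] = (float)i;
        h_b[i] = 2.0f * i;
    }

    // 2. Allocate device memory.
    float *d_a, *d_b, *d_c;
    cudaMalloc(&d_a, bytes);
    cudaMalloc(&d_b, bytes);
    cudaMalloc(&d_c, bytes);

    // 3. Copy inputs host -> device.
    cudaMemcpy(d_a, h_a.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_b, h_b.data(), bytes, cudaMemcpyHostToDevice);

    // 4. Launch the kernel with enough blocks to cover all n elements.
    int threads = 256;
    int blocks = (n + threads - 1) / threads;
    vec_add<<<blocks, threads>>>(d_a, d_b, d_c, n);
    bool ok = (cudaGetLastError() == cudaSuccess);  // catches launch errors

    // 5. Copy the result device -> host (this also waits for the kernel).
    cudaMemcpy(h_c.data(), d_c, bytes, cudaMemcpyDeviceToHost);

    // 6. Free device memory.
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_c);

    // Check on the host: a[i] + b[i] = i + 2i = 3i.
    for (int i = 0; ok && i < n; ++i) {
        ok = (h_c[i] == 3.0f * i);
    }
    return ok;
}
```

The `<<<blocks, threads>>>` launch syntax is a CUDA extension to C++; the rounded-up block count together with the `if (i < n)` guard handles sizes that are not a multiple of 256.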

VII. Language selection:

- CUDA is a GPU programming model developed by NVIDIA. It provides a simple interface for GPU programming, on which GPU-accelerated applications can be built.

- CUDA supports several programming languages, such as C/C++, Python, and Fortran. Here we use the CUDA C/C++ interface to explain CUDA programming.

VIII. Compiler:

- CUDA: NVIDIA's nvcc compiler, from the latest CUDA Toolkit

- OS: Linux (Ubuntu)

- Advantages of Linux:

  - Easy to write build scripts such as Makefiles;

  - Many command-line tools to work with;

  - Lightweight operating environment;

  - Free.
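Once the toolkit is installed on Linux, a small program can confirm which nvcc, runtime and driver versions are present. This is a sketch assuming the CUDA Toolkit is installed with `nvcc` on the `PATH`; the file name and `report_cuda_versions` are hypothetical. `__CUDACC_VER_MAJOR__` and `__CUDACC_VER_MINOR__` are macros defined by nvcc, and `cudaRuntimeGetVersion` and `cudaDriverGetVersion` are CUDA runtime API calls.

```cuda
// Example build on Linux (the file name is only an example):
//   nvcc -o version_check version_check.cu
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: prints the nvcc, runtime and driver versions and
// returns the runtime version, encoded as 1000 * major + 10 * minor
// (so 12040 would mean CUDA 12.4).
int report_cuda_versions() {
    // These macros are defined by nvcc when it compiles the file.
    printf("nvcc         : %d.%d\n", __CUDACC_VER_MAJOR__, __CUDACC_VER_MINOR__);

    int runtime = 0;
    int driver = 0;
    cudaError_t err = cudaRuntimeGetVersion(&runtime);
    assert(err == cudaSuccess);
    err = cudaDriverGetVersion(&driver);  // reports 0 if no driver is installed
    assert(err == cudaSuccess);
    (void)err;  // silence the unused-variable warning when NDEBUG is set

    printf("CUDA runtime : %d.%d\n", runtime / 1000, (runtime % 1000) / 10);
    printf("CUDA driver  : %d.%d\n", driver / 1000, (driver % 1000) / 10);
    return runtime;
}
```

The same `nvcc` command line is what a Makefile rule for a `.cu` file would typically wrap.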

IX. CUDA tools:

- Compiler: nvcc (C/C++)

- Debugger: cuda-gdb

- Performance analysis: Nsight, nvprof

- Libraries: cuBLAS, NVBLAS, cuSOLVER, cuFFTW, cuSPARSE, nvGRAPH
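As an example of using one of these libraries, the following sketch computes SAXPY (y = alpha * x + y) with cuBLAS instead of a hand-written kernel. It assumes a CUDA-capable GPU and linking with `-lcublas`; `cublas_saxpy` is a hypothetical wrapper, while `cublasCreate`, `cublasSaxpy` and `cublasDestroy` belong to the cuBLAS v2 API declared in `cublas_v2.h`.

```cuda
// Example build: nvcc saxpy_cublas.cu -lcublas
#include <cassert>
#include <vector>
#include <cublas_v2.h>
#include <cuda_runtime.h>

// Hypothetical wrapper: computes y <- alpha * x + y with cuBLAS.
// Returns false if any cuBLAS call fails (y is then left unchanged).
bool cublas_saxpy(float alpha, const std::vector<float>& x, std::vector<float>& y) {
    assert(!x.empty() && x.size() == y.size());
    int n = (int)x.size();
    size_t bytes = n * sizeof(float);

    float *d_x, *d_y;
    cudaMalloc(&d_x, bytes);
    cudaMalloc(&d_y, bytes);
    cudaMemcpy(d_x, x.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_y, y.data(), bytes, cudaMemcpyHostToDevice);

    cublasHandle_t handle;
    bool ok = (cublasCreate(&handle) == CUBLAS_STATUS_SUCCESS);
    if (ok) {
        // alpha is read from host memory (cuBLAS's default pointer mode);
        // 1, 1 are the strides of x and y.
        ok = (cublasSaxpy(handle, n, &alpha, d_x, 1, d_y, 1) == CUBLAS_STATUS_SUCCESS);
        cublasDestroy(handle);
    }
    if (ok) {
        cudaMemcpy(y.data(), d_y, bytes, cudaMemcpyDeviceToHost);
    }

    cudaFree(d_x);
    cudaFree(d_y);
    return ok;
}
```

For example, with x = {1, 2, 3}, y = {10, 20, 30} and alpha = 2, `y` becomes {12, 24, 36}.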

X. Sample program:

- https://github.com/huiscliu/tutorials

- Environment: Linux, Mac OS X

边栏推荐

- LeetCode高频题69. x 的平方根,二分法搞定,非常简单

- 十四、正则表达式 - 章节课后练习题及答案

- Wireshark的工具下载

- LeetCode刷题攻略

- The memory problem is difficult to locate, that's because you don't use ASAN

- ‘proxy‘ config is set properly,see“npm help config“

- 伙伴分配器的内核实现

- 国密是什么意思?属于商密还是普密?

- 图扑软件数字孪生油气管道站,搭建油气运输管控平台

- DSPE-PEG-Silane,DSPE-PEG-SIL,磷脂-聚乙二醇-硅烷可修饰材料表面

猜你喜欢

随机推荐

Unity相机漫游脚本

day4·模块、包与矩阵列表变换

pandas连接oracle数据库并拉取表中数据到dataframe中、筛选当前时间(sysdate)到一分钟之前的所有数据(筛选一分钟之内的数据)

DonkeyCar source code reading .4 (project file creation)

IEEE Fellow 徐鹰:AI 生命科学的 30 年快意人生

思科交换机命令大全,含巡检命令,网工建议收藏!

选择排名靠前的期货公司开户

第五讲 测试技术与用例设计

施耐德电气庞邢健:以软件撬动可持续的未来工业

BufferedReader和BufferedWriter

用脚本启动和关闭jar包或者war包

R语言拟合ARIMA模型:使用forecast包中的auto.arima函数自动搜索最佳参数组合、模型阶数(p,d,q)

踩坑了!mysql明明加了唯一索引,还是产生了重复数据

leetcode 240. Search a 2D Matrix II 搜索二维矩阵 II(中等)

内存管理架构及虚拟地址空间布局

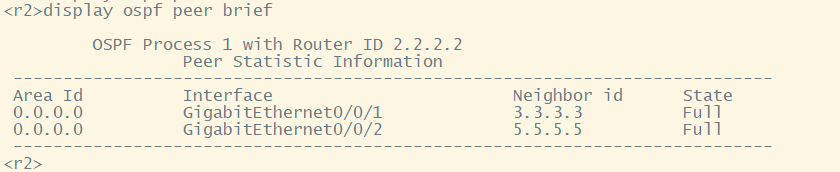

华为设备Smart Link和Monitor Link配置命令

C# employee attendance management system source code attendance salary management system source code

‘proxy‘ config is set properly,see“npm help config“

npm install时卡在sill idealTree buildDeps

期货开户公司的选择和作用