当前位置:网站首页>强化學習——策略梯度理解點

强化學習——策略梯度理解點

2022-07-23 14:10:00 【小陳phd】

策略梯度計算公式

目的是最大化reward函數,即調整 θ ,使得期望回報最大,可以用公式錶示如下

J ( θ ) = E τ ∼ p ( T ) [ ∑ t r ( s t , a t ) ] \mathrm{J}(\theta)=\mathrm{E}_{\tau \sim p}(\mathcal{T})\left[\sum_{\mathrm{t}} \mathrm{r}\left(\mathrm{s}_{t}, \mathrm{a}_{\mathrm{t}}\right)\right] J(θ)=Eτ∼p(T)[t∑r(st,at)]

對於上面的式子, τ \tau τ 錶示從從開始到結束的一條完整路徑。通常,對於最大化問題,我們可以使用梯度上昇算法來找到最大值,即

θ ∗ = θ + α ∇ J ( θ ) \theta^{*}=\theta+\alpha \nabla \mathrm{J}(\theta) θ∗=θ+α∇J(θ)

所以我們僅僅需要計算 (更新) ∇ J ( θ ) \nabla J(\theta) ∇J(θ) ,也就是計算回報函數 J ( θ ) J(\theta) J(θ) 關於 θ \theta θ 的梯度,也就是策略梯度,計算方法如下:

∇ θ J ( θ ) = ∫ ∇ θ p θ ( τ ) r ( τ ) d τ = ∫ p θ ∇ θ log p θ ( τ ) r ( τ ) d τ = E τ ∼ p θ ( τ ) [ ∇ θ log p θ ( τ ) r ( τ ) ] \begin{aligned} \nabla_{\theta} \mathrm{J}(\theta) &=\int \nabla_{\theta \mathrm{p}_{\theta}}(\tau) \mathrm{r}(\tau) \mathrm{d}_{\tau} \\ &=\int \mathrm{p}_{\theta} \nabla_{\theta} \log \mathrm{p}_{\theta}(\tau) \mathrm{r}(\tau) \mathrm{d}_{\tau} \\ &=\mathrm{E}_{\tau \sim \mathrm{p} \theta(\tau)}\left[\nabla_{\theta} \log \mathrm{p}_{\theta}(\tau) \mathrm{r}(\tau)\right] \end{aligned} ∇θJ(θ)=∫∇θpθ(τ)r(τ)dτ=∫pθ∇θlogpθ(τ)r(τ)dτ=Eτ∼pθ(τ)[∇θlogpθ(τ)r(τ)]

接著我們繼續講上式展開,對於 p θ ( τ ) \mathrm{p}_{\theta}(\tau) pθ(τ) ,即 p θ ( τ ∣ θ ) \mathrm{p}_{\theta}(\tau \mid \theta) pθ(τ∣θ) :

p θ ( τ ∣ θ ) = p ( s 1 ) ∏ t = 1 T π θ ( a t ∣ s t ) p ( s t + 1 ∣ s t , a t ) \mathrm{p}_{\theta}(\tau \mid \theta)=\mathrm{p}\left(\mathrm{s}_{1}\right) \prod_{t=1}^{\mathrm{T}} \pi_{\theta}\left(\mathrm{a}_{t} \mid \mathrm{s}_{\mathrm{t}}\right) \mathrm{p}\left(\mathrm{s}_{t+1} \mid \mathrm{s}_{t}, \mathrm{a}_{\mathrm{t}}\right) pθ(τ∣θ)=p(s1)t=1∏Tπθ(at∣st)p(st+1∣st,at)

取對數後為:

log p θ ( τ ∣ θ ) = log p ( s 1 ) + ∑ t = 1 T log π θ ( a t ∣ s t ) p ( s t + 1 ∣ s t , a t ) \log p_{\theta}(\tau \mid \theta)=\log p\left(s_{1}\right)+\sum_{t=1}^{T} \log \pi_{\theta}\left(a_{t} \mid s_{t}\right) p\left(s_{t+1} \mid s_{t}, a_{t}\right) logpθ(τ∣θ)=logp(s1)+t=1∑Tlogπθ(at∣st)p(st+1∣st,at)

繼續求導:

∇ log p θ ( τ ∣ θ ) = ∑ t = 1 T ∇ θ log π θ ( a t ∣ s t ) \nabla \log p_{\theta}(\tau \mid \theta)=\sum_{t=1}^{T} \nabla_{\theta} \log \pi_{\theta}\left(a_{t} \mid s_{t}\right) ∇logpθ(τ∣θ)=t=1∑T∇θlogπθ(at∣st)

帶入第三個式子,可以將其化簡為:

∇ θ J ( θ ) = E τ ∼ p θ ( τ ) [ ∇ θ log p θ ( τ ) r ( τ ) ] = E τ ∼ p θ [ ( ∇ θ log π θ ( a t ∣ s t ) ) ( ∑ t = 1 T r ( s t , a t ) ) ] = 1 N ∑ i = 1 N [ ( ∑ t = 1 T ∇ θ log π θ ( a i , t ∣ s i , t ) ) ( ∑ t = 1 N r ( s i , t , a i , t ) ) ] \begin{aligned} \nabla_{\theta} \mathrm{J}(\theta) &=\mathrm{E}_{\tau \sim p \theta(\tau)}\left[\nabla_{\theta} \log \mathrm{p}_{\theta}(\tau) \mathrm{r}(\tau)\right] \\ &=\mathrm{E}_{\tau \sim p \theta}\left[\left(\nabla_{\theta} \log \pi_{\theta}\left(\mathrm{a}_{\mathrm{t}} \mid \mathrm{s}_{\mathrm{t}}\right)\right)\left(\sum_{\mathrm{t}=1}^{\mathrm{T}} \mathrm{r}\left(\mathrm{s}_{\mathrm{t}}, \mathrm{a}_{\mathrm{t}}\right)\right)\right] \\ &=\frac{1}{N} \sum_{\mathrm{i}=1}^{\mathrm{N}}\left[\left(\sum_{\mathrm{t}=1}^{\mathrm{T}} \nabla_{\theta} \log \pi_{\theta}\left(\mathrm{a}_{\mathrm{i}, \mathrm{t}} \mid \mathrm{s}_{\mathrm{i}, \mathrm{t}}\right)\right)\left(\sum_{\mathrm{t}=1}^{\mathrm{N}} \mathrm{r}\left(\mathrm{s}_{\mathrm{i}, \mathrm{t}}, \mathrm{a}_{\mathrm{i}, \mathrm{t}}\right)\right)\right] \end{aligned} ∇θJ(θ)=Eτ∼pθ(τ)[∇θlogpθ(τ)r(τ)]=Eτ∼pθ[(∇θlogπθ(at∣st))(t=1∑Tr(st,at))]=N1i=1∑N[(t=1∑T∇θlogπθ(ai,t∣si,t))(t=1∑Nr(si,t,ai,t))]

重要性重采樣

使用另外一種數據分布,來逼近所求分布的一種方法,算是一種期望修正的方法,公式是:

∫ f ( x ) p ( x ) d x = ∫ f ( x ) p ( x ) q ( x ) q ( x ) d x = E x ∼ q [ f ( x ) p ( x ) q ( x ) ] = E x ∼ p [ f ( x ) ] \begin{aligned} \int \mathrm{f}(\mathrm{x}) \mathrm{p}(\mathrm{x}) \mathrm{dx} &=\int \mathrm{f}(\mathrm{x}) \frac{\mathrm{p}(\mathrm{x})}{\mathrm{q}(\mathrm{x})} \mathrm{q}(\mathrm{x}) \mathrm{dx} \\ &=\mathrm{E}_{\mathrm{x} \sim \mathrm{q}}\left[\mathrm{f}(\mathrm{x}) \frac{\mathrm{p}(\mathrm{x})}{\mathrm{q}(\mathrm{x})}\right] \\ &=\mathrm{E}_{\mathrm{x} \sim \mathrm{p}}[\mathrm{f}(\mathrm{x})] \end{aligned} ∫f(x)p(x)dx=∫f(x)q(x)p(x)q(x)dx=Ex∼q[f(x)q(x)p(x)]=Ex∼p[f(x)]

在已知 q q q 的分布後,可以使用上述公式計算出從 p 分布的期望值。也就可以使用 q q q 來對於 p 進行采樣了,即為重要性采樣。

边栏推荐

猜你喜欢

随机推荐

生活随笔:2022烦人的项目

OSPF综合实验

mysql开启定时调度任务执行

[laser principle and application -7]: semiconductor refrigeration sheet and Tec thermostat

Is machine learning difficult to get started? Tell me how I started machine learning quickly!

RIP实验

How can Creo 9.0 quickly modify CAD coordinate system?

excel随笔记录

The difference between Celeron n4000 and Celeron n5095

Best practices of JD cloud Distributed Link Tracking in financial scenarios

PyTorch到底好用在哪里?

OSPF comprehensive experiment

《Animal Farm》笔记

达人评测 酷睿i9 12950hx和i9 12900hx区别哪个强

容器网络原理

fastadmin更改默认表格按钮的弹窗大小

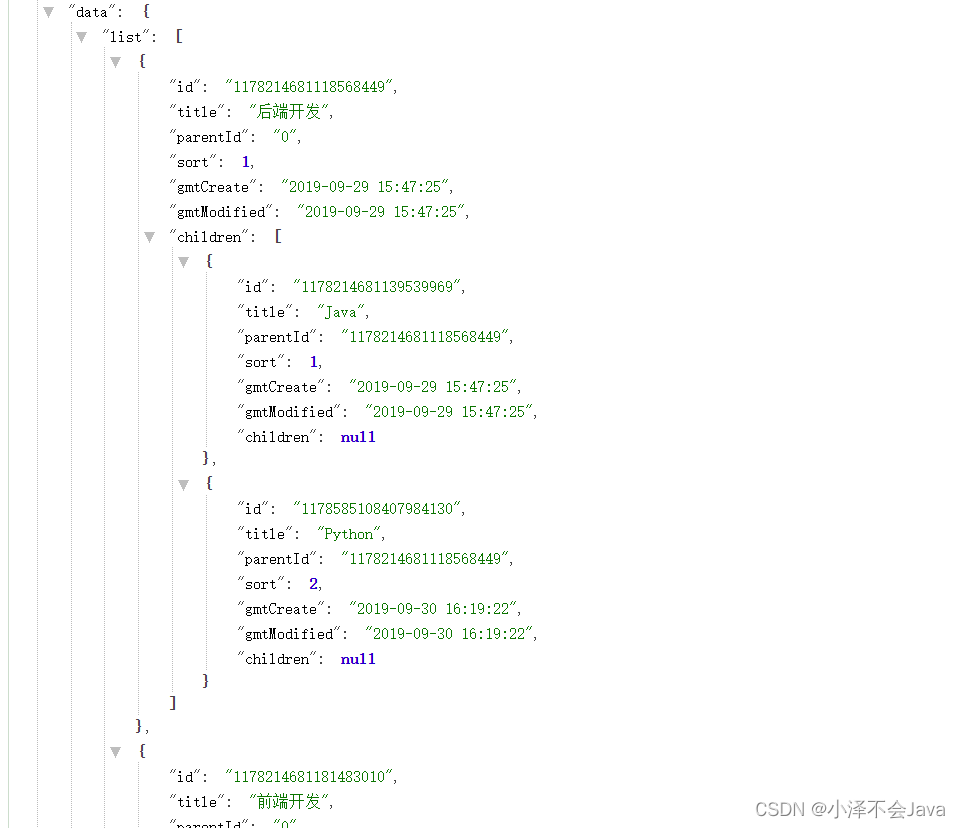

使用Stream流来进行分类展示。

赛扬n5095处理器怎么样 英特尔n5095核显相当于什么水平

Static comprehensive experiment (HCIA)

Comparison of iqoo 10 pro and Xiaomi 12 ultra configurations

![[laser principle and application -7]: semiconductor refrigeration sheet and Tec thermostat](/img/c8/e750ff7c64e05242eac7b53b84dbae.png)