
[Paper Notes] Efficient CNN Architecture Design Guided by Visualization

2022-07-25 07:34:00 【m0_ sixty-one million eight hundred and ninety-nine thousand on】


Preface

Modern efficient convolutional neural networks (CNNs) commonly rely on depthwise separable convolution (DSC) and neural architecture search (NAS) to reduce the number of parameters and the computational complexity, but they overlook some inherent characteristics of the network. Inspired by visualizing feature maps and N×N (N>1) convolution kernels, this paper introduces several guidelines to further improve parameter efficiency and inference speed. The parameter-efficient CNN architecture designed according to these guidelines is called VGNetG. It achieves better accuracy and lower latency than previous networks with roughly 30% to 50% fewer parameters. On ImageNet classification, VGNetG-1.0MP reaches 67.7% top-1 accuracy with 0.99M parameters and 69.2% top-1 accuracy with 1.14M parameters.

In addition, the paper shows that edge detectors can replace learnable depthwise convolution layers for mixing features: the N×N kernels are replaced with fixed edge detection kernels. VGNetF-1.5MP achieves 64.4% (-3.2%) top-1 accuracy, and 66.2% (-1.4%) top-1 accuracy with an additional Gaussian kernel.
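To see why DSC reduces parameters, here is a back-of-the-envelope weight count with bias terms ignored. The 128-channel, 3×3 setting is an arbitrary example and is not taken from the paper. The helper names are ours.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Depthwise k x k conv (one filter per input channel) plus a 1x1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


c_in, c_out, k = 128, 128, 3
std = standard_conv_params(c_in, c_out, k)
dsc = depthwise_separable_params(c_in, c_out, k)
print(std, dsc, round(std / dsc, 2))  # 147456 17536 8.41
```

Most of the remaining DSC weights sit in the pointwise part. This is why the guidelines below also look at removing or sharing pointwise work, not only depthwise work.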

1 Method

The authors mainly study three typical kinds of networks, built from standard convolution, group convolution and depthwise separable convolution respectively:

Standard convolution ==> ResNet-RS

Group convolution ==> RegNet

Depthwise separable convolution ==> MobileNet, ShuffleNetV2 and EfficientNets

The visualizations show that the M×N×N kernels have clearly different patterns and distributions at different stages of the network.

1.1 CNNs can learn to satisfy the sampling theorem

Previous work has generally assumed that CNNs ignore the classical sampling theorem. However, the authors find that CNNs can satisfy the sampling theorem to some extent by learning low-pass filters. This is especially true of DSC-based networks such as MobileNetV1 and EfficientNets, as shown in Figure 2.
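The toy 1-D example below shows why a low-pass filter before downsampling matters. It is not taken from the paper. A [1, 2, 1]/4 binomial filter stands in for a learned, Gaussian-like kernel, and circular padding is an arbitrary choice.

```python
import numpy as np


def downsample(x, stride=2):
    """Plain strided subsampling, no filtering."""
    return x[::stride]


def blur_then_downsample(x, stride=2):
    """Low-pass filter with a small binomial (Gaussian-like) kernel, then subsample."""
    kernel = np.array([1.0, 2.0, 1.0]) / 4.0
    padded = np.pad(x, 1, mode="wrap")  # circular padding keeps the signal periodic
    blurred = np.convolve(padded, kernel, mode="valid")
    return blurred[::stride]


# Highest representable frequency: +1, -1, +1, -1, ...
x = np.array([1.0, -1.0] * 8)
print(downsample(x))            # all 1.0: the oscillation aliases into a constant
print(blur_then_downsample(x))  # all 0.0: the low-pass filter removes it first
```

Without filtering, a signal that should vanish at the lower resolution shows up as a spurious constant. This is the aliasing the sampling theorem warns about.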

1、Standard convolution / group convolution

As shown in Figures 2a and 2b, each set of M×N×N kernels contains one or more salient N×N kernels, for example blur kernels. This suggests that the parameters of these layers are redundant. Note that the salient kernels do not necessarily look like Gaussian kernels.

2、Depthwise separable convolution

The kernels of strided DSCs usually resemble Gaussian kernels. This holds in networks including, but not limited to, MobileNetV1, MobileNetV2, MobileNetV3, ShuffleNetV2, ReXNet and EfficientNets. Moreover, the distribution of the strided-DSC kernels is not a single Gaussian but a Gaussian mixture.

3、Kernels of the last convolution layer

Modern CNNs usually apply a global pooling layer before the classifier to reduce dimensionality. Global pooling is itself a form of downsampling, so a similar phenomenon also appears in the last depthwise convolution layer, as shown in Figure 4.

These visualizations suggest that the downsampling layers and the last layer should use depthwise convolution rather than standard or group convolution. Moreover, fixed Gaussian kernels can be used in the downsampling layers.
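The following is a minimal NumPy sketch of a fixed-Gaussian, stride-2 depthwise convolution. The 3×3 size, sigma = 1.0 and zero padding are our assumptions; these notes do not give the paper's settings. The same fixed kernel is applied to every channel and is never trained.

```python
import numpy as np


def gaussian_kernel(size=3, sigma=1.0):
    """Normalized 2-D Gaussian kernel (entries sum to 1)."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()


def depthwise_conv2d(x, kernel, stride=1):
    """Apply one fixed kernel to every channel of x (C, H, W); zero padding keeps 'same' size at stride 1."""
    c, h, w = x.shape
    n = kernel.shape[0]
    pad = n // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out_h = (h - 1) // stride + 1
    out_w = (w - 1) // stride + 1
    out = np.zeros((c, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = xp[:, i * stride:i * stride + n, j * stride:j * stride + n]
            out[:, i, j] = (patch * kernel).sum(axis=(1, 2))
    return out


x = np.ones((2, 8, 8))  # constant 2-channel input
y = depthwise_conv2d(x, gaussian_kernel(), stride=2)
print(y.shape)  # (2, 4, 4)
```

Because the kernel sums to 1, flat regions pass through unchanged (away from the zero-padded border) while high frequencies are attenuated before subsampling.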

1.2 Reuse feature maps between adjacent layers

Identity kernels and similar feature maps

As shown in the figure above, many depthwise convolution kernels in the middle of the network have large values only at the center, just like an identity kernel. Such a kernel merely passes its input on to the next layer, so convolving with it duplicates feature maps and wastes computation. On the other hand, the figure below shows that many feature maps are similar (repeated) between adjacent layers.
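The check below shows why an identity-like kernel is redundant. A 3×3 kernel with a single 1 at the center makes the convolution return its input unchanged. This is a minimal NumPy sketch, and the helper name `conv2d_same` is ours.

```python
import numpy as np


def conv2d_same(x, kernel):
    """2-D cross-correlation of a single-channel map, zero padding, stride 1."""
    n = kernel.shape[0]
    pad = n // 2
    xp = np.pad(x, pad)
    h, w = x.shape
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = (xp[i:i + n, j:j + n] * kernel).sum()
    return out


identity = np.zeros((3, 3))
identity[1, 1] = 1.0  # large value only at the center

x = np.random.default_rng(0).standard_normal((5, 5))
y = conv2d_same(x, identity)
print(np.allclose(y, x))  # True: the "convolution" just copies its input
```

The nine multiply-adds per pixel produce nothing that a plain identity mapping would not, which motivates replacing such layers outright.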

Therefore, some of the convolutions can be replaced by identity mappings. In addition, depthwise convolutions are slow in early layers because they usually cannot fully utilize modern accelerators, as reported in ShuffleNet V2. Replacing them therefore improves both parameter efficiency and inference time.

1.3 Edge detectors as learnable depthwise convolutions

Edge features carry important information about an image. As shown in the figure below, most kernels resemble edge detection kernels, such as the Sobel and Laplacian filter kernels. The proportion of such kernels decreases in later layers, while the proportion of blur-like kernels increases.

Therefore, edge detectors may be able to replace the depthwise convolutions in DSC-based networks for mixing features across spatial locations. The authors test this by replacing the learnable kernels with edge detection kernels.
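To make "edge detection kernel" concrete, the example below applies the standard 3×3 Sobel (horizontal gradient) and Laplacian kernels to a toy image with a vertical step edge. Both kernels respond only near the edge and output 0 on flat regions. It is purely illustrative; these notes do not specify exactly which fixed kernels VGNetF uses.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
LAPLACIAN = np.array([[0, 1, 0],
                      [1, -4, 1],
                      [0, 1, 0]], dtype=float)


def correlate_valid(x, kernel):
    """2-D cross-correlation without padding ('valid' output size)."""
    n = kernel.shape[0]
    h, w = x.shape
    out = np.empty((h - n + 1, w - n + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = (x[i:i + n, j:j + n] * kernel).sum()
    return out


# 5x6 image: columns 0-2 are 0, columns 3-5 are 1 (a vertical step edge)
img = np.zeros((5, 6))
img[:, 3:] = 1.0
print(correlate_valid(img, SOBEL_X))    # every row: [0, 4, 4, 0]
print(correlate_valid(img, LAPLACIAN))  # every row: [0, 1, -1, 0]
```

Sobel marks where intensity changes (first derivative). The Laplacian gives a sign flip across the edge (second derivative).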

2 Network architecture

2.1 DownsamplingBlock

DownsamplingBlock halves the resolution and expands the number of channels. As shown in Figure (a), pointwise convolution produces only the expanded channels, so the existing features are reused. The depthwise convolution kernels can either be randomly initialized or be fixed Gaussian kernels.
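The notes do not spell out the exact data flow, so the following is one plausible reading and not the paper's implementation. A stride-2 depthwise conv halves H and W; here it uses a fixed 3×3 binomial approximation of a Gaussian. A 1×1 pointwise conv computes only the C_out - C_in extra channels. The depthwise output is then concatenated with those extra channels. Batch normalization and activations are omitted, and `downsampling_block_sketch` is a hypothetical name.

```python
import numpy as np


def downsampling_block_sketch(x, dw_kernel, pw_weight):
    """Hypothetical DownsamplingBlock-style layer (our reading of the notes).

    x: (C_in, H, W); dw_kernel: (N, N) shared by all channels;
    pw_weight: (C_out - C_in, C_in) weights of the 1x1 conv.
    """
    c, h, w = x.shape
    n = dw_kernel.shape[0]
    pad = n // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    oh, ow = (h - 1) // 2 + 1, (w - 1) // 2 + 1
    dw = np.empty((c, oh, ow))
    for i in range(oh):  # stride-2 depthwise conv halves H and W
        for j in range(ow):
            dw[:, i, j] = (xp[:, 2 * i:2 * i + n, 2 * j:2 * j + n] * dw_kernel).sum(axis=(1, 2))
    extra = np.einsum("oc,chw->ohw", pw_weight, dw)  # 1x1 conv = per-pixel matrix product
    return np.concatenate([dw, extra], axis=0)      # reuse dw output, append new channels


rng = np.random.default_rng(0)
c_in, c_out = 16, 32
x = rng.standard_normal((c_in, 8, 8))
gauss = np.outer([1.0, 2.0, 1.0], [1.0, 2.0, 1.0]) / 16.0  # binomial Gaussian approximation
pw = rng.standard_normal((c_out - c_in, c_in))
y = downsampling_block_sketch(x, gauss, pw)
print(y.shape)  # (32, 4, 4)
```

Under this reading the pointwise layer has (C_out - C_in) × C_in = 256 weights here, half of the C_out × C_in = 512 needed if it produced every output channel.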

2.2 HalfIdentityBlock

As shown in Figure (b), half of the depthwise convolutions are replaced by identity mapping, and half of the pointwise convolutions are removed while the block width stays unchanged.

Note that the right half of the input channels becomes the left half of the output channels, for better feature reuse.
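The channel bookkeeping of the HalfIdentityBlock can be sketched as follows. `half_identity_block_sketch` is a hypothetical name. The `branch` argument stands in for the depthwise + pointwise convolutions on C/2 channels and is replaced here by a toy scaling. Which half goes through the branch is our reading of the note above.

```python
import numpy as np


def half_identity_block_sketch(x, branch):
    """Hypothetical HalfIdentityBlock channel layout for x of shape (C, H, W).

    The left half of the channels goes through `branch`; the right half is
    passed through unchanged and placed first, so it becomes the left half
    of the output. The block width (C) is preserved.
    """
    c = x.shape[0]
    assert c % 2 == 0, "channel count must be even"
    left, right = x[: c // 2], x[c // 2:]
    return np.concatenate([right, branch(left)], axis=0)


toy_branch = lambda t: 10 * t  # stand-in for the real conv branch
x = np.arange(8, dtype=float).reshape(8, 1, 1)
y = half_identity_block_sketch(x, toy_branch)
print(y.ravel().tolist())   # [4.0, 5.0, 6.0, 7.0, 0.0, 10.0, 20.0, 30.0]
y2 = half_identity_block_sketch(y, toy_branch)
print(y2.ravel().tolist())  # [0.0, 10.0, 20.0, 30.0, 40.0, 50.0, 60.0, 70.0]
```

In the second output, every original channel has been processed exactly once across the two stacked blocks. Because of the swap, the half skipped by one block is always the half the next block processes.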

2.3 VGNet Architecture

VGNets are built from DownsamplingBlock and HalfIdentityBlock. The full VGNetG-1.0MP architecture is shown in Table 1.

2.4 Variants of VGNet

To further study the impact of N×N kernels, several VGNet variants are introduced: VGNetC, VGNetG and VGNetF.

VGNetC: all parameters are randomly initialized and learnable.

VGNetG: all parameters except the kernels in DownsamplingBlock are randomly initialized and learnable.

VGNetF: all parameters of the depthwise convolutions are fixed.
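The three variants differ only in how the depthwise N×N kernels are initialized and whether they are trained. The sketch below captures that bookkeeping. The function name and string labels are hypothetical. Treating VGNetG's fixed DownsamplingBlock kernels as Gaussian is our inference from the variant name and the DownsamplingBlock description.

```python
def depthwise_kernel_setup(variant, in_downsampling_block):
    """Return (initialization, learnable) for a depthwise kernel (hypothetical labels)."""
    if variant == "VGNetC":
        return ("random", True)            # everything learnable
    if variant == "VGNetG":
        if in_downsampling_block:
            return ("gaussian", False)     # assumed: fixed Gaussian in DownsamplingBlock
        return ("random", True)
    if variant == "VGNetF":
        return ("fixed", False)            # all depthwise kernels fixed (e.g. edge detectors)
    raise ValueError(f"unknown variant: {variant}")


for v in ("VGNetC", "VGNetG", "VGNetF"):
    print(v, depthwise_kernel_setup(v, True), depthwise_kernel_setup(v, False))
```

In a real framework the "learnable = False" case would correspond to freezing those weights (excluding them from the optimizer), while pointwise layers stay trainable in every variant.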

3 Experiments

边栏推荐

- SAP queries open Po (open purchase order)

- What are runtimecompiler and runtimeonly

- 【论文笔记】EFFICIENT CNN ARCHITECTURE DESIGN GUIDED BY VISUALIZATION

- [dynamic programming] - Knapsack model

- Talk about programmers learning English again

- Tips - prevent system problems and file loss

- 华为无线设备STA黑白名单配置命令

- [cloud native] the ribbon is no longer used at the bottom of openfeign, which started in 2020.0.x

- RPC communication principle and project technology selection

- Gather the wisdom of developers and consolidate the foundation of the database industry

猜你喜欢

Robot Framework移动端自动化测试----01环境安装

QT学习日记20——飞机大战项目

【Unity入门计划】基本概念-2D碰撞体Collider 2D

Completely replace the redis+ database architecture, and JD 618 is stable!

线代(矩阵‘)

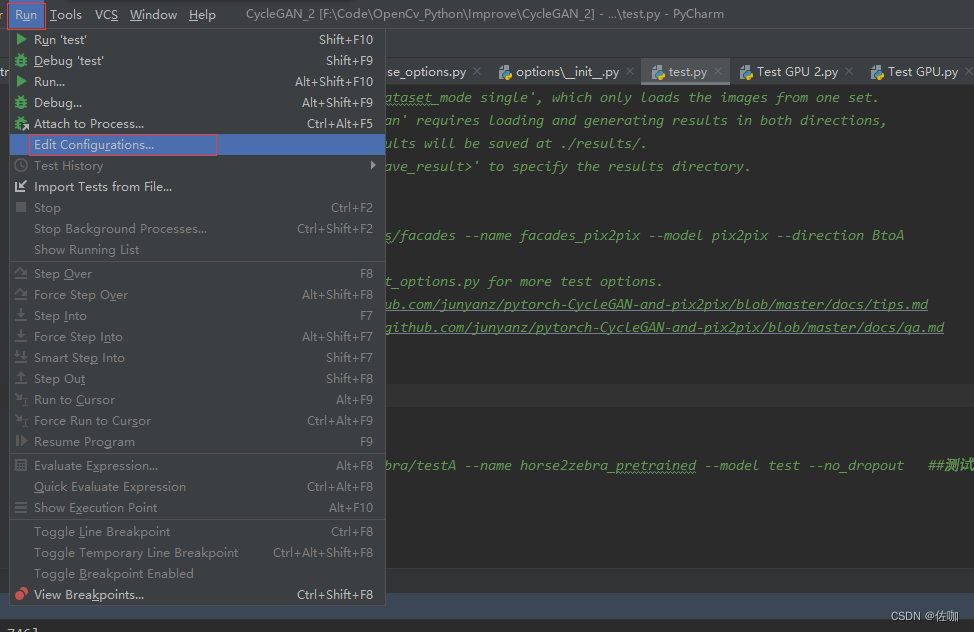

Problems in deep learning training and testing: error: the following arguments are required: --dataroot, solution: the configuration method of training files and test files

Line generation (matrix ')

![[dynamic programming] - Knapsack model](/img/0d/c467e70457495f130ec217660cbea7.png)

[dynamic programming] - Knapsack model

Cluster chat server: summary of project problems

BOM概述

随机推荐

A fast method of data set enhancement for deep learning

Room database migration

[ES6] function parameters, symbol data types, iterators and generators

北京内推 | 微软STCA招聘NLP/IR/DL方向研究型实习生(可远程)

About --skip networking in gbase 8A

论文阅读:UNET 3+: A FULL-SCALE CONNECTED UNET FOR MEDICAL IMAGE SEGMENTATION

Learn no when playing 10. Is enterprise knowledge management too boring? Use it to solve!

Oracle19采用自动内存管理,AWR报告显示SGA、PGA设置的过小了?

9大最佳工程施工项目管理系统

Summary of differences between data submission type request payload and form data

Incremental crawler in distributed crawler

【Unity入门计划】基本概念-预制件 Prefab

Robot framework mobile terminal Automation Test ----- 01 environment installation

轮询、中断、DMA和通道

Growth path - InfoQ video experience notes [easy to understand]

How to do a good job in safety development?

Nailing the latest version, how to clear the login phone number history data

Problems during nanodet training: modulenotfounderror: no module named 'nanodet' solution

Paper reading: UNET 3+: a full-scale connected UNET for medical image segmentation

What are runtimecompiler and runtimeonly