
ACL 2022 | MVR: Multi-View Document Representation for Open-Domain Retrieval

2022-06-23 21:53:00 (Zhiyuan Community)

Today I'm sharing MVR, an ACL 2022 paper on multi-view document representation for open-domain retrieval. It mainly addresses the semantic mismatch that arises when a single document vector has to match many different questions. MVR builds multi-view document representations by inserting multiple special tokens, and to keep the vectors of different views from converging, it introduces a global-local loss with an annealed temperature. The full title of the paper is "Multi-View Document Representation Learning for Open-Domain Dense Retrieval".

Paper: https://aclanthology.org/2022.acl-long.414.pdf

This paper shares the same idea as DCSR (sentence-aware contrastive learning for open-domain passage retrieval), which I shared two days ago: neither adds extra computation to retrieval and ranking, and both insert special tokens to build multiple semantic vector representations of a long document, so that one document can be close to the vector representations of many different questions. Current retrieval (recall) models each have shortcomings:

- Cross-encoder models (e.g., BERT): too computationally expensive to be used at the recall stage;

- Bi-encoder models (e.g., DPR): cannot represent the multiple topics in a long document well;

- Late-interaction models (e.g., ColBERT): because the score is built with a sum operation, they cannot be used directly with ANN search;

- Attention-based aggregator models (e.g., PolyEncoder): add extra computation and cannot be used directly with ANN search.
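To make the ANN point concrete, here is a minimal NumPy sketch of the two scoring styles, assuming precomputed embeddings; the function names are hypothetical and this is not DPR's or ColBERT's actual code. A bi-encoder score is one inner product, which is exactly what an ANN index ranks. A late-interaction score takes the best document token for each question token and then sums over question tokens, so it cannot be reduced to a single inner product between one question vector and one stored document vector.

```python
import numpy as np


def bi_encoder_score(q_vec: np.ndarray, d_vec: np.ndarray) -> float:
    """DPR-style score: a single inner product between two vectors."""
    return float(q_vec @ d_vec)


def late_interaction_score(q_toks: np.ndarray, d_toks: np.ndarray) -> float:
    """ColBERT-style score over token embeddings.

    q_toks: (num_question_tokens, dim), d_toks: (num_document_tokens, dim).
    """
    sim = q_toks @ d_toks.T               # token-by-token similarity matrix
    best_per_q_token = sim.max(axis=1)    # best document token for each question token
    return float(best_per_q_token.sum())  # summed over question tokens


q_toks = np.array([[1.0, 0.0], [0.0, 1.0]])
d_toks = np.array([[1.0, 0.0], [0.0, 2.0], [0.5, 0.5]])
print(late_interaction_score(q_toks, d_toks))  # 3.0
```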

Model

In a typical dense retriever, the output vector of the special token [CLS] is used as the representation of the text. To capture finer-grained semantics in a document, MVR replaces [CLS] with multiple special [VIE] tokens.

- For documents, multiple [VIE] tokens are inserted before the text. To avoid disturbing the position information of the original text, all [VIE] tokens are assigned position 0, and the positions of the document tokens start from 1.

- For questions, which are short and usually express a single meaning, only one [VIE] token is used.
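The input layout described above can be sketched in plain Python on already-tokenized text. The token names `[VIE1]`, `[VIE2]`, and so on are hypothetical placeholders; in practice they would be mapped to distinct vocabulary entries. They are kept distinct here because, with identical tokens at an identical position, a Transformer would compute identical outputs for all of them and the views could never differ.

```python
from typing import List, Tuple


def build_document_input(doc_tokens: List[str], num_views: int) -> Tuple[List[str], List[int]]:
    """Prepend num_views view tokens; they all get position 0, the text starts at 1."""
    # Hypothetical token names; each view needs its own distinct token.
    view_tokens = [f"[VIE{i}]" for i in range(1, num_views + 1)]
    tokens = view_tokens + doc_tokens + ["[SEP]"]
    # View tokens share position 0, so the original text keeps positions 1, 2, 3, ...
    position_ids = [0] * num_views + list(range(1, len(doc_tokens) + 2))
    return tokens, position_ids


def build_question_input(q_tokens: List[str]) -> Tuple[List[str], List[int]]:
    """Questions are short, so a single [VIE] token is enough."""
    tokens = ["[VIE]"] + q_tokens + ["[SEP]"]
    position_ids = list(range(len(tokens)))
    return tokens, position_ids


print(build_document_input(["paris", "is", "the", "capital"], num_views=3))
# (['[VIE1]', '[VIE2]', '[VIE3]', 'paris', 'is', 'the', 'capital', '[SEP]'], [0, 0, 0, 1, 2, 3, 4, 5])
```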

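The model uses a dual encoder as its backbone and encodes questions and documents separately:

$$E(q) = E_Q([\mathrm{VIE}] \circ q \circ [\mathrm{SEP}])$$

$$E(d) = E_D([\mathrm{VIE}_1] \cdots [\mathrm{VIE}_n] \circ d \circ [\mathrm{SEP}])$$

where $\circ$ denotes concatenation, [VIE] and [SEP] are special tokens of the BERT model, and $E_Q$ and $E_D$ are the question encoder and the document encoder, respectively. The outputs at the $n$ [VIE] positions of the document form its view vectors $E_1(d), \dots, E_n(d)$.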

As shown in the figure above, MVR first computes the dot product between the question vector and the document vector of each view, giving one score per view, and then applies max-pooling to take the largest view score as the question-document score:

$$f_i(q, d) = E(q) \cdot E_i(d), \qquad f(q, d) = \max_{i} f_i(q, d)$$

where $E_i(d)$ is the vector of the $i$-th view of document $d$.
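The max-pooling score, and why it stays ANN-friendly, can be sketched in NumPy. Assumptions: view vectors are precomputed and stacked into an array of shape (num_docs, num_views, dim), the function names are hypothetical, and a brute-force inner-product search stands in for a real ANN library. Because taking the maximum of the per-view dot products is itself an inner-product search, indexing every view vector separately and mapping the best hit back to its document gives the same top-1 document as scoring every document exhaustively.

```python
from typing import Tuple

import numpy as np


def mvr_score(q_vec: np.ndarray, view_vecs: np.ndarray) -> Tuple[float, int]:
    """Score one document: dot product with each view, then max-pool over views.

    view_vecs: (num_views, dim). Returns (score, index of the selected view).
    """
    per_view = view_vecs @ q_vec  # f_i(q, d) for every view i
    return float(per_view.max()), int(per_view.argmax())


def top1_via_flat_index(q_vec: np.ndarray, docs_views: np.ndarray) -> int:
    """Put every view vector in one flat index and map the best hit to its document."""
    num_docs, num_views, dim = docs_views.shape
    flat_index = docs_views.reshape(num_docs * num_views, dim)
    best_row = int((flat_index @ q_vec).argmax())  # brute force in place of ANN search
    return best_row // num_views  # rows are stored document by document


rng = np.random.default_rng(0)
docs_views = rng.normal(size=(100, 8, 16))  # 100 docs, 8 views each, dim 16
q_vec = rng.normal(size=16)

exhaustive = int(np.argmax([mvr_score(q_vec, v)[0] for v in docs_views]))
assert exhaustive == top1_via_flat_index(q_vec, docs_views)
```

For top-k retrieval, one would typically fetch more than k view hits from the index and deduplicate them by document id.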

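To prevent the vectors of different views from converging, MVR introduces a Global-Local Loss with an annealed temperature, made up of a global contrastive loss and a local uniformity loss:

$$\mathcal{L} = \mathcal{L}_{\text{global}} + \lambda \mathcal{L}_{\text{local}}$$

where $\lambda$ is a hyperparameter weighting the local term.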

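Here, the global contrastive loss is the standard contrastive loss. Given a question $q$, a positive document $d^+$ and $m$ negative documents $d_1^-, \dots, d_m^-$, it is:

$$\mathcal{L}_{\text{global}} = -\log \frac{e^{f(q, d^+)/\tau}}{e^{f(q, d^+)/\tau} + \sum_{j=1}^{m} e^{f(q, d_j^-)/\tau}}$$

where $f(q, d)$ is the max-pooled question-document score and $\tau$ is the temperature.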
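A minimal NumPy sketch of the global contrastive loss for a single question, assuming the max-pooled scores for the positive and the negatives have already been computed; the function name is hypothetical. It is written as a numerically stable log-softmax, i.e. cross-entropy with the positive document at index 0. In real training this would be a batched op in an autodiff framework.

```python
import numpy as np


def global_contrastive_loss(pos_score: float, neg_scores, tau: float) -> float:
    """-log softmax of the positive document among positive + negatives."""
    logits = np.concatenate(([pos_score], np.asarray(neg_scores, dtype=float))) / tau
    m = logits.max()  # subtract the max before exp for numerical stability
    log_sum_exp = m + np.log(np.exp(logits - m).sum())
    return float(log_sum_exp - logits[0])


# One positive and two negatives, temperature 1.0
print(global_contrastive_loss(2.0, [0.5, -1.0], tau=1.0))
```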

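To make the multi-view vectors more uniform, the paper proposes a local uniformity loss, which pulls the question vector toward the selected view vector and pushes it away from the document's other view vectors:

$$\mathcal{L}_{\text{local}} = -\log \frac{e^{f_k(q, d^+)/\tau}}{\sum_{i=1}^{n} e^{f_i(q, d^+)/\tau}}$$

where $n$ is the number of views, $f_i(q, d^+)$ is the score of the $i$-th view of the positive document, and $k$ is the view selected by max-pooling.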
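The local uniformity loss is a softmax over the positive document's own views, with the max-pooled view as the label. A minimal NumPy sketch, assuming precomputed vectors and a hypothetical function name; the usage lines show that fully collapsed views give the largest value, log(n), while views spread apart give a smaller one.

```python
import numpy as np


def local_uniformity_loss(q_vec: np.ndarray, pos_view_vecs: np.ndarray, tau: float) -> float:
    """Softmax over the positive document's views; the target is the max-pooled view.

    pos_view_vecs: (num_views, dim) view vectors of the positive document.
    """
    logits = pos_view_vecs @ q_vec / tau  # f_i(q, d+) / tau for each view
    k = int(logits.argmax())  # the view chosen by max-pooling acts as the label
    m = logits.max()
    log_sum_exp = m + np.log(np.exp(logits - m).sum())
    return float(log_sum_exp - logits[k])


q = np.array([1.0, 0.0])
collapsed = np.array([[1.0, 0.0]] * 3)  # all three views identical
spread = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
print(local_uniformity_loss(q, collapsed, 1.0))  # log(3), about 1.0986
print(local_uniformity_loss(q, spread, 1.0))
```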

To further separate the view vectors, an annealed temperature is used that gradually sharpens the softmax distribution over the different views:

$$\tau = \max\{0.3, \exp(-\alpha t)\}$$

where $\alpha$ is a hyperparameter controlling the annealing speed and $t$ is the number of training epochs; the temperature is updated once per epoch. Note that the annealed temperature is used in both the global contrastive loss and the local uniformity loss.
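The schedule can be sketched in a few lines, assuming the form tau = max{0.3, exp(-alpha * t)}; the 0.3 floor should be checked against the paper, and alpha = 0.5 below is purely illustrative. Since tau starts at 1 and shrinks, dividing scores by tau makes both softmax distributions progressively sharper; the same tau would be passed to both losses for the whole epoch.

```python
import math


def annealed_temperature(epoch: int, alpha: float, floor: float = 0.3) -> float:
    """tau = max{floor, exp(-alpha * epoch)}, recomputed once per training epoch."""
    return max(floor, math.exp(-alpha * epoch))


# alpha = 0.5 is an arbitrary illustrative value, not a setting from the paper
print([round(annealed_temperature(t, alpha=0.5), 4) for t in range(4)])
# [1.0, 0.6065, 0.3679, 0.3]
```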

边栏推荐

- How to deal with the situation of repeated streaming and chaotic live broadcast in easydss?

- Cloud native practice of meituan cluster scheduling system

- Take you to understand the working process of the browser

- A batch layout WAF script for extraordinary dishes

- How does the hybrid cloud realize the IP sec VPN cloud networking dedicated line to realize the interworking between the active and standby intranet?

- [JS 100 examples of reverse] anti climbing practice platform for net Luozhe question 5: console anti debugging

- Analysis of visual analysis technology

- Wechat is new. You can create applications from Excel

- Embedded development: embedded foundation -- the difference between restart and reset

- CAD图在线Web测量工具代码实现(测量距离、面积、角度等)

猜你喜欢

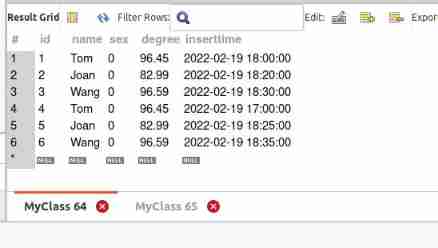

MySQL de duplication query only keeps one latest record

CAD图在线Web测量工具代码实现(测量距离、面积、角度等)

How to use the serial port assistant in STC ISP?

Uncover the secrets of Huawei cloud enterprise redis issue 16: acid'true' transactions beyond open source redis

Experiment 5 module, package and Library

大一女生废话编程爆火!懂不懂编程的看完都拴Q了

Teacher lihongyi from National Taiwan University - grade Descent 2

蓝牙芯片|瑞萨和TI推出新蓝牙芯片,试试伦茨科技ST17H65蓝牙BLE5.2芯片

Polar cycle graph and polar fan graph of high order histogram

Embedded development: embedded foundation -- the difference between restart and reset

随机推荐

BenchCLAMP:评估语义分析语言模型的基准

Minimize outlook startup + shutdown

微信小程序中发送网络请求

CAD图在线Web测量工具代码实现(测量距离、面积、角度等)

Error running PyUIC: Cannot start process, the working directory ‘-m PyQt5. uic. pyuic register. ui -o

How to make a label for an electric fan

Supplementary usage of upload component in fusiondesign 1

How API gateway finds the role of microserver gateway in microservices

[同源策略 - 跨域问题]

Simple code and design concept of "back to top"

《scikit-learn机器学习实战》简介

Selenium批量查询运动员技术等级

How to batch generate UPC-A codes

Tencent cloud database tdsql elite challenge Q & A (real-time update)

Analysis of a series a e-commerce app docommandnative

The 11th Blue Bridge Cup

SAP retail wrmo replenishment monitoring

Installation and use of Minio

HR SaaS is finally on the rise

SAP Migo mobile type 311 attempts to determine the batch, and the system reports an error -batch determination not Po