
CVPR 2022 | PointDistiller: Structured Knowledge Distillation Towards Efficient and Compact 3D Detection

2022-06-27 01:55:00 [Zhiyuan Community]

0. Introduction

Knowledge distillation is a technique that transfers knowledge from a cumbersome model into a smaller one so that the smaller model can be deployed in practical applications. As 3D object detection becomes increasingly important in autonomous driving and virtual reality, model compression techniques such as knowledge distillation have also proved effective in this area.

This article covers the CVPR 2022 paper "PointDistiller: Structured Knowledge Distillation Towards Efficient and Compact 3D Detection", which presents a structured knowledge distillation framework that helps compact student detectors achieve better 3D object detection. Notably, the code is open source.

1. Paper information

Title: PointDistiller: Structured Knowledge Distillation Towards Efficient and Compact 3D Detection

Authors: Linfeng Zhang, Runpei Dong, Hung-Shuo Tai, Kaisheng Ma

Source: CVPR 2022 (Computer Vision and Pattern Recognition)

Paper: https://arxiv.org/abs/2205.11098

Code: https://github.com/RunpeiDong/PointDistiller

2. Abstract

Remarkable breakthroughs in point cloud representation learning have driven its use in real-world applications such as autonomous vehicles and virtual reality. However, these applications often require 3D object detection that is not only accurate but also efficient.

Recently, knowledge distillation has been proposed as an effective model compression technique: it transfers knowledge from an over-parameterized teacher to a lightweight student and has proved consistently effective in 2D vision.

However, because point clouds are sparse and irregular, directly applying previous image-based knowledge distillation methods to point cloud detectors usually leads to poor performance. To fill this gap, this paper proposes PointDistiller, a structured knowledge distillation framework for point-cloud-based 3D detection.

Specifically, PointDistiller includes local distillation, which extracts and distills the local geometric structure of point clouds with dynamic graph convolution, and a reweighted learning strategy, which highlights student learning on the crucial points or voxels to improve the efficiency of knowledge distillation.

Extensive experiments on both voxel-based and raw-point-based detectors demonstrate the effectiveness of the method compared with seven previous knowledge distillation methods. For example, the 4× compressed PointPillars student achieves 2.8 and 3.4 mAP improvements on BEV and 3D object detection, outperforming its teacher by 0.9 and 1.8 mAP, respectively.

3. Algorithm analysis

Figure 1 shows PointDistiller, the structured knowledge distillation framework proposed by the authors. The framework uses local distillation to distill the teacher's knowledge of the local geometric structure of point clouds, and a reweighted learning strategy that handles the sparsity of point clouds by highlighting student learning on relatively more important voxels. Specifically, the method first samples the N relatively crucial voxels or points from the whole point cloud, then extracts their local geometric structure with dynamic graph convolution, and finally distills them with reweighting.

Figure 1: Overview of the PointDistiller framework

The authors' main contributions are summarized as follows:

(1) Local distillation, which first encodes the local geometry of the point cloud with dynamic graph convolution and then distills it from teacher to student.

(2) A reweighted learning strategy to handle the sparsity and noise in point clouds. It assigns higher learning weights to the more important voxels during knowledge distillation, so that student learning concentrates on them.

(3) Extensive experiments on both voxel-based and raw-point-based detectors, demonstrating that the proposed method outperforms seven previous knowledge distillation methods.

3.1 Local distillation

The authors propose local distillation. Instead of directly distilling backbone features from the teacher detector to the student detector, local distillation first clusters neighboring voxels or points with KNN, then encodes the semantic information in the local geometric structure with dynamic graph convolution, and finally distills it from the teacher to the student. As a result, the student detector can inherit the teacher's ability to understand the local geometric information of the point cloud and achieve better detection performance.
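The local distillation step can be sketched in pure Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes teacher and student features have already been projected to the same dimension, uses an EdgeConv-style layer (as in DGCNN) as the dynamic graph convolution, builds the KNN graph in feature space, and shares one fixed weight matrix between teacher and student for simplicity. The names `knn_indices`, `edge_conv`, and `local_distillation_loss` are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def knn_indices(feats: Sequence[Vector], k: int) -> List[List[int]]:
    """For each point, return indices of its k nearest neighbours in feature space (self excluded).

    Assumes 1 <= k < len(feats).
    """
    neighbours = []
    for i, fi in enumerate(feats):
        dists = sorted(
            (sum((a - b) ** 2 for a, b in zip(fi, fj)), j)
            for j, fj in enumerate(feats)
            if j != i
        )
        neighbours.append([j for _, j in dists[:k]])
    return neighbours


def edge_conv(feats: Sequence[Vector], k: int, weight: Sequence[Vector]) -> List[Vector]:
    """EdgeConv-style layer: h_i = max over neighbours j of ReLU(W [x_i ; x_j - x_i])."""
    out = []
    for i, nbrs in enumerate(knn_indices(feats, k)):
        xi = feats[i]
        pooled = None
        for j in nbrs:
            edge = list(xi) + [b - a for a, b in zip(xi, feats[j])]  # [x_i ; x_j - x_i]
            h = [max(0.0, sum(w * e for w, e in zip(row, edge))) for row in weight]  # ReLU(W edge)
            pooled = h if pooled is None else [max(p, q) for p, q in zip(pooled, h)]  # max over edges
        out.append(pooled)
    return out


def local_distillation_loss(teacher: Sequence[Vector], student: Sequence[Vector],
                            k: int, weight: Sequence[Vector]) -> float:
    """Mean squared error between graph-encoded teacher and student features."""
    t = edge_conv(teacher, k, weight)
    s = edge_conv(student, k, weight)
    n = len(t) * len(t[0])
    return sum((a - b) ** 2 for ti, si in zip(t, s) for a, b in zip(ti, si)) / n
```

Here `k` presumably plays the role of the hyperparameter K from the sensitivity study. Matching graph-encoded features, rather than raw per-point features, is what lets the student imitate how the teacher relates each point to its neighbours; a real detector would run this on batched tensors rather than Python lists.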

3.2 Reweighted learning strategy

A common way to process point clouds is to convert them into voxels and then encode them as regular data. However, because of the sparsity and noise in point clouds, most voxels contain only a single point, which may well be a noise point. Therefore, compared with voxels containing multiple points, the features of these single-point voxels are relatively less important for knowledge distillation. Motivated by this observation, the authors propose a reweighted learning strategy that highlights student learning on voxels with multiple points by assigning them larger learning weights. Moreover, the same idea extends easily to raw-point-based detectors, where it highlights distillation on the points that have a greater influence on the teacher detector's predictions.
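A reweighted distillation loss along these lines can be sketched as follows. It is an illustration under stated assumptions: each voxel's point count serves as a stand-in importance score (following the intuition above; the paper's own scoring may differ), only the `top_n` highest-scoring voxels are kept (analogous to N), their scores are normalised into weights, and the loss is a weighted MSE between teacher and student voxel features. `voxelize` and `reweighted_distill_loss` are hypothetical helpers.

```python
from typing import Dict, List, Sequence, Tuple

Voxel = Tuple[int, int, int]


def voxelize(points: Sequence[Tuple[float, float, float]], voxel_size: float) -> Dict[Voxel, int]:
    """Map each 3D point to an integer voxel index and count the points per voxel."""
    counts: Dict[Voxel, int] = {}
    for x, y, z in points:
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        counts[key] = counts.get(key, 0) + 1
    return counts


def reweighted_distill_loss(teacher: Dict[Voxel, List[float]], student: Dict[Voxel, List[float]],
                            importance: Dict[Voxel, float], top_n: int) -> float:
    """Weighted MSE over the top_n most important voxels; weights are normalised importance scores."""
    selected = sorted(importance, key=importance.get, reverse=True)[:top_n]
    total = sum(importance[v] for v in selected)
    loss = 0.0
    for v in selected:
        t, s = teacher[v], student[v]
        mse = sum((a - b) ** 2 for a, b in zip(t, s)) / len(t)
        loss += (importance[v] / total) * mse  # voxels with more points count more
    return loss
```

Under this proxy, single-point voxels (the ones most likely to be noise) receive the smallest weights, or are dropped entirely when `top_n` is small.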

4. Experiments

4.1 Comparative experiments

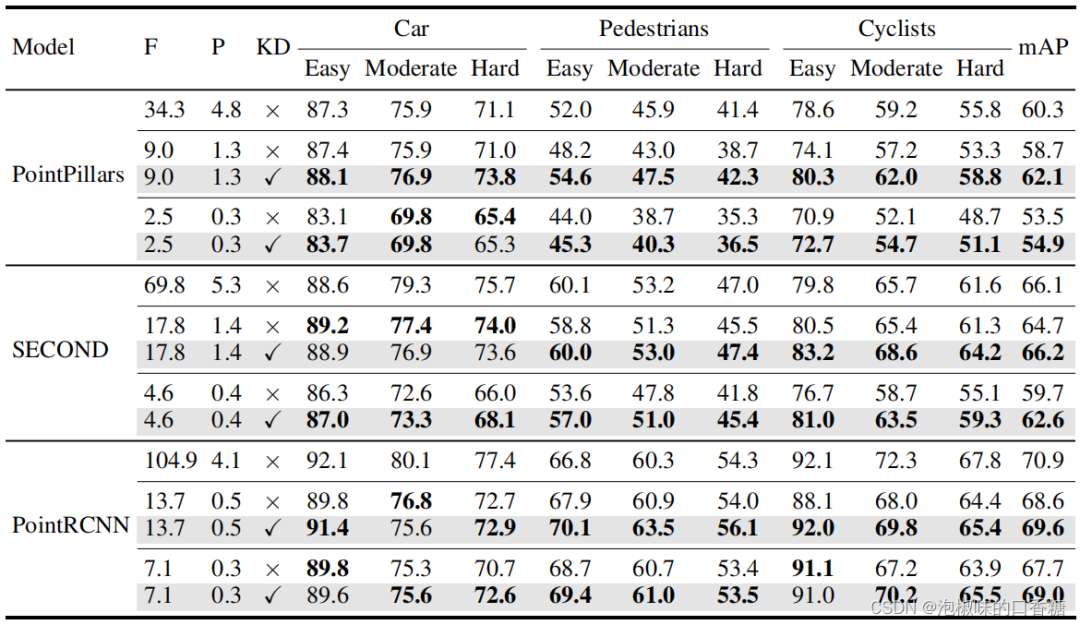

Tables 1 and 2 show BEV and 3D detection performance with and without PointDistiller. They demonstrate that the proposed method successfully transfers knowledge from the teacher detector to the student detector and yields significant and consistent performance improvements. The observations are as follows:

(1) For both BEV and 3D detection, PointDistiller significantly improves mean average precision for all detectors at all compression ratios. On average, voxel-based and raw-point-based detectors gain 2.4 and 1.0 mAP, respectively, while BEV and 3D detection each gain 1.9 mAP.

(2) In BEV detection, the 4× compressed and accelerated PointPillars and SECOND students trained with PointDistiller outperform their teachers by 0.9 and 0.9 mAP, respectively. In 3D detection, they outperform their teachers by 1.8 and 0.1 mAP, respectively.

(3) Consistent average precision improvements are observed across all difficulty levels.

(4) Consistent average precision improvements are observed across all object categories.

(5) On PointRCNN, PointDistiller yields average improvements of 1.3 and 1.2 mAP on BEV and 3D detection, respectively, showing that it also applies to raw-point-based detectors.

Table 1: BEV object detection results with PointDistiller

Table 2: 3D object detection results with PointDistiller

Table 3 compares PointDistiller with previous knowledge distillation methods. The observations are as follows:

(1) PointDistiller clearly outperforms previous methods. In BEV and 3D detection, it surpasses the second-best knowledge distillation method by 1.5 and 1.9 mAP, respectively.

(2) PointDistiller achieves the best performance in all categories at all difficulty levels.

(3) PointDistiller is the only knowledge distillation method that enables the student detector to outperform its teacher.

Table 3: Comparison of PointDistiller with other distillation methods

Table 4 shows the results of 2× and 4× compressed PointPillars on the nuScenes dataset. The proposed method improves mAP and NDS by 0.65 and 0.5 on average, respectively, verifying its effectiveness on large-scale datasets.

Table 4: Experimental results on the nuScenes dataset
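For readers unfamiliar with NDS: the nuScenes detection score, as defined by the nuScenes benchmark, combines mAP with five true-positive error metrics (translation, scale, orientation, velocity, and attribute errors), each clipped at 1. A small sketch of that formula follows; the function name is hypothetical, and mAP and errors are taken on a 0 to 1 scale.

```python
from typing import Dict

TP_METRICS = ("mATE", "mASE", "mAOE", "mAVE", "mAAE")


def nuscenes_detection_score(mean_ap: float, tp_errors: Dict[str, float]) -> float:
    """NDS = (5 * mAP + sum over the five TP metrics of (1 - min(1, error))) / 10."""
    missing = set(TP_METRICS) - set(tp_errors)
    if missing:
        raise ValueError(f"missing TP metrics: {sorted(missing)}")
    tp_score = sum(1.0 - min(1.0, tp_errors[m]) for m in TP_METRICS)
    return (5.0 * mean_ap + tp_score) / 10.0
```

Because mAP carries only half of the total weight, NDS also reflects localization and attribute quality, which is why mAP and NDS gains need not move together.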

4.2 Ablation experiments

The ablation study of PointDistiller covers its two components: the reweighted learning strategy (RL) and local distillation (LD). Table 5 shows the ablation results for the 4× compressed PointPillars student on KITTI:

(1) Distilling backbone features with the reweighted learning strategy alone yields 2.0 and 1.9 mAP improvements on BEV and 3D detection, respectively.

(2) Without reweighting, local distillation alone yields 2.3 and 2.5 mAP improvements on BEV and 3D detection, respectively.

(3) Combining the two modules yields further improvements of 0.5 and 0.9 mAP on BEV and 3D detection, respectively. These results indicate that each module of PointDistiller is effective on its own and that the two are complementary. They also suggest that the proposed local distillation and reweighted learning could be combined with other knowledge distillation methods to achieve better performance.

Table 5: Ablation study of the 4× compressed PointPillars student
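If the further gains in item (3) are read as measured relative to local distillation alone (an interpretation; the text does not state the reference point), the ablation numbers add up exactly to the headline improvements quoted in the abstract for the same 4× compressed PointPillars student:

```latex
\begin{aligned}
\Delta_{\text{BEV}} &= \underbrace{2.3}_{\text{LD}} + \underbrace{0.5}_{\text{+RL}} = 2.8\ \text{mAP},\\
\Delta_{\text{3D}}  &= \underbrace{2.5}_{\text{LD}} + \underbrace{0.9}_{\text{+RL}} = 3.4\ \text{mAP}.
\end{aligned}
```

Both totals match the 2.8 and 3.4 mAP gains reported in the abstract, which supports this reading.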

The authors also conduct a sensitivity study on PointDistiller's two hyperparameters, K and N, which denote the number of nodes in each local graph used for local distillation (the K in KNN) and the number of voxels (points) to be distilled, respectively. Figure 2 shows the results: PointDistiller consistently outperforms the baseline by a large margin across different hyperparameter values, indicating that the method is insensitive to these hyperparameters.

Figure 2: Hyperparameter sensitivity of the 4× compressed PointPillars student on KITTI

4.3 Visual analysis

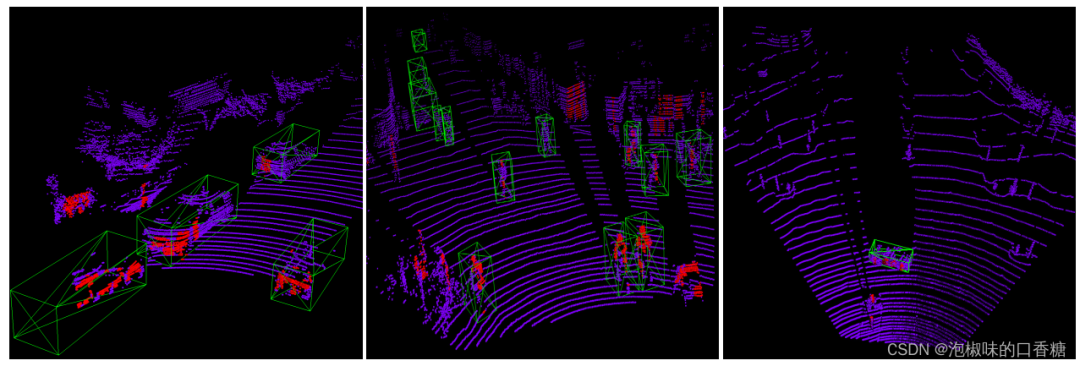

In the reweighted learning strategy, the importance score of each voxel or point determines whether it should be distilled. Figure 3 visualizes PointDistiller's importance scores. The results show that the scores successfully localize foreground and background objects.

Figure 3: Visualization of PointDistiller's importance scores

As shown in Figure 4, student models trained without knowledge distillation tend to produce more false positive (FP) predictions. In contrast, this excessive-FP problem is alleviated in students trained with PointDistiller.

Figure 4: Qualitative comparison of detection results from students trained with and without knowledge distillation

5. Conclusion

In the CVPR 2022 paper "PointDistiller: Structured Knowledge Distillation Towards Efficient and Compact 3D Detection", the authors propose PointDistiller, a structured knowledge distillation framework for point-cloud-based 3D object detection. It consists of local distillation and reweighted learning: the former encodes the semantic information of local geometric structures in the point cloud and distills it to the student, while the latter handles the sparsity and noise in point clouds by assigning different learning weights to different points and voxels. Extensive experiments demonstrate the effectiveness of PointDistiller. This work is pioneering in exploring knowledge distillation for point-cloud-based 3D object detection and offers constructive guidance for future research.

边栏推荐

猜你喜欢

消费者追捧iPhone,在于它的性价比超越国产手机

C language -- Design of employee information management system

svg拖拽装扮Kitty猫

Parameter estimation -- Chapter 7 study report of probability theory and mathematical statistics (point estimation)

1.44 inch TFT-LCD display screen mold taking tutorial

二叉树oj题目

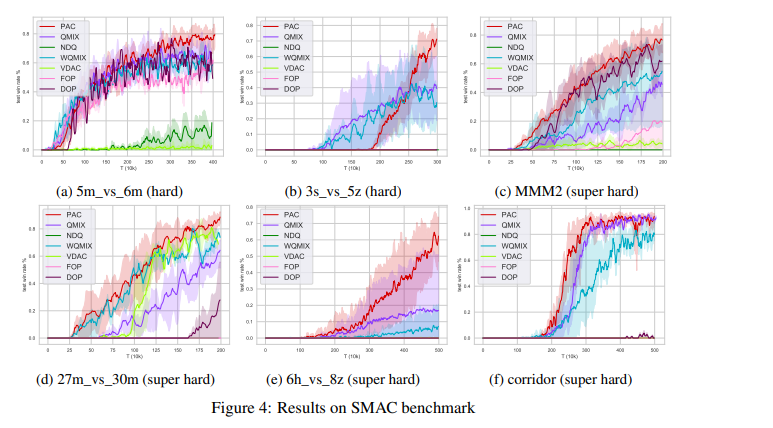

George Washington University: Hanhan Zhou | PAC: auxiliary value factor decomposition with counterfactual prediction in Multi-Agent Reinforcement Learning

在连接数据库的时候遇到了点问题,请问怎么解决呀?

浏览器缓存

Bs-gx-016 implementation of textbook management system based on SSM

随机推荐

SystemVerilog simulation speed increase

Oracle/PLSQL: To_ Clob Function

perl语言中 fork()、exec()、waitpid() 、 $? >> 8 组合

按键控制LED状态翻转

C语言--职工信息管理系统设计

Oracle/PLSQL: HexToRaw Function

The listing of Fuyuan pharmaceutical is imminent: the net amount raised will reach 1.6 billion yuan, and hubaifan is the actual controller

Daily question brushing record (V)

Continuous delivery blue ocean application

ThreadLocal详解

Parameter estimation -- Chapter 7 study report of probability theory and mathematical statistics (point estimation)

两个页面之间传参方法

NOKOV动作捕捉系统使多场协同无人机自主建造成为可能

h5液体动画js特效代码

Press key to control LED status reversal

dat.gui.js星星圆圈轨迹动画js特效

I earned 3W yuan a month from my sideline: the industry you despise really makes money!

lottie.js创意开关按钮动物头像

Oracle/PLSQL: Lower Function

Hibernate generates SQL based on Dialect