
Machine Learning: Deep Learning with Convolutional Neural Networks, Implementing Handwritten Digit Recognition with a CNN

2022-06-28 15:40:00 [Huawei Cloud]

Implementing handwritten digit recognition with a CNN

First, download the MNIST data (the complete code at the end does this through `torchvision.datasets.MNIST`). Then:

1. Build the CNN model.
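The shape comments in the model follow from the usual output-size formula for convolution and pooling, out = floor((in + 2*padding - kernel) / stride) + 1 (for dilation 1; `nn.MaxPool2d`'s stride defaults to its kernel size). With stride 1 and an odd kernel, padding = (kernel_size - 1) / 2 keeps the side length unchanged. A minimal pure-Python sketch that traces a 28x28 MNIST image through the two conv/pool blocks; the helper names `conv_out` and `trace_mnist_cnn` are illustrative, not part of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a conv or pooling layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_mnist_cnn(side=28):
    """Trace (channels, height, width) through conv1 -> pool1 -> conv2 -> pool2."""
    shapes = [(1, side, side)]
    side = conv_out(side, kernel=5, stride=1, padding=2)  # conv1: 16 filters, 5x5
    shapes.append((16, side, side))
    side = conv_out(side, kernel=2, stride=2)             # pool1: 2x2 max pooling
    shapes.append((16, side, side))
    side = conv_out(side, kernel=5, stride=1, padding=2)  # conv2: 32 filters, 5x5
    shapes.append((32, side, side))
    side = conv_out(side, kernel=2, stride=2)             # pool2: 2x2 max pooling
    shapes.append((32, side, side))
    return shapes


shapes = trace_mnist_cnn()
for s in shapes:
    print(s)
c, h, w = shapes[-1]
# Number of input features the final nn.Linear layer must accept
print("flattened features fed to nn.Linear:", c * h * w)
```

The last shape, (32, 7, 7), is why the output layer is declared as `nn.Linear(32*7*7, 10)`.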

```python
import torch.nn as nn


class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        # Generally speaking, a convolutional network contains:
        #   1. convolution layers
        #   2. nonlinear activation layers
        #   3. pooling layers
        # followed here by a fully connected output layer.
        self.conv1 = nn.Sequential(
            nn.Conv2d(              # input --> (1, 28, 28)
                in_channels=1,      # number of input channels: 1 for grayscale, 3 for RGB
                out_channels=16,    # number of output channels (feature maps)
                kernel_size=5,      # each filter scans a 5x5 region
                stride=1,           # the filter moves one pixel at a time
                padding=2,          # zero padding = (kernel_size - 1) / 2 = (5 - 1) / 2 = 2
            ),                      # 2d = two-dimensional convolution --> (16, 28, 28)
            nn.ReLU(),              # nonlinear activation layer
            nn.MaxPool2d(kernel_size=2),  # keep the maximum of each 2x2 window --> (16, 14, 14)
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(              # input --> (16, 14, 14)
                in_channels=16,     # the 16 channels produced by conv1
                out_channels=32,    # produce 32 channels
                kernel_size=5,      # each filter scans a 5x5 region
                stride=1,           # the filter moves one pixel at a time
                padding=2,          # zero padding = (kernel_size - 1) / 2 = (5 - 1) / 2 = 2
            ),                      # --> (32, 14, 14)
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),  # keep the maximum of each 2x2 window --> (32, 7, 7), 3D per sample
        )
        # Fully connected layer: its input is the flattened 2D tensor (batch, 32*7*7)
        self.out = nn.Linear(32 * 7 * 7, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)           # (batch, 32, 7, 7)
        # Flatten each sample's 3D feature maps into a vector: 3D data --> 2D data
        x = x.view(x.size(0), -1)   # (batch, 32 * 7 * 7)
        output = self.out(x)
        return output
```

2. Design the loss function and choose the optimizer.
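`nn.CrossEntropyLoss` takes the raw scores (logits) returned by `self.out` together with integer class labels. For each sample it applies log-softmax and takes the negative log-probability of the true class, then averages over the batch (the default `reduction='mean'`), which is why `forward` does not end with a softmax. A minimal pure-Python sketch of that computation, for illustration only (not PyTorch's implementation):

```python
import math


def cross_entropy(logits_batch, labels):
    """Mean cross-entropy over a batch of raw scores and integer labels."""
    total = 0.0
    for logits, label in zip(logits_batch, labels):
        # Numerically stable log-sum-exp: subtract the max before exponentiating
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[label]  # equals -log(softmax(logits)[label])
    return total / len(labels)


# Uniform scores over 10 classes give log(10), about 2.3026
print(cross_entropy([[0.0] * 10], [3]))
# A confident, correct prediction gives a loss close to 0
print(cross_entropy([[10.0 if k == 7 else 0.0 for k in range(10)]], [7]))
```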

```python
import torch
import torch.nn as nn

# Optimizer: Adam over all model parameters (cnn = CNN() and LR are defined in the complete code)
optimizer = torch.optim.Adam(cnn.parameters(), lr=LR)
# Loss function: cross-entropy
loss_fn = nn.CrossEntropyLoss()
```

3. Implement model training and testing.
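In the test step, `torch.max(test_output, 1)[1]` returns, for each row of scores, the index of the largest score, i.e. the predicted digit; accuracy is the fraction of predictions that equal the labels. The same logic in plain Python, using made-up scores over three classes (the real model outputs 10 columns):

```python
def argmax(scores):
    """Index of the largest score (what torch.max(..., 1)[1] gives per row)."""
    return max(range(len(scores)), key=lambda k: scores[k])


def accuracy(score_rows, labels):
    """Return the predictions and the fraction that match the labels."""
    preds = [argmax(row) for row in score_rows]
    correct = sum(p == y for p, y in zip(preds, labels))
    return preds, correct / len(labels)


# Hypothetical scores for three images
scores = [[0.1, 2.5, -1.0], [3.0, 0.2, 0.1], [0.0, 0.3, 0.9]]
labels = [1, 0, 1]
print(accuracy(scores, labels))
```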

```python
import torch

for epoch in range(EPOCH):
    # Iterate over the training data in mini-batches of (images, labels)
    for step, (b_x, b_y) in enumerate(train_loader):
        output = cnn(b_x)              # forward pass: predict class scores
        loss = loss_fn(output, b_y)    # compute the loss value
        optimizer.zero_grad()          # clear the gradients from the previous step
        loss.backward()                # backpropagation
        optimizer.step()               # gradient-descent update

        # Every 50 steps, print the current epoch, loss and accuracy
        if step % 50 == 0:
            with torch.no_grad():      # no gradients are needed for evaluation
                test_output = cnn(test_x)
            y_pred = torch.max(test_output, 1)[1].squeeze()
            accuracy = (y_pred == test_y).sum().item() / test_y.size(0)
            print('now epoch : ', epoch, ' | loss : %.4f ' % loss.item(), ' | accuracy : ', accuracy)
```
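How often the loop prints: the MNIST training set has 60,000 images, so with `BATCH_SIZE=50` one epoch is 60000 / 50 = 1200 batches, and `step % 50 == 0` fires at steps 0, 50, ..., 1150, i.e. 24 times per epoch. A quick check of that arithmetic:

```python
import math

N_TRAIN = 60000   # size of the MNIST training set
BATCH_SIZE = 50
LOG_EVERY = 50

# DataLoader yields ceil(N / batch_size) batches when drop_last=False (the default)
steps_per_epoch = math.ceil(N_TRAIN / BATCH_SIZE)
logged_steps = [s for s in range(steps_per_epoch) if s % LOG_EVERY == 0]
print(steps_per_epoch, len(logged_steps), logged_steps[-1])
```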

Complete code:

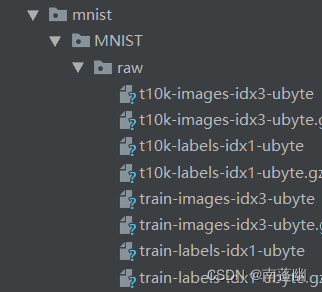

```python
import torch
import torch.nn as nn
import torch.utils.data as Data
import torchvision

# Hyperparameters
EPOCH = 1
BATCH_SIZE = 50
LR = 0.001
DOWNLOAD_MNIST = True  # torchvision skips the download if the files are already in ./mnist

train_data = torchvision.datasets.MNIST(
    root='./mnist',
    train=True,
    # Convert each image to a PyTorch tensor and scale pixel values from 0-255 to 0-1
    transform=torchvision.transforms.ToTensor(),
    download=DOWNLOAD_MNIST,
)
train_loader = Data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

test_data = torchvision.datasets.MNIST(root='./mnist', train=False, download=DOWNLOAD_MNIST)
# Use only the first 2000 test images (enough for monitoring), add the channel
# dimension and normalize to 0-1 by hand: shape (2000, 1, 28, 28)
test_x = torch.unsqueeze(test_data.data, dim=1).type(torch.FloatTensor)[:2000] / 255
test_y = test_data.targets[:2000]

''' Build the CNN '''
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        # Convolution -> ReLU activation -> max pooling, twice, then a fully connected layer
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5,
                      stride=1, padding=2),        # (1, 28, 28) --> (16, 28, 28)
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),           # --> (16, 14, 14)
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5,
                      stride=1, padding=2),        # --> (32, 14, 14)
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),           # --> (32, 7, 7)
        )
        self.out = nn.Linear(32 * 7 * 7, 10)       # flattened features --> 10 class scores

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)                          # (batch, 32, 7, 7)
        x = x.view(x.size(0), -1)                  # flatten: (batch, 32 * 7 * 7)
        output = self.out(x)
        return output


cnn = CNN()
# print(cnn)

optimizer = torch.optim.Adam(cnn.parameters(), lr=LR)  # Adam optimizer
loss_fn = nn.CrossEntropyLoss()                          # cross-entropy loss

''' Train the model '''
for epoch in range(EPOCH):
    for step, (b_x, b_y) in enumerate(train_loader):
        output = cnn(b_x)                # forward pass
        loss = loss_fn(output, b_y)      # compute the loss value
        optimizer.zero_grad()            # clear the gradients from the previous step
        loss.backward()                  # backpropagation
        optimizer.step()                 # gradient-descent update

        # Every 50 steps, print the epoch, loss and accuracy on the 2000 test images
        if step % 50 == 0:
            with torch.no_grad():
                test_output = cnn(test_x)
            y_pred = torch.max(test_output, 1)[1].squeeze()
            accuracy = (y_pred == test_y).sum().item() / test_y.size(0)
            print('now epoch : ', epoch, ' | loss : %.4f ' % loss.item(), ' | accuracy : ', accuracy)

''' Print predictions for the first ten test images '''
with torch.no_grad():
    test_output = cnn(test_x[:10])
y_pred = torch.max(test_output, 1)[1].squeeze()  # index of the highest score = predicted digit
print(y_pred.tolist(), 'prediction result')
print(test_y[:10].tolist(), 'real result')
```

- Summary of language features of fluent dart

- SQL statement exercises

- R语言使用nnet包的multinom函数构建无序多分类logistic回归模型、使用回归系数及其标准误计算每个系数对应的Z统计量的值

- Expand Disk C (allocate the memory of disk d to Disk C)

- [leetcode] 13. Roman numeral to integer

- Facebook出手!自适应梯度打败人工调参

- VS2013 帮助文档中没有 win32/com

- openGauss内核:SQL解析过程分析

- A bug liver a week I can't help mentioning issue

- NAACL 2022 | 机器翻译SOTA模型的蒸馏

猜你喜欢

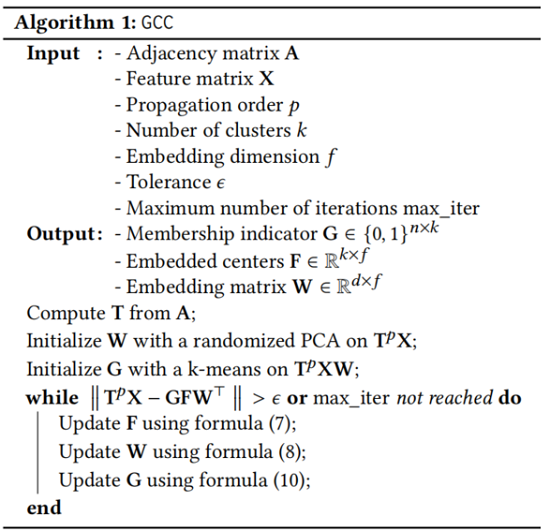

GCC efficient graph revolution for joint node representationlearning and clustering

See how the interface control devaxpress WinForms creates a virtual keyboard

CODING DevOps 助力中化信息打造新一代研效平台,驱动“线上中化”新未来

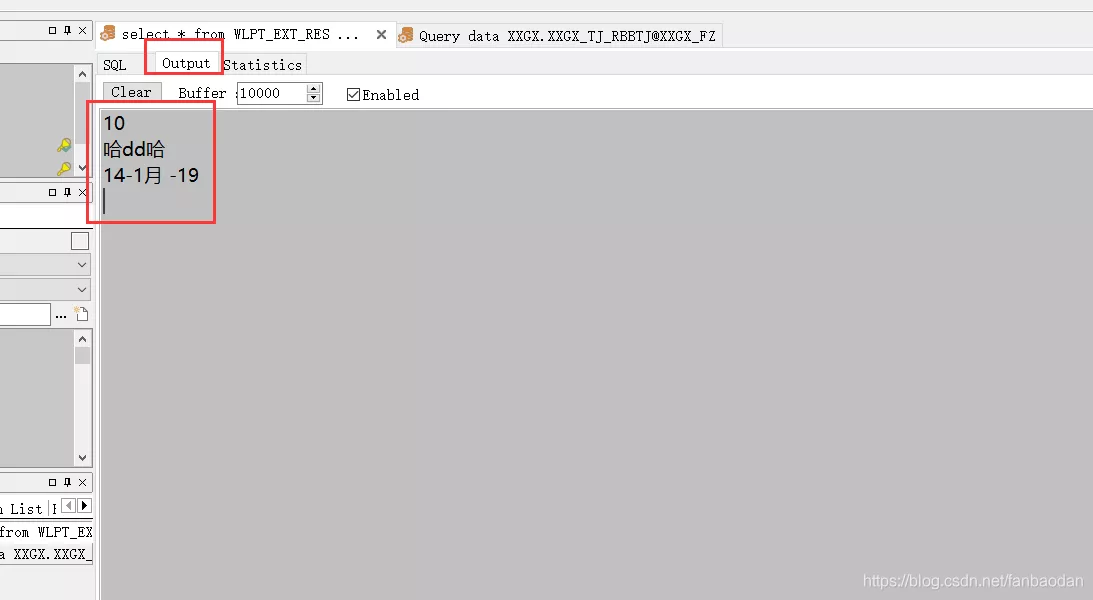

ORACLE中dbms_output.put_line输出问题的解决过程

Grand launch of qodana: your favorite CI code quality platform

Analysis of PostgreSQL storage structure

GBASE南大通用亮相第六届世界智能大会

Visual Studio 2010 配置和使用Qt5.6.3

Express template engine

Visual Studio 2010 configuring and using qt5.6.3

随机推荐

SAP MTS/ATO/MTO/ETO专题之九:M+M模式前后台操作,策略用50,提前准备原材料和半成品

Innovation and upgrading of supply chain system driven management mode in petrochemical industry and strengthening internal management of enterprises

Classic model transformer

SAP mts/ato/mto/eto topic 9: front and back desk operation in m+m mode, strategy 50, preparation of raw materials and semi-finished products in advance

Basic grammar of C language

web Worker 轮询请求

Technical secrets of ByteDance data platform: implementation and optimization of complex query based on Clickhouse

深度学习基础汇总

Express template engine

隆重推出 Qodana:您最爱的 CI 的代码质量平台

C语言学习-19-全排列

Visual Studio 2010 compilation qt5.6.3

【高并发基础】MySQL 不同事务隔离级别下的并发隐患及解决方案

Halcon basic summary (I) cutting pictures and rotating images

一种跳板机的实现思路

IPDK — Overview

sql语句 练习题

ROS知识点——ROS创建工作空间

Visual Studio 2010 编绎Qt5.6.3

Yiwen teaches you to quickly generate MySQL database diagram