当前位置:网站首页>Self learning neural network series - 7 feedforward neural network pre knowledge

Self learning neural network series - 7 feedforward neural network pre knowledge

2022-06-26 09:10:00 【ML_ python_ get√】

7 Feedforward neural network pre knowledge

One Perceptron algorithm

1 Model form

z = ∑ i = 0 D w d x d + b z = \sum_{i=0}^D w_dx_d+b z=i=0∑Dwdxd+b

2 Linear classifier

- Accept multiple input signals , Output a signal

- Neuron : Weighting the input signal , If the weighted number meets a certain condition, enter 1, Otherwise output 0

- The weight represents the importance of the signal

3 Existing problems

And gate : Only two inputs are 1 When the output 1, Other situation output 0

NAND gate : Invert the and gate output , Only two inputs are 1 When the output 0, Otherwise output 1

Or gate : As long as one input is 1, Then output 1, Only all inputs are 0, Just output 0

Exclusive OR gate : Only one input is 1 when , Will enter 1, If the two inputs are 1 when , Output 0, Pictured 1 Shown

Perceptron algorithms cannot handle XOR gates

The idea of perceptron algorithm : As long as the parameters of the sensor are adjusted, the switch between different doors can be realized ; Parameter adjustment is left to the computer , Let the computer decide what kind of door .

4 python Realization

(1) And gate

def AND(x1,x2):

''' Implementation of and gate '''

w1,w2,theta = 0.5,0.5,0.7

tmp = x1*w1+x2*w2

if tmp<=theta:

return 0

elif tmp>theta:

return 1

# Test functions

print(AND(1,1)) # 1

print(AND(0,0)) # 0

print(AND(1,0)) # 0

print(AND(0,1)) # 0

# Use offset and numpy Realization

def AND(x1,x2):

import numpy as np

x = np.array([x1,x2])

w = np.array([0.5,0.5])

b = -0.7 # threshold , Adjust how easily neurons are activated

tmp = np.sum(w*x) +b

if tmp<=0:

return 0

elif tmp>0:

return 1

# test Just like a normal implementation

print(AND(1,1)) # 1

print(AND(0,0)) # 0

print(AND(1,0)) # 1

print(AND(0,1)) # 1

(2) NAND gate

- The output is just the opposite , The weights and offsets are opposite to each other

def NAND(x1,x2):

''' NAND gate '''

import numpy as np

x = np.array([x1,x2])

w = np.array([-0.5,-0.5])

b = 0.7

tmp = np.sum(w*x)+b

if tmp <=0:

return 0

elif tmp>0:

return 1

# test

print(NAND(1,1)) # 0

print(NAND(1,0)) # 1

print(NAND(0,1)) # 1

print(NAND(0,0)) # 1

(3) Or gate

- The absolute value of the offset is less than 0.5 that will do Easier to activate

def OR(x1,x2):

import numpy as np

x = np.array([x1,x2])

w = np.array([0.5,0.5]) # As long as not both inputs go 0, It outputs 1

b = -0.2

tmp = np.sum(w*x)+b

if tmp<=0:

return 0

else:

return 1

OR(1,1)

OR(0,1)

OR(1,0)

OR(1,1)

5 Multi layer perceptron to solve XOR problem

- Exclusive OR gate : Cannot be separated by a straight line

- Introduce nonlinearity : Overlay layer perceptron

- Through the NAND gate, we get S1 Or door access S2 You can see the result of the XOR gate that can be reached through the and gate

- Multilayer perceptron : There are multiple linear classifiers , The nonlinear fitting can be realized through and gate

def XOR(x1,x2):

s1 = NAND(x1,x2) # NAND gate

s2 = OR(x1,x2) # Or gate

y = AND(s1,s2) # It is equivalent to combining two

return y

# test

XOR(1,1)

XOR(0,0)

XOR(1,0)

XOR(0,1)

Two Neural network structure

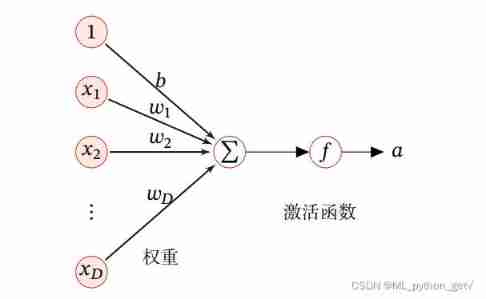

The basic idea : In the last section , Multi-layer perceptron is a multi-linear function that realizes nonlinear classification through logical operators , It is natural to associate the nonlinear transformation of linear function to solve the nonlinear separable classification problem . This kind of nonlinear transformation is an activation function in neural networks .

neural network : The multi-layer perceptron model with activation function is used to learn the statistical model of nonlinear feature expression . The common fully connected neural network structure is shown in the figure 2 Shown :

1 Common activation functions

- Any curve can be approximated by an activation function : Polynomial function can fit any point in space perfectly

- Any curve is the sum of some activation functions , Similar to the idea of spline estimation .

- The neural network is divided into several layers : Each layer uses the same activation function , These activation functions only differ in weight and bias

- Two ReLU Function to construct a step function ( Value 0,1) perhaps sigmoid function

- So in the same case ReLU The activation function of ( Neuron ) It needs to be doubled

(1)sigmoid Activation function

- Defined in machine learning sigmoid The activation function is Logstic The distribution of the CDF, Form the following :

σ ( x ) = 1 1 + e x p ( − x ) \sigma(x) ={1\over1+exp(-x)} σ(x)=1+exp(−x)1

Logical distribution belongs to exponential distribution family

Logstic Distribution is often used in periodic analysis , For example, the economic depression 、 recovery 、 prosperity 、 decline , At first the economy grew slowly , The economy began to grow rapidly after recovery , After the boom, the economy began to stagnate , Growth is slowing , Finally, it even began to decline , Continue into the depression , More in line with logistic Distribution .

The distribution function has 0-1 Characteristics of , So its output can be regarded as a probability distribution , Used for classification .

Intermediate activation value of distribution function , The characteristic of being suppressed on both sides , It conforms to the characteristics of neurons

The gradient vanishing problem

- The derivatives at both ends are close to 0

- The gradient is less than 1, When the chain rule is conducted too long , The gradient vanishing problem

(2)Tanh Activation function

- Tanh Activation function form

t a n h ( x ) = e x p ( x ) − e x p ( − x ) e x p ( x ) + e x p ( − x ) tanh(x) = {exp(x)-exp(-x)\over exp(x)+exp(-x)} tanh(x)=exp(x)+exp(−x)exp(x)−exp(−x)

- Tanh Can be seen as sigmoid Deformation of the activation function , Both belong to the family of exponential functions

t a n h ( x ) = 2 σ ( 2 x ) − 1 tanh(x) = 2\sigma(2x)-1 tanh(x)=2σ(2x)−1

- tanh(x) range by (-1,1) In line with the characteristics of centralization ,sigmoid(x) The value range is (0,1) Output constant greater than 0

(3)Relu Activation function

- Relu Activation function is the most commonly used activation function in neural networks , Form the following :

R e l u ( x ) = { x , x > 0 0 , x < = 0 Relu(x)=\begin{cases} x ,& x>0 \\ 0,& x<=0 \\ \end{cases} Relu(x)={ x,0,x>0x<=0

- Unilateral inhibition : Left saturation , The axis is infinitely far away , The phenomenon that the value of a function does not change significantly is called saturation ,sigmoid Functions and Tanh A function is a saturated function at both ends ,Relu The activation function is left saturated ;

- Wide boundaries of excitement : The activation area is wide , Positive input can be activated

- Ease the problem of gradient disappearance : Derivative is 1, To some extent, the gradient vanishing problem can be alleviated

- Relu The question of death : When the input is an outlier ,Target There will be a big deviation in our prediction , So back propagation ( The reverse is the error ), Will cause the offset b The update of is offset ( Offset offset problem ), At the same time, it may make b Negative , Offset is negative , In this way, no amount of samples will make the calculated hidden layer negative , after relu When the function is activated , The gradient of 0, Parameters are no longer updated , This phenomenon is called Death Relu problem .

(4)Leaky Relu

- To improve Relu function , Avoid death Relu problem ,Leaky Relu Activate function reenter x When it is negative , Introduce a small gradient , as follows :

L e a k y R e l u ( x ) = { x i f x > 0 γ i x i f x < = 0 Leaky Relu(x)=\begin {cases} x & if \space x>0 \\ \gamma_i x & if \space x<=0\\ \end{cases} LeakyRelu(x)={ xγixif x>0if x<=0

- among γ i \gamma_i γi It can be with estimated parameters , It can also be a constant

- ELU Activation function : Make γ i x = γ i ( e x p ( x ) − 1 ) \gamma_ix=\gamma_i(exp(x)-1) γix=γi(exp(x)−1)

(5)Softplus Activation function

- Relu The smooth version of the function , Form the following :

S o f t p l u s ( x ) = l o g ( 1 + e x p ( x ) ) Softplus(x) = log(1+exp(x)) Softplus(x)=log(1+exp(x))

- Derivative is sigmoid Activation function , The gradient vanishing problem has no sparse activation

- Unilateral inhibition 、 Wide boundaries of excitement

(6) Other activation functions

Swish function : s w i s h ( x ) = x σ ( β x ) swish(x) = x \sigma(\beta x) swish(x)=xσ(βx)

- σ Function as a gating unit , control x The output size of

- Be situated between Relu And linear function

Gelu function : G e l u ( x ) = x P ( X < = x ) Gelu(x) = xP(X<=x) Gelu(x)=xP(X<=x)

- Gaussian function is used as gating unit , control x Output

Maxout unit

- Piecewise linear functions

- Use all the outputs of the upper layer neurons instead of one of them , Get multiple parameter vectors

- The output takes the maximum value of multiple outputs after linear transformation

2 Network structure

(1) Feedforward neural networks

- It can only spread in one direction , There's no reverse flow of information

- All connected neural networks

- Convolutional neural networks

- Different from full connection : Partial connections between neurons of different layers 、 Share weight ( Convolution kernel ) Reduce the number of parameter estimates

(2) Cyclic neural network

- It can not only receive information from other neurons , You can also accept your own historical information

- The oblivion of past information + Now the information is updated + Future information

(3) Figure neural network

- Modeling graph structure data

- Nodes and edges are placed in vector space

- Establish neural networks for nodes and edges respectively

Reference material :

1. Qiu Xipeng :《 Neural networks and deep learning 》

2. Li Mu :《 Hands-on deep learning 》

边栏推荐

- Unity connects to Turing robot

- 行为树 文件说明

- How to set the shelves and windows, and what to pay attention to in the optimization process

- 编程训练7-日期转换问题

- ImportError: ERROR: recursion is detected during loading of “cv2“ binary extensions. Check OpenCV in

- dedecms小程序插件正式上线,一键安装无需任何php或sql基础

- 2021 software university ranking crawler program

- phpcms v9手机访问电脑站一对一跳转对应手机站页面插件

- Talk about the development of type-C interface

- Yolov5 advanced camera real-time acquisition and recognition

猜你喜欢

Yolov5 advanced zero environment rapid creation and testing

phpcms手机站模块实现自定义伪静态设置

Isinstance() function usage

唯品会工作实践 : Json的deserialization应用

修复小程序富文本组件不支持video视频封面、autoplay、controls等属性问题

力扣399【除法求值】【并查集】

【云原生 | Kubernetes篇】深入万物基础-容器(五)

Vipshop work practice: Jason's deserialization application

![[300+ continuous sharing of selected interview questions from large manufacturers] column on interview questions of big data operation and maintenance (I)](/img/cf/44b3983dd5d5f7b92d90d918215908.png)

[300+ continuous sharing of selected interview questions from large manufacturers] column on interview questions of big data operation and maintenance (I)

Practice is the fastest way to become a network engineer

随机推荐

Yolov5 advanced level 2 installation of labelimg

cookie session 和 token

隐藏式列表菜单以及窗口转换在Selenium 中的应用

Nacos注册表结构和海量服务注册与并发读写原理 源码分析

phpcms手机站模块实现自定义伪静态设置

[program compilation and pretreatment]

External sorting and heap size knowledge

Fix the problem that the rich text component of the applet does not support the properties of video cover, autoplay, controls, etc

Which software is safer to open an account on

Chargement à chaud du fichier XML de l'arbre de comportement

Unity webgl publishing cannot run problem

commonJS和ES6模块化的区别

isinstance()函数用法

20220213 Cointegration

[Matlab GUI] key ID lookup table in keyboard callback

小程序首页加载之前加载其他相关资源或配置(小程序的promise应用)

Tutorial 1:hello behavioc

dedecms小程序插件正式上线,一键安装无需任何php或sql基础

Upgrade phpcms applet plug-in API interface to 4.3 (add batch acquisition interface, search interface, etc.)

phpcms小程序接口新增万能接口get_diy.php