Running the WordCount program in IDEA (detailed steps)

2022-07-24 11:15:00 【What about Saipan】

IDEA version: 2020.3

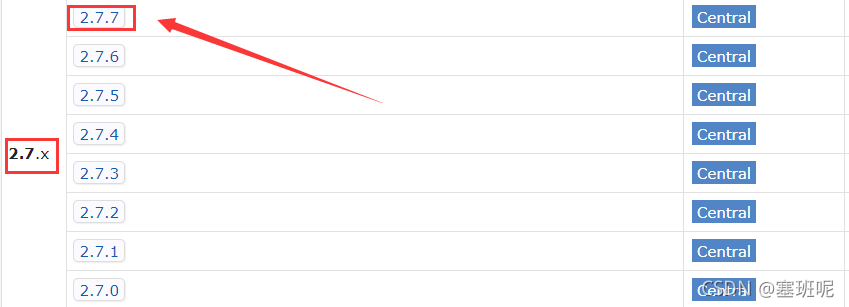

Hadoop version: 2.7.7

Preparation:

First, set up your Hadoop cluster, and make sure the Big Data Tools plugin in IDEA can connect to the HDFS distributed file system.

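Next, understand how the WordCount program works: the mapper splits each input line into words and emits a (word, 1) pair for every word, and the reducer adds up the values for each word.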
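To make the principle concrete, here is a minimal plain-Java sketch of the same map, group, and sum flow. It does not use Hadoop at all; the class name `WordCountSketch` and its methods are made up for illustration, and the in-memory grouping only stands in for what the framework does between the map and reduce phases.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Illustration only: a hypothetical class that mimics the WordCount flow in memory.
class WordCountSketch {

    // "Map" step: split one line on whitespace and emit a (word, 1) pair per token.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer st = new StringTokenizer(line);
        while (st.hasMoreTokens()) {
            pairs.add(new AbstractMap.SimpleEntry<>(st.nextToken(), 1));
        }
        return pairs;
    }

    // "Shuffle" and "reduce" steps: group the pairs by word and sum each group.
    static Map<String, Integer> reduce(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> counts = new TreeMap<>(); // keeps the words sorted
        for (Map.Entry<String, Integer> pair : pairs) {
            counts.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return counts;
    }

    // Runs the map step on every line, then reduces all emitted pairs.
    static Map<String, Integer> count(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        return reduce(pairs);
    }

    public static void main(String[] args) {
        // prints {hadoop=1, hello=2, world=1}
        System.out.println(count(Arrays.asList("hello world", "hello hadoop")));
    }
}
```

In the real job, Hadoop runs the map step on many machines and delivers all pairs with the same word to one reducer call; the sketch collapses that into a single loop.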

Then create a new Maven project (choose your own package name).


Configure the pom.xml file (import the dependencies that match your Hadoop version; mine is 2.7.7):

<dependencies>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.7</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.7</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.7.7</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.7</version>

</dependency>

</dependencies>

You can look up the matching versions in the Maven repository: https://mvnrepository.com/

Prepare the input file and the output directory

Input file: hdfs://192.168.183.101:9000/test/input/file01.txt

You can write a txt file locally and upload it to HDFS, or write the file on the virtual machine and then put it on HDFS. If you don't know how, refer to: HDFS-java Programming

Output directory: hdfs://192.168.183.101:9000/test/output. The output folder has not been created yet. The program creates it when it runs, so we don't need to create it ourselves; if the output directory already exists before the run, an error is reported.

Configure the input and output paths as program arguments (both paths are on HDFS)

The first argument is the path of the input file: hdfs://192.168.183.101:9000/test/input/file01.txt

The second argument is the output path: hdfs://192.168.183.101:9000/test/output

Note: the output path must not already exist on HDFS, otherwise an error is reported.
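If you adapt these paths to your own cluster, it helps to see which part of the URI is the NameNode address and which part is the path inside HDFS. The sketch below only uses `java.net.URI` from the JDK; the class `HdfsUriSketch` and its two helper methods are hypothetical names for this illustration, and the address is simply the one used in this article.

```java
import java.net.URI;

// Illustration only: splits an hdfs:// URI into its NameNode address and HDFS path.
class HdfsUriSketch {

    // Returns "host:port", i.e. the NameNode address part of the URI.
    static String nameNode(String hdfsUri) {
        URI uri = URI.create(hdfsUri);
        return uri.getHost() + ":" + uri.getPort();
    }

    // Returns only the path inside HDFS, as used by commands such as "hadoop fs -cat".
    static String pathOnly(String hdfsUri) {
        return URI.create(hdfsUri).getPath();
    }

    public static void main(String[] args) {
        String output = "hdfs://192.168.183.101:9000/test/output";
        System.out.println(nameNode(output)); // prints 192.168.183.101:9000
        System.out.println(pathOnly(output)); // prints /test/output
    }
}
```

Only the host and port need to change for a different cluster; the path part stays whatever directory layout you chose on HDFS.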

The complete code:

package cn.neu.connection.test;

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

public static class Map extends Mapper<Object,Text,Text,IntWritable>{

private static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(Object key,Text value,Context context) throws IOException,InterruptedException{

StringTokenizer st = new StringTokenizer(value.toString());

while(st.hasMoreTokens()){

word.set(st.nextToken());

context.write(word, one);

}

}

}

public static class Reduce extends Reducer<Text,IntWritable,Text,IntWritable>{

private static IntWritable result = new IntWritable();

public void reduce(Text key,Iterable<IntWritable> values,Context context) throws IOException,InterruptedException{

int sum = 0;

for(IntWritable val:values){

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String[] args) throws Exception{

System.setProperty("HADOOP_USER_NAME", "lingyi");

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf,args).getRemainingArgs();

if(otherArgs.length != 2){

System.err.println("Usage: WordCount <in> <out>");

System.exit(2);

}

Job job = Job.getInstance(conf, "word count"); // the Job constructor is deprecated in Hadoop 2.x

job.setJarByClass(WordCount.class);

job.setMapperClass(Map.class);

job.setCombinerClass(Reduce.class);

job.setReducerClass(Reduce.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

otherArgs[0]="hdfs://192.168.183.101:9000/test/input/file01.txt"; // input path

otherArgs[1]="hdfs://192.168.183.101:9000/test/output"; // output path; it must not exist on HDFS before the run, otherwise an error is reported

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

Two things to note:

Change the user name here to your own

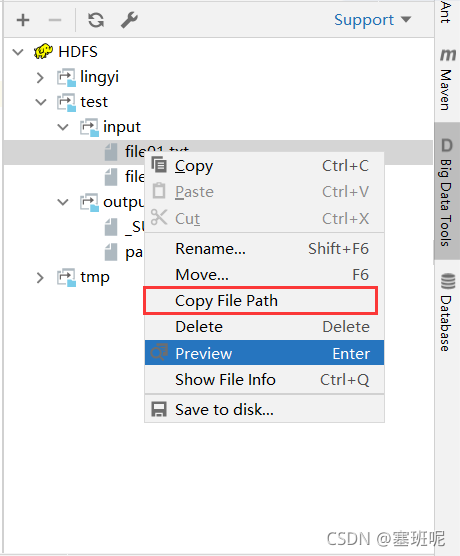

The input and output paths are up to you, but they must be paths on HDFS (you can quickly copy a path from the Big Data Tools plugin).

At this point the program can be run.

If the run ends with exit code 1, the job failed.

You can also view the part-r-00000 output file from Xshell or on the virtual machine:

hadoop fs -cat /test/output/part-r-00000
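With the default text output format, each line of part-r-00000 holds a word, a tab character, and its count. As a rough sketch of how such output could be read back into a program, the plain-Java helper below parses that layout; the class name `PartFileSketch` and the sample contents are made up for illustration and are not output from the author's cluster.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustration only: parses "word<TAB>count" lines such as those in part-r-00000.
class PartFileSketch {

    // Turns the text of an output file into a word -> count map, keeping line order.
    static Map<String, Integer> parse(String contents) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : contents.split("\n")) {
            int tab = line.indexOf('\t');
            if (tab < 0) {
                continue; // skip blank or malformed lines
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            counts.put(word, count);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up sample in the same layout as the job output.
        String sample = "hadoop\t1\nhello\t2\nworld\t1\n";
        System.out.println(parse(sample)); // prints {hadoop=1, hello=2, world=1}
    }
}
```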

We're not done yet.

There is still a problem: every time you run the program, you have to delete the output path or change it to a different one.

Here's the solution:

Before each run, check whether the output path exists, and delete it if it does.

The modified code (the beginning of the main method):

System.setProperty("HADOOP_USER_NAME", "lingyi");

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf,args).getRemainingArgs();

if(otherArgs.length != 2){

System.err.println("Usage: WordCount <in> <out>");

System.exit(2);

}

// Before each run, check whether the output path exists, and delete it if it does

Path outPath = new Path(otherArgs[1]);

// Resolve the file system from the path itself, so an hdfs:// path also works when fs.defaultFS is not set in conf

FileSystem fs = outPath.getFileSystem(conf);

if(fs.exists(outPath)) {

fs.delete(outPath, true);

}