
GAN Development Series III (LapGAN, SRGAN)

2022-07-24 17:33:00 (51CTO)


The previous articles introduced generative adversarial networks (GANs) and a number of GAN variants (listed below). This installment continues with more of the classic GAN models.

Introduction to Generative Adversarial Networks (GAN)

GAN Development Series I (CGAN, DCGAN, WGAN, WGAN-GP, LSGAN, BEGAN)

GAN Development Series II (PGGAN, SinGAN)

I. LapGAN

Paper: "Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks"

Paper link: https://arxiv.org/abs/1506.05751

1. Basic idea

LapGAN builds on GAN and CGAN. It uses a Laplacian pyramid to generate images coarse-to-fine, which lets it produce high-resolution images. At each pyramid level a generator learns the residual relative to the adjacent coarser level, and stacking these CGANs yields the final resolution. As mentioned in the previous article, CGAN adds a conditioning constraint to GAN, which alleviates the problem that the original GAN's generator produces samples too freely (with no control over what is generated).

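The original GAN objective is:

$$\min_G \max_D V(D,G) = \mathbb{E}_{x\sim p_{\text{data}}(x)}[\log D(x)] + \mathbb{E}_{z\sim p_z(z)}[\log(1-D(G(z)))]$$

The CGAN objective conditions both the generator and the discriminator on extra information $y$:

$$\min_G \max_D V(D,G) = \mathbb{E}_{x\sim p_{\text{data}}(x)}[\log D(x \mid y)] + \mathbb{E}_{z\sim p_z(z)}[\log(1-D(G(z \mid y)))]$$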
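To make the value function concrete, here is a minimal NumPy sketch that estimates V(D, G) = E[log D(x)] + E[log(1 − D(G(z)))] from one batch of discriminator outputs. The inputs `d_real` and `d_fake` are hypothetical stand-ins for D(x) and D(G(z)); no networks are involved. For CGAN the same estimator applies, with the probabilities coming from D(x|y) and D(G(z|y)) instead.

```python
import numpy as np

def gan_value(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))].

    d_real: discriminator probabilities on real samples, D(x) (D(x|y) for CGAN)
    d_fake: discriminator probabilities on generated samples, D(G(z)) (D(G(z|y)) for CGAN)
    """
    # Clip away from 0 and 1 so neither logarithm can blow up.
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1.0 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))

# A confident, correct discriminator pushes V up toward its maximum of 0 ...
print(gan_value([0.9, 0.8], [0.1, 0.2]))
# ... while at the theoretical optimum D outputs 1/2 everywhere and V = -log 4.
print(gan_value([0.5], [0.5]))  # ≈ -1.386
```

D is trained to maximize this quantity and G to minimize it, which is exactly the min-max structure of both formulas.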

2. Laplacian pyramid

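A Gaussian pyramid is obtained by repeatedly blurring and downsampling an image in scale space, while a Laplacian pyramid stores the detail lost between adjacent Gaussian levels. Let $d(\cdot)$ be a downsampling operator that blurs the image and halves its size, and $u(\cdot)$ an upsampling operator that smooths it and doubles its size. First build the Gaussian pyramid by applying $d(\cdot)$ to the image $I_0$ $K$ times in succession:

$$G(I) = [I_0, I_1, \dots, I_K], \qquad I_{k+1} = d(I_k)$$

The top ($K$-th) level of the Laplacian pyramid is the coarsest Gaussian level itself:

$$h_K = I_K$$

The Laplacian pyramid on the other levels is:

$$h_k = I_k - u(I_{k+1}), \qquad k = 0, \dots, K-1$$

That is, level $k$ of the Laplacian pyramid equals level $k$ of the Gaussian pyramid minus the upsampled level $k+1$ of the Gaussian pyramid.

The image is restored from the Laplacian pyramid with the backward recurrence, starting from $I_K = h_K$:

$$I_k = u(I_{k+1}) + h_k$$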
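The construction and the restoration recurrence can be sketched in a few lines of NumPy. As a simplifying assumption, d(·) here is 2x2 average pooling and u(·) is nearest-neighbour upsampling; the LapGAN paper uses smoothing filters instead. Because each h_k is defined as I_k − u(I_{k+1}), the reconstruction is exact for any choice of d and u. Image sides must be divisible by 2^K.

```python
import numpy as np

def down(img):
    """d(.): blur + decimate, approximated by 2x2 average pooling (assumption)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def up(img):
    """u(.): expand to twice the size, approximated by nearest-neighbour repetition (assumption)."""
    return img.repeat(2, axis=0).repeat(2, axis=1)

def gaussian_pyramid(img, K):
    """Return [I_0, I_1, ..., I_K] with I_{k+1} = d(I_k)."""
    levels = [img]
    for _ in range(K):
        levels.append(down(levels[-1]))
    return levels

def laplacian_pyramid(img, K):
    """Return [h_0, ..., h_K] with h_k = I_k - u(I_{k+1}) and h_K = I_K."""
    g = gaussian_pyramid(img, K)
    coeffs = [g[k] - up(g[k + 1]) for k in range(K)]
    coeffs.append(g[K])
    return coeffs

def reconstruct(coeffs):
    """Backward recurrence I_k = u(I_{k+1}) + h_k, starting from I_K = h_K."""
    img = coeffs[-1]
    for k in range(len(coeffs) - 2, -1, -1):
        img = up(img) + coeffs[k]
    return img

rng = np.random.default_rng(0)
I0 = rng.random((64, 64))
h = laplacian_pyramid(I0, K=3)
print([level.shape for level in h])     # [(64, 64), (32, 32), (16, 16), (8, 8)]
print(np.allclose(reconstruct(h), I0))  # True
```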

3. How LapGAN works

Take K = 3 as an example. The Laplacian pyramid then has 4 levels, with 4 generators G0, G1, G2 and G3 producing images at 64x64, 32x32, 16x16 and 8x8 respectively. The lowest-resolution level is trained as an ordinary GAN whose only input is noise. Each higher-resolution level is trained as a CGAN whose inputs are noise plus a condition image: the next coarser Gaussian level, upsampled to the current resolution. The generator at that level outputs the residual (the high-frequency detail) for that level.
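The training setup for one CGAN level can be sketched as follows. Following the LapGAN paper's description, the condition is the low-pass image l_k = u(d(I_k)); with equal probability the discriminator D_k then sees either the real residual h_k = I_k − l_k or a generated one, G_k(z_k, l_k). The operators d and u are simplified as before (average pooling and nearest-neighbour repetition, an assumption), and `toy_G0` is a hypothetical placeholder, not a trained network.

```python
import numpy as np

def down(img):
    """d(.): blur + decimate, approximated by 2x2 average pooling (assumption)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def up(img):
    """u(.): expand 2x, approximated by nearest-neighbour repetition (assumption)."""
    return img.repeat(2, axis=0).repeat(2, axis=1)

def discriminator_input(I_k, generator, rng):
    """Build one (condition, residual, label) example for the level-k discriminator D_k.

    generator: hypothetical callable G_k(z, l) returning a residual image.
    label: 1 for the real residual, 0 for a generated one.
    """
    l_k = up(down(I_k))                      # low-pass condition l_k = u(d(I_k))
    if rng.random() < 0.5:                   # real case
        return l_k, I_k - l_k, 1             # real high-pass residual h_k = I_k - l_k
    z_k = rng.standard_normal(l_k.shape)     # noise at this resolution
    return l_k, generator(z_k, l_k), 0       # generated residual G_k(z_k, l_k)

rng = np.random.default_rng(0)
I0 = rng.random((64, 64))
toy_G0 = lambda z, l: 0.05 * z               # hypothetical stand-in for a trained G_0
l0, h0, label = discriminator_input(I0, toy_G0, rng)
print(l0.shape, h0.shape, label)
```

Giving D_k the condition l_k alongside the residual is what makes each level a conditional GAN rather than an unconditional one.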

LapGAN chains a series of CGANs, progressively generating images at higher and higher resolution.
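At sampling time the chain runs coarse-to-fine using the same recurrence as pyramid reconstruction, except that every residual comes from a generator: Ĩ_K = G_K(z_K), then Ĩ_k = u(Ĩ_{k+1}) + G_k(z_k, u(Ĩ_{k+1})). The sketch below assumes u is nearest-neighbour upsampling and uses hypothetical untrained lambdas in place of G0..G3, so it only illustrates the data flow, not image quality.

```python
import numpy as np

def up(img):
    """u(.): expand 2x, approximated by nearest-neighbour repetition (assumption)."""
    return img.repeat(2, axis=0).repeat(2, axis=1)

def lapgan_sample(generators, coarse_size, rng):
    """Coarse-to-fine LapGAN sampling.

    generators[K]: z -> I~_K          (unconditional GAN at the coarsest level)
    generators[k]: (z, l_k) -> h~_k   (CGAN producing the level-k residual), k < K
    """
    K = len(generators) - 1
    img = generators[K](rng.standard_normal((coarse_size, coarse_size)))
    for k in range(K - 1, -1, -1):
        l_k = up(img)                         # condition: upsampled coarser image
        z_k = rng.standard_normal(l_k.shape)  # fresh noise for this level
        img = l_k + generators[k](z_k, l_k)   # I~_k = u(I~_{k+1}) + G_k(z_k, l_k)
    return img

# Hypothetical stand-ins for G0..G3 (K = 3): 8x8 -> 16x16 -> 32x32 -> 64x64.
residual_G = lambda z, l: 0.05 * z
coarse_G = lambda z: np.tanh(z)
gens = [residual_G, residual_G, residual_G, coarse_G]
sample = lapgan_sample(gens, coarse_size=8, rng=np.random.default_rng(0))
print(sample.shape)  # (64, 64)
```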

LapGAN was evaluated on three datasets, CIFAR10, STL10 and LSUN. The generated images are shown below:

II. SRGAN

Paper: "Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network"

Paper link: https://arxiv.org/pdf/1609.04802.pdf

Code: https://github.com/OUCMachineLearning/OUCML/blob/master/GAN/srgan_celebA/srgan.py

1. Basic idea

SRGAN applies GANs to image super-resolution. Convolutional neural networks already perform very well at conventional super-resolution reconstruction and reach a high peak signal-to-noise ratio (PSNR) by minimizing MSE as the objective. SRGAN is the first framework able to recover photo-realistic images from inputs downsampled by a factor of 4. The authors propose a perceptual loss function made up of an adversarial loss and a content loss. The adversarial loss comes from a discriminator trained to tell real images apart from generated super-resolution images, while the content loss targets perceptual (visual) similarity rather than pixel-wise similarity.

Super-resolution methods usually take the mean squared error (MSE) between the reconstructed super-resolution image and the ground-truth image as the objective, since minimizing MSE maximizes PSNR. However, MSE and PSNR do not reflect perceived visual quality well: in the figure below, the result with the highest PSNR is not the one that looks best.
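A toy example (illustrative only, not from the paper) shows why a per-pixel metric rewards over-smoothing. PSNR is computed as 10·log10(MAX² / MSE), assuming pixel values in [0, 1]. A sharp checkerboard texture offset by a single pixel looks essentially identical to the original yet gets the worst possible MSE, while a flat grey image that has lost all texture scores about 6 dB higher.

```python
import numpy as np

def mse(a, b):
    """Mean squared error over all pixels."""
    return float(np.mean((a - b) ** 2))

def psnr(a, b, max_val=1.0):
    """PSNR in dB for images scaled to [0, max_val]: 10 * log10(max_val^2 / MSE)."""
    m = mse(a, b)
    if m == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / m))

# Ground truth: a fine 0/1 checkerboard texture.
i, j = np.indices((32, 32))
hr = ((i + j) % 2).astype(float)

shifted = np.roll(hr, 1, axis=1)  # same texture, offset by one pixel
flat = np.full_like(hr, 0.5)      # "over-smoothed" estimate: texture averaged away

print(psnr(hr, shifted))  # 0.0 (MSE = 1.0)
print(psnr(hr, flat))     # ≈ 6.02 (MSE = 0.25)
```

Averaging over all plausible outputs minimizes MSE, which is exactly the over-smoothing behaviour the perceptual loss is meant to avoid.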

2. Network structure

Per-pixel MSE tends to produce overly smooth results and struggles to recover fine image detail. The paper therefore designs a new loss function that replaces the per-pixel MSE loss with a content loss. The perceptual loss is the weighted sum of the content loss and the adversarial loss:

$$l^{SR} = l^{SR}_X + 10^{-3}\, l^{SR}_{Gen}$$

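Content loss: instead of comparing pixels, the per-pixel loss is computed on the feature maps of a chosen layer of a pretrained VGG19 network. With $\phi_{i,j}$ denoting the feature map obtained by the $j$-th convolution (after activation) before the $i$-th max-pooling layer, and $W_{i,j}$, $H_{i,j}$ its dimensions:

$$l^{SR}_{VGG/i,j} = \frac{1}{W_{i,j}H_{i,j}} \sum_{x=1}^{W_{i,j}} \sum_{y=1}^{H_{i,j}} \left(\phi_{i,j}(I^{HR})_{x,y} - \phi_{i,j}(G_{\theta_G}(I^{LR}))_{x,y}\right)^2$$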

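Adversarial loss: defined from the discriminator's probabilities on the generated images over all $N$ training samples. For better gradient behaviour the paper minimizes $-\log D_{\theta_D}(G_{\theta_G}(I^{LR}))$ rather than $\log(1 - D_{\theta_D}(G_{\theta_G}(I^{LR})))$:

$$l^{SR}_{Gen} = \sum_{n=1}^{N} -\log D_{\theta_D}(G_{\theta_G}(I^{LR}))$$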
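Putting the pieces together, the perceptual loss l_SR = l_X + 10⁻³ · l_Gen can be computed as below. This is a NumPy sketch under stated assumptions: `phi_hr` and `phi_sr` are feature maps assumed to come from some VGG layer (no VGG is loaded here), `d_sr` holds hypothetical discriminator probabilities on the generated batch, and averaging the content loss over channels as well as W x H is a choice of this sketch.

```python
import numpy as np

def content_loss(phi_hr, phi_sr):
    """Mean squared difference between feature maps phi(I_HR) and phi(G(I_LR)).

    Averaging over channels as well as W x H is an assumption of this sketch.
    """
    return float(np.mean((phi_hr - phi_sr) ** 2))

def adversarial_loss(d_sr, eps=1e-12):
    """l_Gen = sum_n -log D(G(I_LR)_n); d_sr holds D's probabilities on the SR batch."""
    return float(np.sum(-np.log(np.clip(d_sr, eps, 1.0))))

def perceptual_loss(phi_hr, phi_sr, d_sr, adv_weight=1e-3):
    """l_SR = l_X + adv_weight * l_Gen (10^-3 is the weight given in the SRGAN paper)."""
    return content_loss(phi_hr, phi_sr) + adv_weight * adversarial_loss(d_sr)

rng = np.random.default_rng(0)
phi_hr = rng.standard_normal((64, 16, 16))            # hypothetical feature maps (C, H, W)
phi_sr = phi_hr + 0.1 * rng.standard_normal((64, 16, 16))
d_sr = np.array([0.3, 0.6, 0.9])                      # hypothetical D outputs on 3 SR images
print(perceptual_loss(phi_hr, phi_sr, d_sr))
```

The small adversarial weight keeps the content term dominant, while the adversarial term still pushes outputs toward the natural-image manifold.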

The network architecture proposed by the authors is shown below. The generator is built from residual blocks.
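In the SRGAN paper each residual block is roughly conv(3x3) → batch norm → PReLU → conv(3x3) → batch norm, with the block input added back through a skip connection. The NumPy sketch below keeps the two convolutions, the PReLU and the skip sum, but omits batch normalization (at inference it is a per-channel affine map that could be folded into the convolution). The weights and the PReLU slope of 0.25 are arbitrary placeholders, not trained values.

```python
import numpy as np

def conv3x3(x, w, b):
    """'Same'-padded 3x3 convolution (cross-correlation, as in deep-learning frameworks).

    x: (C_in, H, W); w: (C_out, C_in, 3, 3); b: (C_out,)
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))          # zero padding keeps H x W
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            patch = xp[:, dy:dy + h, dx:dx + wd]       # input shifted by (dy-1, dx-1)
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], patch)
    return out + b[:, None, None]

def prelu(x, alpha):
    """Parametric ReLU with a single slope alpha for negative inputs."""
    return np.where(x > 0, x, alpha * x)

def residual_block(x, w1, b1, w2, b2, alpha=0.25):
    """conv -> PReLU -> conv, then add the skip connection (batch norm omitted)."""
    y = prelu(conv3x3(x, w1, b1), alpha)
    y = conv3x3(y, w2, b2)
    return x + y

rng = np.random.default_rng(0)
C = 4                                                 # the paper uses 64 channels
x = rng.standard_normal((C, 8, 8))
w1 = 0.1 * rng.standard_normal((C, C, 3, 3))
w2 = 0.1 * rng.standard_normal((C, C, 3, 3))
out = residual_block(x, w1, np.zeros(C), w2, np.zeros(C))
print(out.shape)  # (4, 8, 8)
```

Because the block only has to learn a correction to its input, stacking many of them stays easy to optimize.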

The authors use a sub-pixel convolution network as the generator and a VGG-style network as the discriminator. Trained with a per-pixel difference (MSE) loss, this setup already achieves very good results. The authors then switch to their proposed perceptual loss as the optimization objective: although PSNR and SSIM are not the highest, the visual quality is better than that of the other networks, avoiding the over-smoothing typical of other methods.
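The upsampling step of a sub-pixel convolution is a "periodic shuffle": a convolution at low resolution outputs C·r² channels, which are rearranged into C channels at r times the height and width. The NumPy sketch below shows only that rearrangement, assuming the same channel ordering as PyTorch's `nn.PixelShuffle`; the SRGAN generator uses two such ×2 layers (each preceded by a convolution) to reach ×4.

```python
import numpy as np

def pixel_shuffle(x, r):
    """Rearrange (C*r*r, H, W) into (C, H*r, W*r).

    out[c, h*r + i, w*r + j] = x[c*r*r + i*r + j, h, w]
    """
    c_r2, h, w = x.shape
    if c_r2 % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    return (x.reshape(c, r, r, h, w)      # split channels into (c, i, j)
             .transpose(0, 3, 1, 4, 2)    # reorder to (c, h, i, w, j)
             .reshape(c, h * r, w * r))   # merge (h, i) -> rows, (w, j) -> cols

x = np.arange(16).reshape(4, 2, 2)
print(pixel_shuffle(x, 2)[0])
# Two x2 shuffles give x4 overall (in SRGAN a convolution sits before each one).
feat = np.zeros((3 * 16, 8, 8))
print(pixel_shuffle(pixel_shuffle(feat, 2), 2).shape)  # (3, 32, 32)
```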

边栏推荐

- Nearly 30 colleges and universities were named and praised by the Ministry of education!

- socat 端口转发

- 使用matplotlib模拟线性回归

- 微信朋友圈的高性能复杂度分析

- Keyboard input operation

- Getaverse, a distant bridge to Web3

- TCP protocol debugging tool tcpengine v1.3.0 tutorial

- Analog electricity - what is the resistance?

- Pat class A - A + B format

- UFW port forwarding

猜你喜欢

Heuristic merging (including examples of general formula and tree heuristic merging)

Getaverse, a distant bridge to Web3

List of stringutils and string methods

Kernel development

使用matplotlib模拟线性回归

Analog electricity - what is the resistance?

Wrote a few small pieces of code, broke the system, and was blasted by the boss

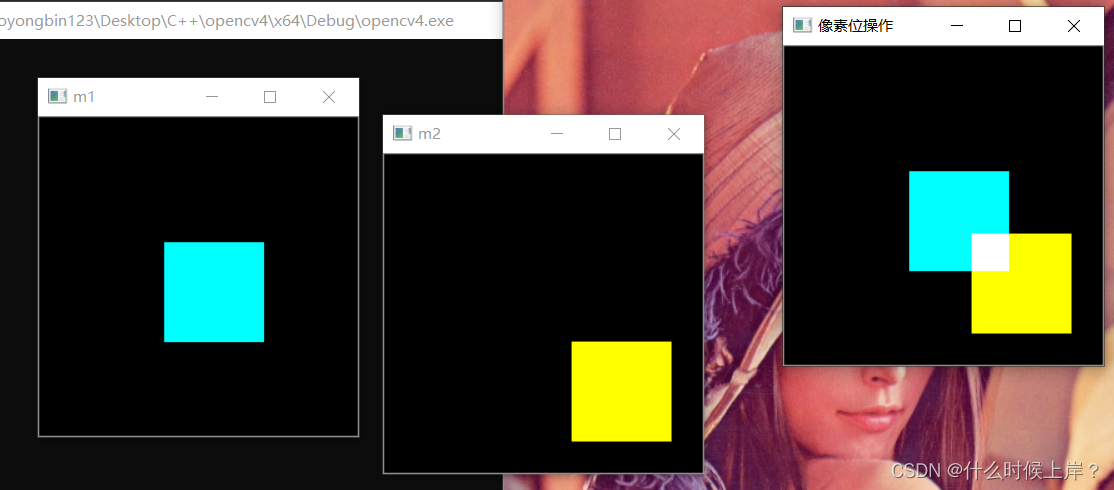

图像像素的逻辑操作

Cann training camp learns the animation stylization and AOE ATC tuning of the second season of 2022 model series

安全:如何为行人提供更多保护

随机推荐

socat 端口转发

AI opportunities for operators: expand new tracks with large models

Yolopose practice: one-stage human posture estimation with hands + code interpretation

实习报告1——人脸三维重建方法

内核开发

Live review | wonderful playback of Apache pulsar meetup (including PPT download)

awk从入门到入土(19)awk扩展插件,让awk如虎添翼

Three.js (7): local texture refresh

Hcip fourth day notes

Natbypass port forwarding

PAT甲级——A + B 格式

Use yarn

nc 端口转发

Internet Download Manager Configuration

[how to optimize her] teach you how to locate unreasonable SQL? And optimize her~~~

图像像素的逻辑操作

[wechat official account H5] authorization

Getaverse,走向Web3的远方桥梁

Internship report 1 - face 3D reconstruction method

Eth POS 2.0 stacking test network pledge process