
2022 Tsinghua Summer School Notes L2_2: Basic Introduction to CNN and RNN

2022-07-24 21:33:00 【The duck neck is gone】

2022 Tsinghua University Large Model Interdisciplinary Seminar

L2 Neural Network Basics

1 Recurrent Neural Networks (RNNs)

1.1 RNN Basics

RNNs process sequential data using sequential memory (the brain also recognizes a sequence more easily in its natural order).

The input is usually of variable length; h denotes the hidden state at each time step and y the output.

RNN cell structure

Sequential memory: the hidden state h_i at each time step is computed from the previous hidden state h_{i-1} and the current input x_i; the initial state h_0 has to be initialized explicitly.
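The recurrence can be sketched in NumPy. This is a minimal sketch assuming the common Elman-style update h_i = tanh(W_x x_i + W_h h_{i-1} + b) with a linear output y_i = W_y h_i + b_y; the notes do not spell out the exact formula, and the names `rnn_forward`, `W_x`, `W_h`, `W_y`, `b_y` are illustrative.

```python
import numpy as np

def rnn_forward(xs, W_x, W_h, b, W_y, b_y, h0=None):
    """Run a vanilla (Elman-style) RNN over a sequence.

    xs: array of shape (T, input_dim); T can be any length.
    Returns hidden states (T, hidden_dim) and outputs (T, output_dim).
    """
    hidden_dim = W_h.shape[0]
    # h0 must be initialized explicitly; zeros is a common choice.
    h = np.zeros(hidden_dim) if h0 is None else h0
    hs, ys = [], []
    for x in xs:
        # The same parameters are reused at every time step (parameter sharing).
        h = np.tanh(W_x @ x + W_h @ h + b)
        hs.append(h)
        ys.append(W_y @ h + b_y)
    return np.stack(hs), np.stack(ys)

rng = np.random.default_rng(0)
input_dim, hidden_dim, output_dim = 4, 8, 3
params = dict(
    W_x=rng.normal(scale=0.1, size=(hidden_dim, input_dim)),
    W_h=rng.normal(scale=0.1, size=(hidden_dim, hidden_dim)),
    b=np.zeros(hidden_dim),
    W_y=rng.normal(scale=0.1, size=(output_dim, hidden_dim)),
    b_y=np.zeros(output_dim),
)

# Sequences of different lengths use the same fixed-size parameters.
for T in (5, 12):
    hs, ys = rnn_forward(rng.normal(size=(T, input_dim)), **params)
    print(T, hs.shape, ys.shape)  # prints 5 (5, 8) (5, 3), then 12 (12, 8) (12, 3)
```

The loop makes the sequential nature explicit: step i cannot start until step i-1 has produced h_{i-1}, which is also why RNNs are slow to compute.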

- Applications:

- Sequence labeling (e.g., part-of-speech tagging)

- Sequence prediction (e.g., weather forecasting)

- Image captioning (generating a sentence that describes a given image)

- Text classification (e.g., sentiment classification)

- Advantages:

- Can handle input of any length

- The model size does not grow with the input length

- Parameters are shared across time steps

- Later computations can use information from earlier steps

- Disadvantages:

- Slow: time steps must be computed one after another

- In practice, later steps find it hard to use information from much earlier steps

- Vanishing gradients (common) or exploding gradients
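Why gradients vanish or explode can be seen in a toy scalar example (not from the notes). By the chain rule, the gradient of h_T with respect to h_0 is a product of T per-step factors, so it shrinks geometrically when the factors are below 1 and grows geometrically when they are above 1. The functions `tanh_rnn_grad` and `linear_rnn_grad` are hypothetical helpers for illustration.

```python
import math

def tanh_rnn_grad(w, T, h0=0.5):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(T):
        h = math.tanh(w * h)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2), which is at most |w|.
        grad *= w * (1.0 - h * h)
    return grad

def linear_rnn_grad(w, T):
    """Return d h_T / d h_0 for the linear recurrence h_t = w * h_{t-1}."""
    return w ** T

print(tanh_rnn_grad(0.9, 50))    # even smaller than 0.9**50: vanishing gradient
print(linear_rnn_grad(0.9, 50))  # 0.9**50 ≈ 0.005
print(linear_rnn_grad(1.5, 50))  # 1.5**50 ≈ 6.4e8: exploding gradient
```

Because tanh' is at most 1, the tanh nonlinearity can only make each factor smaller, which is one reason vanishing gradients are the more common problem.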

1.2 RNN Variants

Idea: improve the recurrent unit by making the hidden-layer computation more elaborate.

1.2.1 GRU (Gated Recurrent Unit)

GRU introduces a gating mechanism into the RNN to weigh past information against the current input. Looking at the formulas, note that each W is a weight matrix used only by its own term.

- Reset gate

$$\tilde{h}_i = \tanh(W_x x_i + r_i * W_h h_{i-1} + b)$$

Combining the current input with the previous hidden state (scaled by the reset gate r_i) and applying the activation gives a temporary candidate state $\tilde{h}_i$.

If r_i is close to 0, the candidate $\tilde{h}_i$ is only weakly related to the previous hidden state h_{i-1}.

- Update gate

$$h_i = z_i * h_{i-1} + (1 - z_i) * \tilde{h}_i$$

The update gate weighs the new candidate $\tilde{h}_i$ against h_{i-1} to produce the hidden state h_i that is passed on to the next time step.

When z_i is close to 1, h_i is almost identical to h_{i-1}; when z_i is close to 0, h_i essentially adopts the new candidate $\tilde{h}_i$.

- Illustration

Benefits of the gating mechanism: it controls how strongly different positions are related (so long-distance dependencies can be established quickly), and it keeps the number of parameters small (fewer than an LSTM).
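One GRU step, following the candidate and update formulas above, can be sketched as follows. The notes do not give the gate equations themselves, so this sketch assumes the standard sigmoid gates r_i = σ(W_x^r x_i + W_h^r h_{i-1} + b^r) and z_i = σ(W_x^z x_i + W_h^z h_{i-1} + b^z); the parameter names (`Wx_r`, `Wh_z`, ...) and helpers are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_cell(x, h_prev, p):
    """One GRU step. Gate equations for r and z are assumed (standard sigmoid gates)."""
    r = sigmoid(p["Wx_r"] @ x + p["Wh_r"] @ h_prev + p["b_r"])  # reset gate r_i
    z = sigmoid(p["Wx_z"] @ x + p["Wh_z"] @ h_prev + p["b_z"])  # update gate z_i
    # Candidate: h~_i = tanh(W_x x_i + r_i * W_h h_{i-1} + b)
    h_tilde = np.tanh(p["Wx"] @ x + r * (p["Wh"] @ h_prev) + p["b"])
    # Update: h_i = z_i * h_{i-1} + (1 - z_i) * h~_i
    return z * h_prev + (1.0 - z) * h_tilde

def init_params(input_dim, hidden_dim, seed=0):
    """Each gate (and the candidate) gets its own weight matrices."""
    rng = np.random.default_rng(seed)
    p = {}
    for suffix in ("_r", "_z", ""):
        p["Wx" + suffix] = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
        p["Wh" + suffix] = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
        p["b" + suffix] = np.zeros(hidden_dim)
    return p

p = init_params(input_dim=4, hidden_dim=6)
h = np.zeros(6)
for x in np.random.default_rng(1).normal(size=(10, 4)):
    h = gru_cell(x, h, p)
print(h.shape)  # (6,)
```

Forcing z toward 1 (for example with a large positive `b_z`) makes the cell copy h_{i-1} unchanged, which is how a GRU can carry information across many steps.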

1.2.2 LSTM (Long Short-Term Memory)

- Cell state C_t

- LSTM adds a new variable C_t that represents the cell state, which helps the network learn long-term dependencies.

- Forget gate f_t

$$f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f)$$

Decides which information from the previous state is removed from the cell state. It is computed from the current input x_t and the previous hidden state h_{t-1}, and each entry of f_t lies between 0 and 1; a value close to 0 means the corresponding past information is discarded.

- Input gate

Decides which new information is stored in the cell state. i_t is the input-gate activation:

$$i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i)$$

- $\tilde{C}_t$ is the candidate vector for the new cell state:

$$\tilde{C}_t = \tanh(W_C \cdot [h_{t-1}, x_t] + b_C)$$


- Update the cell state

- $C_t = f_t * C_{t-1} + i_t * \tilde{C}_t$

- First, multiply the old cell state C_{t-1} by the forget gate f_t to decide which information to discard.

- Then, multiply the candidate vector $\tilde{C}_t$ by the input gate i_t to decide which new information to add to the cell state.

- Output gate

The output gate determines which information is output as the hidden state h_t:

$$o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o)$$

$$h_t = o_t * \tanh(C_t)$$

(This can be understood as turning part of the stored information into the representation of the current word.)
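The LSTM equations above can be put together into one step. This is a minimal sketch under one assumption not in the notes: the four matrices W_f, W_i, W_C, W_o are stacked into a single matrix `W` (in the hypothetical order f, i, C~, o) so that one matrix product computes all pre-activations; this is mathematically the same as using four separate matrices.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def lstm_cell(x, h_prev, c_prev, W, b):
    """One LSTM step. W: (4H, H + input_dim) stacking W_f, W_i, W_C, W_o (assumed order)."""
    H = h_prev.shape[0]
    hx = np.concatenate([h_prev, x])      # [h_{t-1}, x_t]
    a = W @ hx + b
    f = sigmoid(a[0:H])                   # forget gate f_t
    i = sigmoid(a[H:2 * H])               # input gate i_t
    c_tilde = np.tanh(a[2 * H:3 * H])     # candidate cell state C~_t
    o = sigmoid(a[3 * H:4 * H])           # output gate o_t
    c = f * c_prev + i * c_tilde          # C_t = f_t * C_{t-1} + i_t * C~_t
    h = o * np.tanh(c)                    # h_t = o_t * tanh(C_t)
    return h, c

input_dim, H = 3, 5
rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(4 * H, H + input_dim))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x in rng.normal(size=(8, input_dim)):
    h, c = lstm_cell(x, h, c, W, b)
print(h.shape, c.shape)  # (5,) (5,)
```

Note that C_t is updated additively (f_t * C_{t-1} plus new content) rather than being squashed through a nonlinearity at every step, which is what lets information and gradients survive over long spans.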

1.2.3 Bidirectional RNNs

- Motivation

- In a standard RNN, each hidden state is determined by the hidden state at the previous time step and the current input.

- In many applications, however, the prediction at a position depends on the whole input sequence, including later positions.

- Examples: handwriting recognition, speech recognition

- Figure
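A bidirectional RNN can be sketched by running one RNN left to right and a second, independently parameterized RNN right to left, then concatenating their hidden states at each position. This assumes simple tanh RNN cells and concatenation as the combination step; the helper names are hypothetical.

```python
import numpy as np

def rnn_states(xs, W_x, W_h, b):
    """Hidden states of a simple tanh RNN run left to right over xs."""
    h = np.zeros(W_h.shape[0])
    hs = []
    for x in xs:
        h = np.tanh(W_x @ x + W_h @ h + b)
        hs.append(h)
    return np.stack(hs)

def birnn(xs, fwd, bwd):
    """Concatenate forward and backward hidden states at each time step."""
    h_fwd = rnn_states(xs, *fwd)
    # Run the backward RNN on the reversed sequence, then flip its states back
    # so that position t summarizes x_t, ..., x_T.
    h_bwd = rnn_states(xs[::-1], *bwd)[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)

rng = np.random.default_rng(0)
D, H, T = 4, 3, 6

def make_params():
    return (rng.normal(scale=0.5, size=(H, D)),
            rng.normal(scale=0.5, size=(H, H)),
            np.zeros(H))

fwd, bwd = make_params(), make_params()
xs = rng.normal(size=(T, D))
out = birnn(xs, fwd, bwd)
print(out.shape)  # (6, 6): T positions, H forward + H backward features
```

Each position now sees both its left and its right context, which is what tasks such as speech recognition need.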

2 Convolutional Neural Networks (CNNs)

2.1 Overview

- Applications:

- CV tasks

- NLP tasks: sentiment classification, relation classification

- Good at extracting local, position-invariant patterns:

- CV: colors, corners, textures, etc.

- NLP: phrases and grammatical structures

- How patterns are extracted:

- Compute a representation for every possible n-gram in the sentence

- No reliance on external linguistic tools (such as dependency parsing)

2.2 Example CNN Structure

- Structure: input layer, convolution layer (filters), max-pooling layer (over the extracted features), nonlinear layer

2.2.1 Input layer

Each word is converted to a vector representation via word embeddings.

X is the matrix of word vectors: m is the number of words (6 here) and d is the dimension chosen for the word embeddings, so X is an m × d matrix.
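The input layer is just a table lookup. This sketch uses a hypothetical six-word sentence and a randomly initialized embedding table; in practice the table is learned during training or loaded from pretrained word vectors.

```python
import numpy as np

# Hypothetical toy sentence and vocabulary (m = 6 words).
sentence = "the movie was not very good".split()
vocab = {w: i for i, w in enumerate(sorted(set(sentence)))}
d = 5                                            # embedding dimension
E = np.random.default_rng(0).normal(size=(len(vocab), d))

# Input layer: one d-dimensional row per word, so X has shape (m, d).
X = E[[vocab[w] for w in sentence]]
print(X.shape)  # (6, 5)
```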

2.2.2 Convolution layer

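In the figure, the second row indicates the selected n-gram (a window of N consecutive words).

W: the convolution kernel (filter) that slides over the input

f: the feature representation produced by the convolution

The convolution kernel's parameters are shared globally: the same W is applied at every position.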
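The convolution and max-pooling steps can be sketched in NumPy. Assumptions not stated in the notes: each window concatenates n consecutive word vectors, tanh is the nonlinearity, and there are k filters; since tanh is monotonic, applying it before or after max pooling gives the same pooled values. `conv1d_text` and `max_pool` are hypothetical helper names.

```python
import numpy as np

def conv1d_text(X, W, b):
    """Slide k filters of width n over every n-gram window of X.

    X: (m, d) word vectors; W: (k, n, d); b: (k,).
    Returns F: (m - n + 1, k), one feature per filter per n-gram position.
    """
    m, d = X.shape
    k, n, _ = W.shape
    W_flat = W.reshape(k, n * d)  # the same weights are applied at every position
    windows = np.stack([X[i:i + n].reshape(n * d) for i in range(m - n + 1)])
    return np.tanh(windows @ W_flat.T + b)

def max_pool(F):
    """Max over positions: keeps each filter's strongest n-gram response."""
    return F.max(axis=0)

rng = np.random.default_rng(0)
m, d, n, k = 6, 5, 3, 4
X = rng.normal(size=(m, d))
W = rng.normal(scale=0.3, size=(k, n, d))
b = np.zeros(k)

F = conv1d_text(X, W, b)
print(F.shape)            # (4, 4): m - n + 1 = 4 trigram positions, k = 4 filters
print(max_pool(F).shape)  # (4,): fixed size regardless of sentence length
```

Max pooling is what makes the detected pattern position-invariant: a filter reports how strongly its n-gram pattern occurs anywhere in the sentence, not where.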

2.2.3 Comparison of CNNs and RNNs

边栏推荐

- HSPF (hydraulic simulation program FORTRAN) model

- MySQL - multi table query - seven join implementations, set operations, multi table query exercises

- Go language pack management

- 【Pyspark基础】行转列和列转行(超多列时)

- 怎么在中金证券购买新课理财产品?收益百分之6

- Lazily doing nucleic acid and making (forging health code) web pages: detained for 5 days

- Mysql database commands

- Summary of communication with customers

- rogabet note 1.1

- Summary of yarn capacity scheduler

猜你喜欢

Intranet penetration learning (I) introduction to Intranet

【类的组合(在一个类中定义一个类)】

npm Warn config global `--global`, `--local` are deprecated. Use `--location=global` instead

Eight transformation qualities that it leaders should possess

Alibaba cloud and parallel cloud launched the cloud XR platform to support the rapid landing of immersive experience applications

Unity & facegood audio2face drives face blendshape with audio

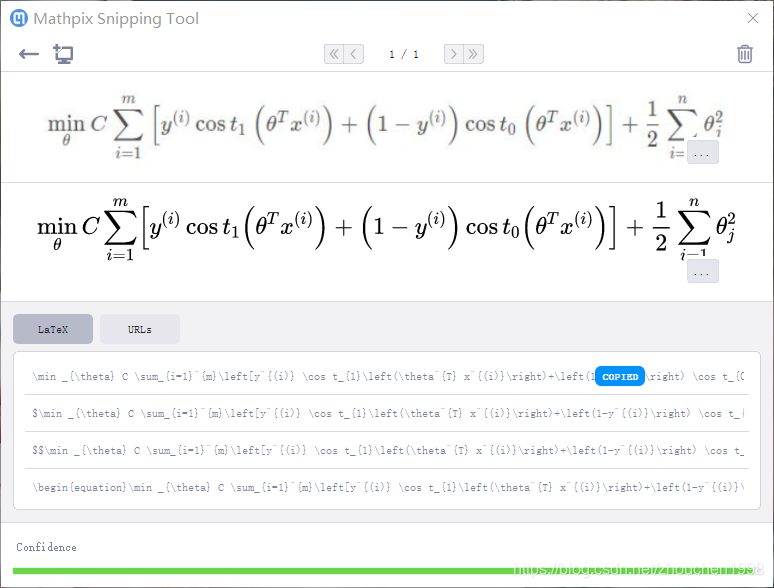

Mathpix formula extractor

About the acid of MySQL, there are thirty rounds of skirmishes with mvcc and interviewers

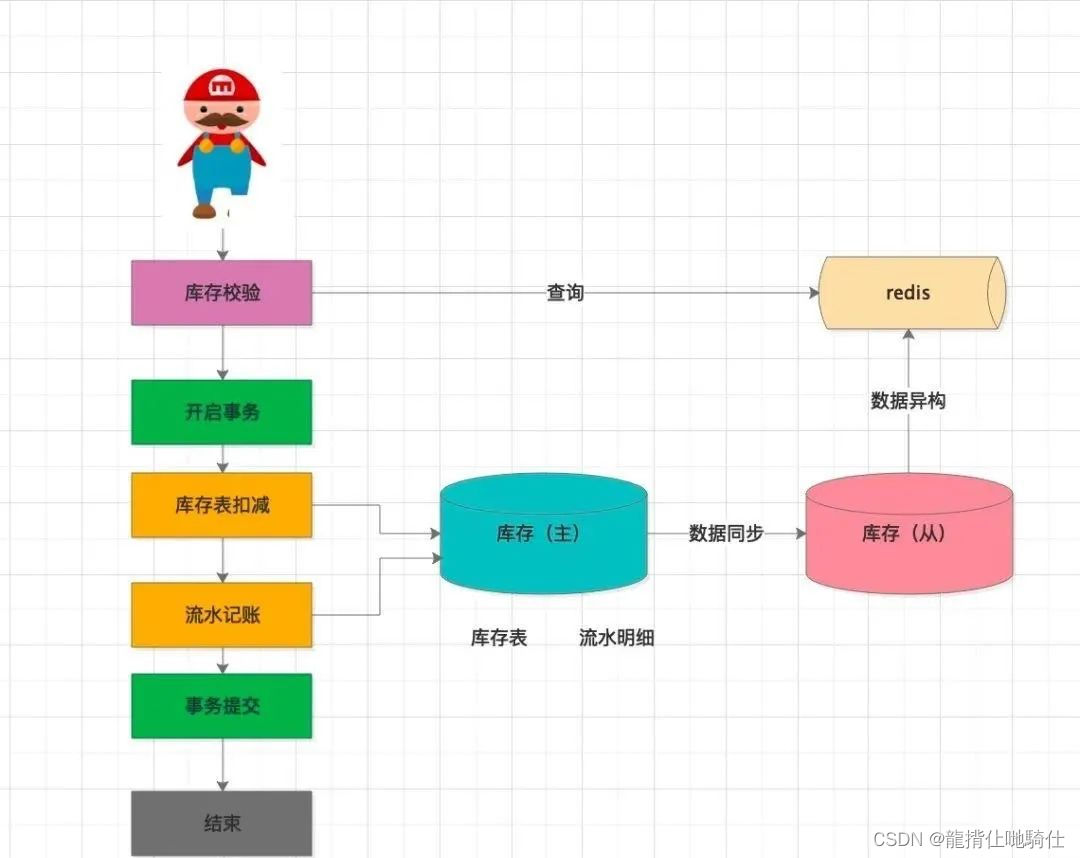

How does redis realize inventory deduction and prevent oversold? (glory Collection Edition)

C synchronous asynchronous callback state machine async await demo

随机推荐

Shenzhen Merchants Securities account opening? Is it safe to open a mobile account?

Sword finger offer 15. number of 1 in binary

Codeforces Round #809 (Div. 2)(A~D2)

From front-line development to technical director, you are almost on the shelf

Thank Huawei for sharing the developer plan

[jzof] 04 search in two-dimensional array

CAD sets hyperlinks to entities (WEB version)

None of the most complete MySQL commands in history is applicable to work and interview (supreme Collection Edition)

93. Recursive implementation of combinatorial enumeration

Use of cache in C #

Information System Project Manager - Chapter 10 project communication management and project stakeholder management

Scientific computing toolkit SciPy data interpolation

IO flow overview

Intel internship mentor layout problem 1

Scientific computing toolkit SciPy image processing

Brand new: the latest ranking of programming languages in July

Huawei cloud data governance production line dataarts, let "data 'wisdom' speak"

Es+redis+mysql, the high availability architecture design is awesome! (supreme Collection Edition)

Baidu interview question - judge whether a positive integer is to the power of K of 2

Baidu PaddlePaddle easydl helps improve the inspection efficiency of high-altitude photovoltaic power stations by 98%